Path forward for Data Governance: Existence Over Essence

From Standardizing Meaning to Sustaining Accountability in AI-Driven Systems

About Our Contributing Expert

Winfried Etzel | Data Governance & Data Management Specialist & Podcast host at MetaDAMA

Winfried is a senior data governance and data management practitioner, educator, and community builder with deep experience across energy, consulting, and public-sector organizations. He currently serves as Senior Specialist in Data Management at Equinor. Formerly, he served as Manager of Data Governance & Architecture at Aker BP and did extensive consulting work with Bouvet ASA.

As a prominent contributor to the data community, he is the host of the MetaDAMA podcast and has been recognised as one of the Nordic 100 in Data, Analytics & AI and nominated multiple times as Data & AI Influencer of the Year.

Winfried’s work is grounded in a strongly socio-technical view of data governance, especially as organizations embed automation, AI, and increasingly autonomous systems into their core operations. He complements this foundation with deep professional certification, including CDMP Master, Microsoft Azure AI Fundamentals, and Generative AI credentials. We’re thrilled to feature his unique insights on Modern Data 101!

We actively collaborate with data experts to bring the best resources to a 15,000+ strong community of data leaders and practitioners. If you have something to share, reach out!

🫴🏻 Share your ideas and work: community@moderndata101.com

*Note: Opinions expressed in contributions are not our own and are only curated by us for broader access and discussion. All submissions are vetted for quality & relevance. We keep it information-first and do not support any promotions, paid or otherwise.

Let’s dive in

Rethinking Data Governance in Times of AI

Data governance is often experienced as a burden: slow, bureaucratic, and disconnected from how organizations actually work. Yet, at the same time, it is increasingly recognized as indispensable, especially as organizations embed automation, AI, and autonomous agents into their core operations.

This tension stems from a fundamental mismatch between how data governance is conceptualized and the reality of modern socio-technical systems.

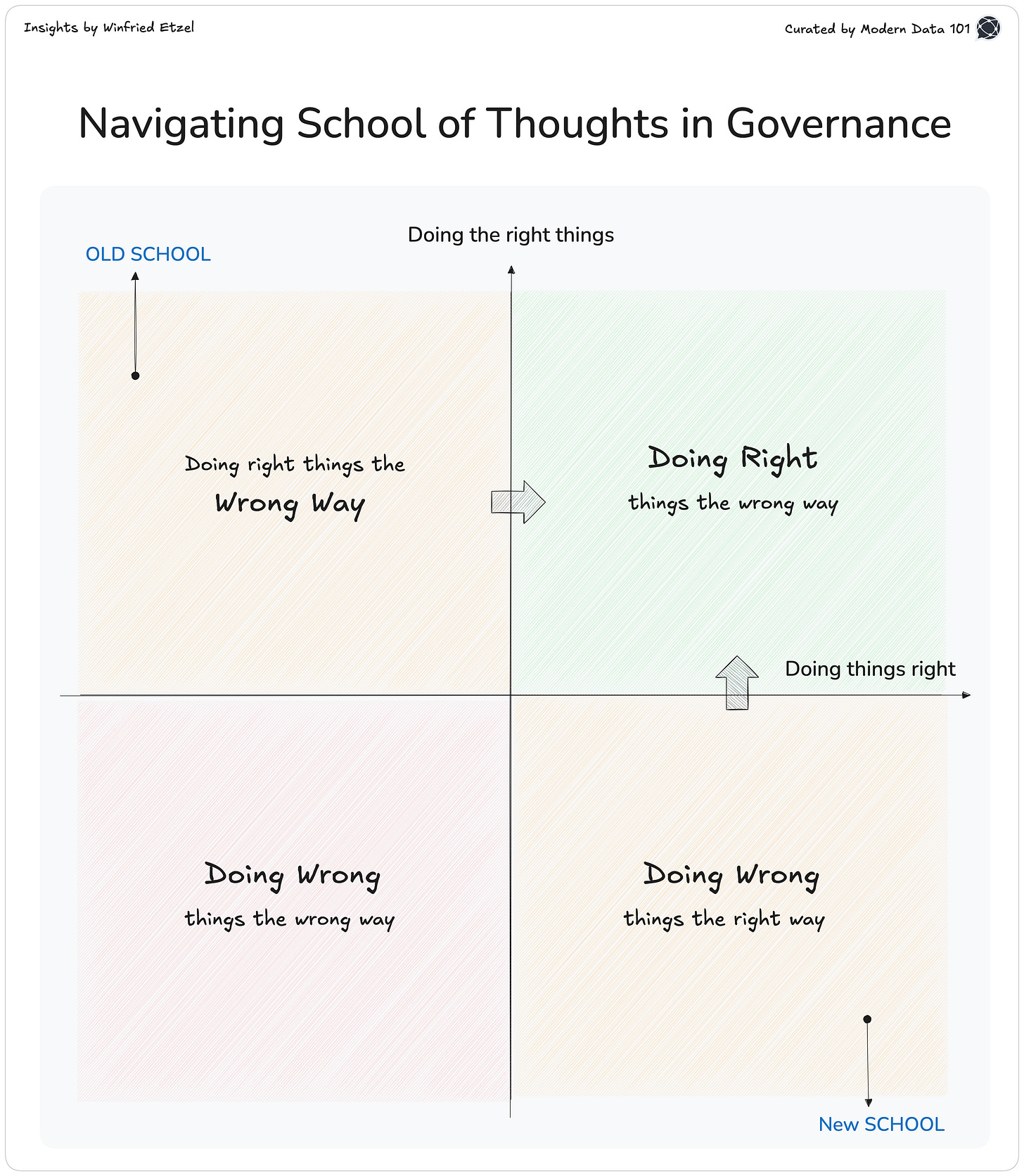

Traditional, Old School data governance is rooted in an essence-based worldview:

…the assumption that data has inherent meaning and value that can be defined, standardized, and controlled. A worldview that was rational in centralized/aggregated, slow-moving systems.

In distributed, AI-enabled environments, this assumption no longer holds.

Yes, we still need to respect regulatory realities: some data carries non-negotiable obligations, safety-critical data has inherent risk implications, and some meaning is constrained rather than freely enacted. But a slowly unfolding realization is that even these non-negotiables are limited by context and purpose.

Data has no intrinsic value. It does not exist in a vacuum.

Value emerges through contextual use and purpose.

Meaning is not stable, but is enacted with intentionality. Yet, New School data governance has not solved the inherent issues of data governance either.

With a focus on automation and design patterns, we finally found the right methodologies to scale data governance efforts. Yet we are still not doing “the right thing the right way,” because…

…scale without intentionality automates existing assumptions.

This is not because we got it “wrong”, but because data governance has evolved from a compliance focus to a value focus. Now it needs to recognize where it has become contextually obsolete and move towards becoming a motor for AI advancement.

Drawing on the socio-technical foundations of data governance, the challenges introduced by autonomous AI systems, the existential reframing of value and meaning, and the operational shift toward embedded and computational data governance, we are required to propose a different lens:

Data governance is not about controlling data or AI.

It is about intentionally structuring socio-technical systems so that meaning, direction, and accountability can be sustained over time. Don’t think of this as a philosophical indulgence. This existential reframing is a practical necessity for governing AI at scale.

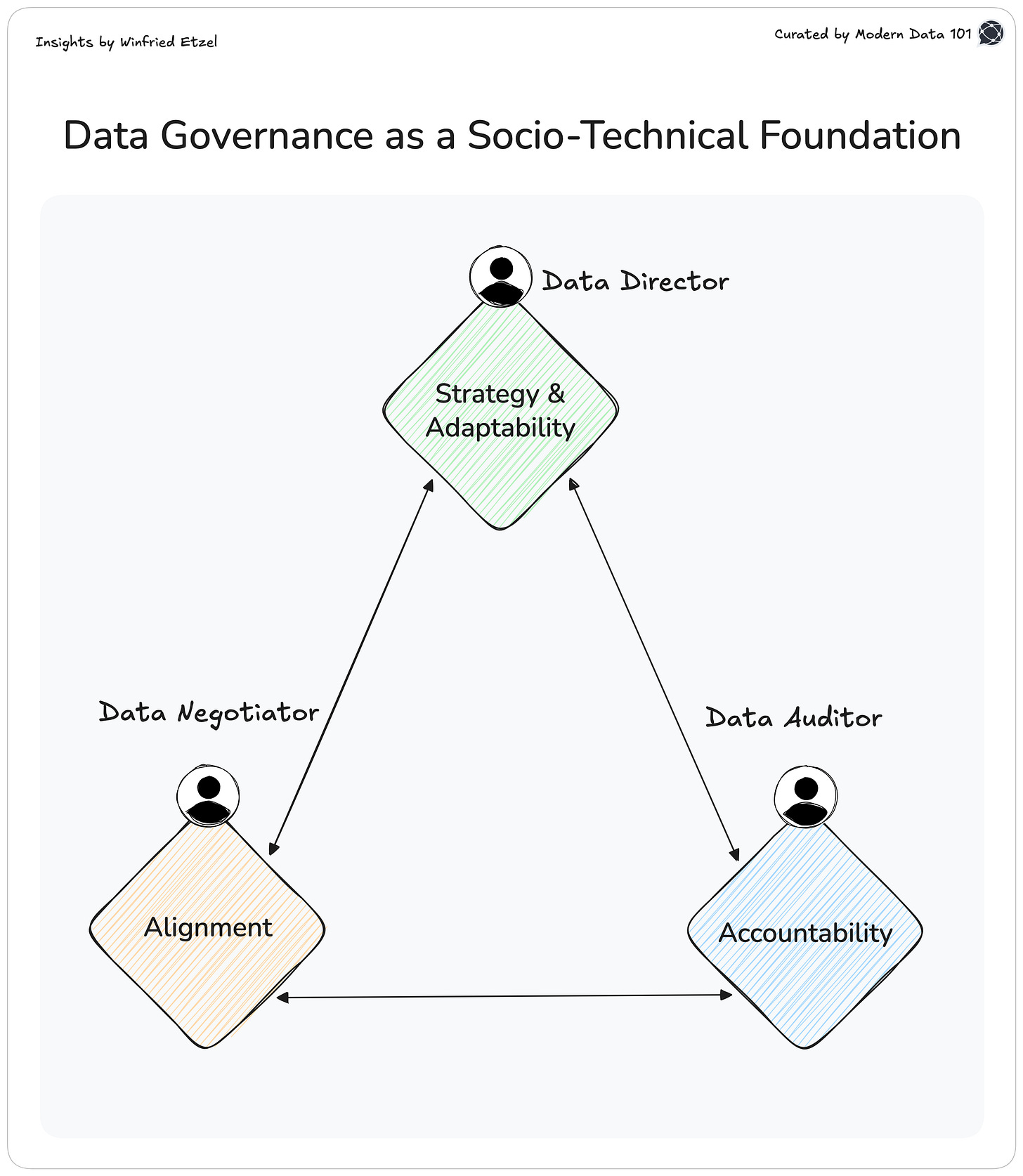

Data Governance as a Socio-Technical Foundation

At its core, data governance is not a technical discipline, but largely a social system.

Data governance exists to ensure that collective action remains purposeful, accountable, and legitimate within complex socio-technical systems.

Its role is to help organizations:

negotiate requirements and meaning,

set direction for data, and

maintain accountability in relation to data and data-driven decisions.

Simply put, a socio-technical system is any system where humans and machines work together to achieve goals. And this kind of system, with all its interactions and connectedness between humans and machines, has become the reality in most organizations.

From this perspective, the persistent fragmentation between corporate governance, IT governance, data governance, and AI governance is artificial.

These are not different kinds of governance; they are different contexts in which the same governance logic must operate. The purpose remains constant while only the methods evolve.

This framing is critical, because it exposes the core problem facing data governance today: it has often been treated as an operational or compliance function, rather than as a foundational element of how organizations coordinate action and responsibility.

The Limits of Essence-Based Data Governance

Both Old School and New School data governance are built on an implicit belief: that data has an essence. This belief manifests in familiar practices:

defining canonical data models,

enforcing enterprise-wide definitions,

measuring quality against fixed rules,

assuming alignment once standards are met.

Such practices aim to stabilize meaning by fixing it in advance. They presume that if data is correct, meaning will follow, and that interpretation can therefore be ignored. While there is value in these practices, they treat data and data standards as constants, when in reality these are temporary agreements, not eternal truths.

This approach remains viable in centralized, slow-moving systems, in bounded contexts, or where deterministic regulatory rules apply. But it breaks down in distributed data landscapes, where data is created, interpreted, and reused across domains with different goals, constraints, and assumptions.

Here, meaning must be negotiated constantly. This calls for a shift in the way we think: from essence to existence. Data cannot reliably carry meaning within itself. Meaning arises only through use, context, and purpose.

Once this is accepted, the role of data governance changes fundamentally.

AI as an Accelerator of Data Governance Failure

Generative AI systems are probabilistic, adaptive, and increasingly autonomous. They consume and produce data like other systems, and the quality of their outputs can have immediate and profound consequences for people, organizations, and society.

Crucially, AI systems act without understanding intent or taking accountability for interpretation. They operate on statistical proxies of meaning and optimize patterns.

When meaning is underspecified or assumed to be stable, AI will still act, but often in ways that feel misaligned, unfair, or irresponsible.

Data governance fails when we project understanding onto systems that operate on unknown or misaligned interpretations of intention. Hallucinations, bias, drift, and opacity are the most well-known failure modes of AI.

But they are not merely technical defects. They are symptoms of data governance that failed to:

negotiate context,

provide clear direction,

or maintain accountability as systems evolved.

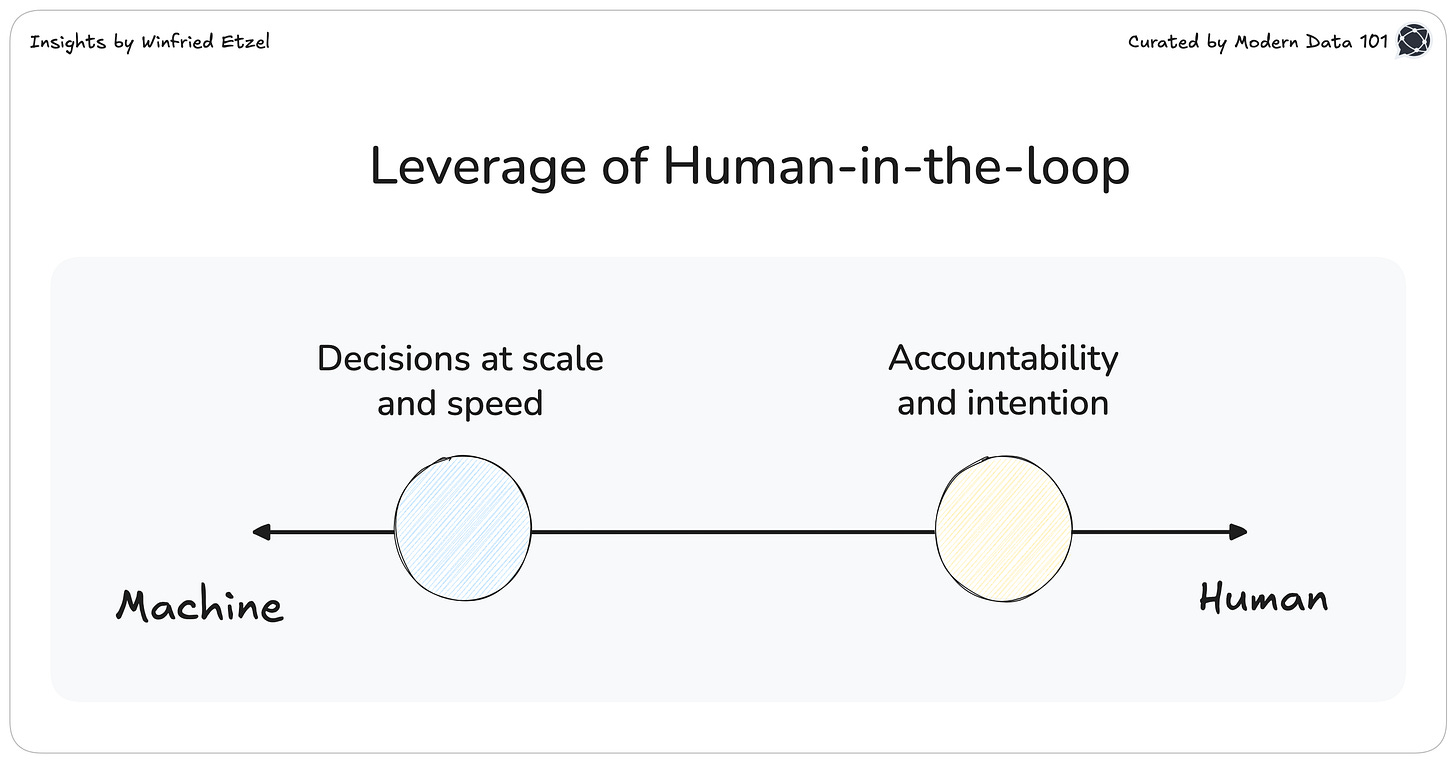

AI compresses decision cycles and shifts action away from human presence. This renders data governance models that rely on retrospective control or human-in-the-loop approvals structurally inadequate.

What this reframing makes explicit is that the challenge of AI governance is an acceleration of existing challenges. The same socio-technical questions data governance has always faced now surface faster, at greater scale, and with less room for retrospective correction.

Embedded, computational data governance is the only way the existing ambition survives.

From Control to Accountability

A common reaction to AI-driven incidents is to demand more control: additional approvals, stricter rules, heavier oversight. But control does not create meaning; it only constrains behavior.

The deeper issue is accountability. Existential thinking reminds us that accountability cannot be delegated away. Organizations remain accountable for the systems they design, deploy, and scale, even when those systems act autonomously.

Data governance exists to make that accountability explicit and actionable. This is why there is a distinction between human-in-the-loop and human-designed feedback loops. The former attempts to preserve control by inserting humans into execution. The latter recognizes that scale requires autonomy, but insists that humans remain accountable for defining intent, boundaries, and escalation paths.
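To make the distinction concrete, here is a minimal Python sketch of a human-designed feedback loop. It is an illustration under stated assumptions, not a reference implementation: the `Boundary` structure, the `decide` function, the example limits, and the role names are all hypothetical. Humans define the boundaries and who is accountable for each; the system acts on its own inside them and escalates only when one is breached.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Boundary:
    """A human-defined limit on autonomous action (hypothetical structure)."""
    name: str
    owner: str                       # accountable human or role
    allows: Callable[[dict], bool]   # returns True when the action is inside the limit


def decide(action: dict, boundaries: List[Boundary]) -> dict:
    """Execute autonomously inside the boundaries; escalate to owners outside them."""
    breached = [b for b in boundaries if not b.allows(action)]
    if not breached:
        return {"status": "executed", "escalate_to": []}
    return {
        "status": "escalated",
        "escalate_to": sorted({b.owner for b in breached}),
    }


# Illustrative boundaries; the thresholds and roles are assumptions.
boundaries = [
    Boundary("credit_limit", "head_of_credit", lambda a: a["amount"] <= 10_000),
    Boundary("no_minors", "data_protection_officer", lambda a: a["customer_age"] >= 18),
]

routine = decide({"amount": 2_500, "customer_age": 41}, boundaries)
unusual = decide({"amount": 50_000, "customer_age": 41}, boundaries)
```

No human approves the routine case, yet a named human is still answerable for every boundary: people sit in the design of the loop rather than in its execution.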

The Stable Core: Data Negotiation, Data Direction, Data Audit

Despite technological change, the core roles of data governance remain remarkably stable. These core roles that data governance has to play in an organization are a defining part of what data governance is.

The definition of data governance needed for what is to come differs from those you may have encountered before. It is aligned with corporate governance, yet defined for data:

Data governance is a human-based system by which data assets in a socio-technical system are directed and overseen, and by which the organization is held accountable for achieving its defined purpose.

Based on this definition, data governance consistently fulfills three functions:

Data Negotiation

Governance aligns business objectives, regulatory constraints, ethical considerations, and contextual realities. In AI systems, negotiation governs meaning: what data represents, how outputs should be interpreted, and where boundaries lie.

Data Direction

Governance provides strategic direction. It answers not only whether systems perform correctly, but whether they should exist at all. Data Direction ensures that automation serves organizational purpose rather than undermining it.

Data Audit

Data governance maintains accountability. In autonomous systems, audit shifts from periodic review to continuous assurance, real-time signals, observability, and feedback loops that allow responsibility to be exercised before harm occurs.
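As a rough illustration of what continuous assurance could look like in practice, the Python sketch below checks a rolling window of recent system outputs each time a new one arrives, instead of waiting for a periodic review. The `ContinuousAudit` class, the window size, the threshold, and the owner name are assumptions made for this example only.

```python
from collections import deque
from typing import Optional


class ContinuousAudit:
    """Rolling-window assurance signal (hypothetical sketch)."""

    def __init__(self, window: int, max_violation_rate: float, owner: str):
        self.events = deque(maxlen=window)   # keeps only the most recent outcomes
        self.max_violation_rate = max_violation_rate
        self.owner = owner                   # who is accountable for responding

    def record(self, violated: bool) -> Optional[str]:
        """Record one outcome; return an escalation message if the rate is too high."""
        self.events.append(violated)
        rate = sum(self.events) / len(self.events)
        if rate > self.max_violation_rate:
            return f"escalate to {self.owner}: violation rate {rate:.0%}"
        return None


audit = ContinuousAudit(window=5, max_violation_rate=0.4, owner="model_owner")
signals = [audit.record(v) for v in [False, False, True, True, True]]
```

The first three observations produce no signal; the fourth pushes the violation rate to 50% and triggers an escalation to the accountable owner while the system is still running.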

These roles are existential stabilizers in complex socio-technical systems. They operate on different levels, from strategy to tactics to assurance. The point is to think of data governance not as a way to monitor and enforce, but as a discipline that designs for accountability.

Governance Is One

A key implication of this framing is that governance cannot be fragmented in purpose, even when execution is context-specific. Corporate governance, IT governance, data governance, and AI governance share the same purpose: directing collective action and ensuring accountability.

Treating them as separate disciplines creates gaps precisely where AI systems operate across boundaries. Data governance must be understood as part of organizational governance, not as a technical add-on, while remaining context-sensitive in execution. AI makes this integration unavoidable. This is the very reason why the wording of the data governance definition above is aligned with the syntax of the ISO definition of corporate governance (ISO 37000:2021).

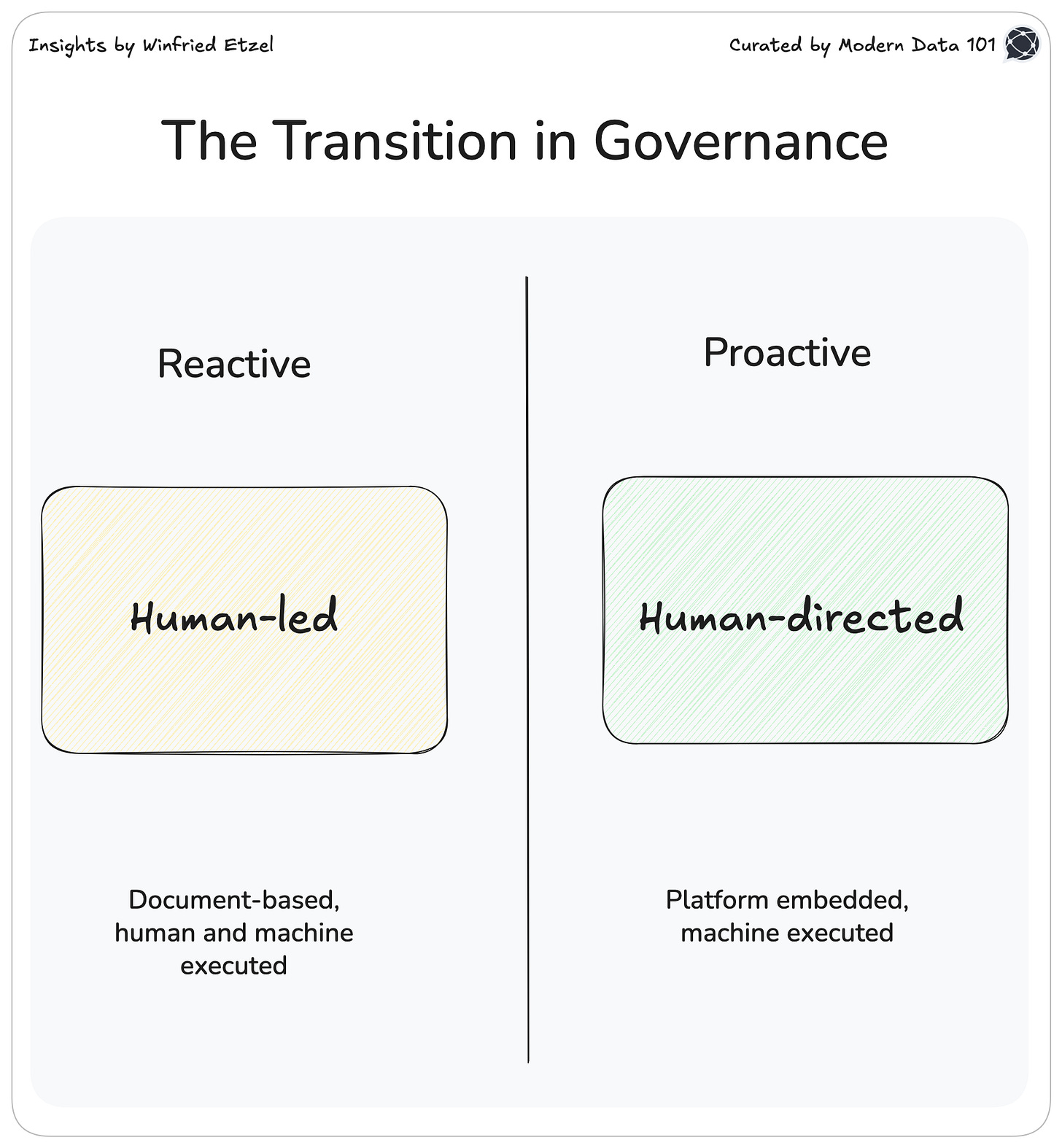

From Human-Led to Human-Directed Governance

Old School data governance depends on human compliance, documentation, and incentives. This approach does not scale once decisions are automated and systems begin to act autonomously.

New School data governance approaches improve speed and decentralization, but still rely on implicit assumptions about meaning and accountability that break down once decisions are executed autonomously by systems.

Embedded data governance responds by making data governance computable. Enforcement, monitoring, and escalation are delegated to systems, not as a replacement for human judgment, but as an extension of it.

Intent, constraints, and accountability are encoded in contracts, policies, and control mechanisms that platforms and AI agents can execute consistently at scale. This is the automation of existing data governance patterns, redesigned for environments where behavior emerges at runtime rather than being fully predefined.
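One way to picture intent, constraints, and accountability encoded in a contract is the short Python sketch below. It is a simplified assumption of what such a contract might contain, not a description of any particular platform or standard; the `DataContract` class, its fields, and the example dataset are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class DataContract:
    """Machine-checkable record of a negotiated agreement (hypothetical sketch)."""
    dataset: str
    intent: str                      # the negotiated purpose, in plain language
    accountable_owner: str           # the human role that answers for the data
    allowed_purposes: List[str]
    constraints: Dict[str, Callable[[Any], bool]] = field(default_factory=dict)

    def check(self, record: Dict[str, Any], purpose: str) -> List[str]:
        """Return a list of findings; an empty list means the use is within contract."""
        findings = []
        if purpose not in self.allowed_purposes:
            findings.append(f"purpose '{purpose}' not covered by contract")
        for column, rule in self.constraints.items():
            if column not in record or not rule(record[column]):
                findings.append(f"constraint failed: {column}")
        return findings


# Illustrative contract; names, purposes, and limits are assumptions.
contract = DataContract(
    dataset="well_sensor_readings",
    intent="Forecast maintenance needs for production equipment",
    accountable_owner="operations_data_owner",
    allowed_purposes=["maintenance_forecast"],
    constraints={
        "pressure_bar": lambda v: 0 <= v <= 700,
        "unit": lambda v: v == "bar",
    },
)

ok = contract.check({"pressure_bar": 312.5, "unit": "bar"}, "maintenance_forecast")
bad = contract.check({"pressure_bar": -4, "unit": "bar"}, "marketing")
```

Checking purpose alongside values reflects the argument of this piece: the same record can be acceptable for one use and out of contract for another, so the contract evaluates the use, not just the data.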

As systems become agentic, data governance must observe and steer behavior over time. Humans negotiate meaning, set direction, and remain accountable for outcomes. Machines enforce constraints, monitor behavior, trigger escalation, and provide feedback loops through which data governance can learn and adapt.

MD101 Support ☎️

If you have any queries about the piece, feel free to connect with the author(s). Or feel free to connect with the MD101 team directly at community@moderndata101.com 🧡

Author Connect

Connect with Winfried on LinkedIn 🤝🏻

Or hear more from Winfried on his Substack ⬇️


From MD101 team 🧡

🧡 Join 1500+ Data Leaders Subscribed to The State of Data Products

Introducing The Catalyst, a special edition of The State of Data Products that compiles insights around the ups and downs in the data and AI arena that fast-tracked change, innovation, and impact.

📘 The first release of The Catalyst is our annual wrap-up, compiling the most interesting moments of 2025 in AI & data product developments, with expert insights from across the industry!

Some focal topics

The gap in AI readiness for enterprises

The shift towards context engineering

Addressing governance debt with a focus on lineage gaps

Revisiting fundamentals for better alignment between business vision and AI.

The traditional view of data as essence-based is limiting to the user or organization or changing external operating environments.

But I worry in the same vein that a ‘socio-technical system’ framework can create rigidity, challenges to scaling, and is not easily adaptable to changing external circumstances beyond a team or company’s control.

‘Mission/Purpose-defined value creation objectives’ might be something that could make the framework more flexible, adaptable and scalable perhaps.

I have a patent pending regarding a new governance layer within AI where the human, exactly as you described, is proactive with their governing intent.

If you were to build a system to address the problems you/we are all seeing with data governance… how would you design it? I’d love to pick your brain on your perspective — I want to live in a world where we own our own data and govern our own intent.