Thriving in the Agentic Era: A Case for the Data Developer Platform

How to cope now that every team is a data team, the strategic levers of data platforms built on the DDP Standard, and the architectural nuances of an AI-first data system

Data isn’t broken. It is the hidden pulse of recurring patterns. The way we deliver data, though, is broken.

Every team today produces data, but few can build with it. We’ve outsourced the core act of delivery to a patchwork of tools, workflows, and duct-taped orchestration, held together by the brute force of smart people compensating for bad infrastructure.

If we stop thinking in dashboards and start thinking in actions, the tables turn.

The Data Developer Platform (DDP) isn’t a layer or a design paradigm. It’s a complete platform construct that treats data like software, developers like product teams, and models like the interface between intent and value.

The Shift: Why Data Developer Platforms Now

AI didn’t just expand the market; it changed the requirements.

Models need structure. Agents need context. Enterprises need systems that don’t break when the interface shifts from dashboards to dialogue.

Every team is now a data team, whether they planned for it or not. But the tooling hasn’t caught up. It’s still service-layer patchwork pretending to be a platform.

DDP meets the moment because it’s software-native at the core. Built for velocity, semantics, and scale instead of simply pipeline or infrastructure management.

A Quick Recap: What is a Data Developer Platform

The Data Developer Platform (DDP) Specification is an open specification that codifies how modern data platforms should be built to enable data-first organisations. It is inspired by the proven Internal Developer Platform (IDP) standard from software engineering, and it provides a framework that other organisations can adopt and adapt.

A Data Developer Platform is a Unified Infrastructure Specification to abstract complex and distributed subsystems and offer a consistent outcome-first experience to non-expert end users. A Data Developer Platform (DDP) can be thought of as an internal developer platform (IDP) for data engineers and data scientists. Just as an IDP provides a set of tools and services to help developers build and deploy applications more easily, a DDP provides a set of tools and services to help data professionals manage and analyze data more effectively. ~ datadeveloperplatform.org

Learn more about the specifics here: What, Why, How

When we say Data Developer Platform, we refer to data platforms built in the image of DDP or that are an implementation of the Data Developer Platform Standard.

Strategic Leverage of Data Developer Platforms

AI Needs Architecture: A Platform that Remembers

We’re entering the agentic era. Not led by dashboards, but led by action. Systems that don’t just describe the business, but drive it.

And yet most enterprise stacks are stuck. Why? Because they were never built to reason. The Data Developer Platform is. It doesn't treat AI as a layer on top; it treats it as a new kind of user, embedded from architecture to interface.

The core shift is subtle but seismic: data products become semantic assets. Workflows become intent-driven. Interfaces become governed contracts. AI doesn’t just query data, it participates in its evolution. Agents operate with institutional memory, grounded in lineage, guided by policy, and capable of proposing change.

How DDP enables AI-native enterprises:

Model Context Protocol (MCP): A governed interface where agents query with awareness of semantics, lineage, and compliance

Semantic Memory: AI agents inherit institutional context, not just data access, but business reasoning

Intent Feedback Loops: Agents propose changes to data products via GitOps, enabling closed-loop optimisation

Cross-Domain Coordination: Composable workflows across sales, supply chain, finance, all speaking the same semantic language

AI-Safe Data Foundation: Built-in quality and policy layers that prevent hallucination, drift, and misuse

Programmable Infrastructure: Agent workloads are scheduled, scaled, and observed like first-class platform citizens

DDP turns AI from an integration problem into a systems advantage.

Market Dynamics & Expansion Levers

Enterprise data needs have outgrown the dashboard. What was once about insight is now about action. Teams want workflows instead of reports. Actionable agents, not just queries.

And existing stacks weren’t built to meet that bar. The Data Developer Platform steps in where modern tooling fragments, creating a shared surface that connects models, metadata, and execution.

DDP plays horizontally by design. It integrates where the org already lives and activates a semantic spine for smart integrations. The architecture is built to be forward-looking, from semantic modelling to closed-loop agents.

Expansion Levers:

Native integration points: Works with existing tools instead of forcing migrations

Wedge to platform: Starts with elemental data products, then expands into semantic networks, vertical lineage, and agentic networks

AI alignment: Connects clean data products to agentic workflows and copilots

Compounding Product Surface + Semantic Contracts

Most data platforms fragment as they scale. Every new use case creates more drift, not more alignment.

DDP inverts that pattern. Every new product adds structure. Every model becomes reusable.

This is where platform leverage shows up: in shared language. DDP’s semantic spine turns internal reuse into network effects.

Strategic levers:

Models as interfaces: Once defined, models can be reused across teams, tools, and agents

Productized data: Shared contracts reduce variance, increase trust, and accelerate integration

Governance by design: Controls are embedded in delivery, not bolted on

Semantic spine: A shared language across domains, data products, agents, and infrastructure

Ecosystem gravity: Reuse grows with usage, compounding value at every layer

Enterprise Transformation Through DDP

Every enterprise wants better decisions, faster. But speed without structure leads to entropy. DDP reintroduces structure as a scaling surface where semantics, quality, and intent are encoded at the product layer, not patched on downstream.

The payoff is organisational. Domains stop building from scratch. Analysts become product owners. Engineers ship with autonomy. And data stops being a dependency; it becomes a capability.

That’s how platforms deliver value: by making more possible instead of doing more.

Strategic Outcomes:

Faster time to insight: Declarative models reduce translation overhead and time-to-value

Lower marginal cost of delivery: Reuse and versioning replace rebuilds and handoffs

Productized trust across domains: Shared semantics and governance embedded at the source

Stickier adoption: Teams build on the platform, not just with it, raising LTV over time

Compounding value: Each data product increases platform utility and ecosystem leverage

The Architectural Opportunity

The Data Developer Platform reintroduces structure as a surface area for scale. It doesn’t compete with tools; it aligns them. It offers a horizontal foundation where developers do more than operate pipelines: they design durable, semantic systems.

This architecture standard doesn’t bet on a single paradigm. It’s built to absorb them. Whether it’s data mesh, data products, or agentic networks, the platform holds because it’s grounded in an evolutionary structure. It gives teams a way to evolve without collapsing under every new model. While the concepts can change, the foundation doesn’t have to.

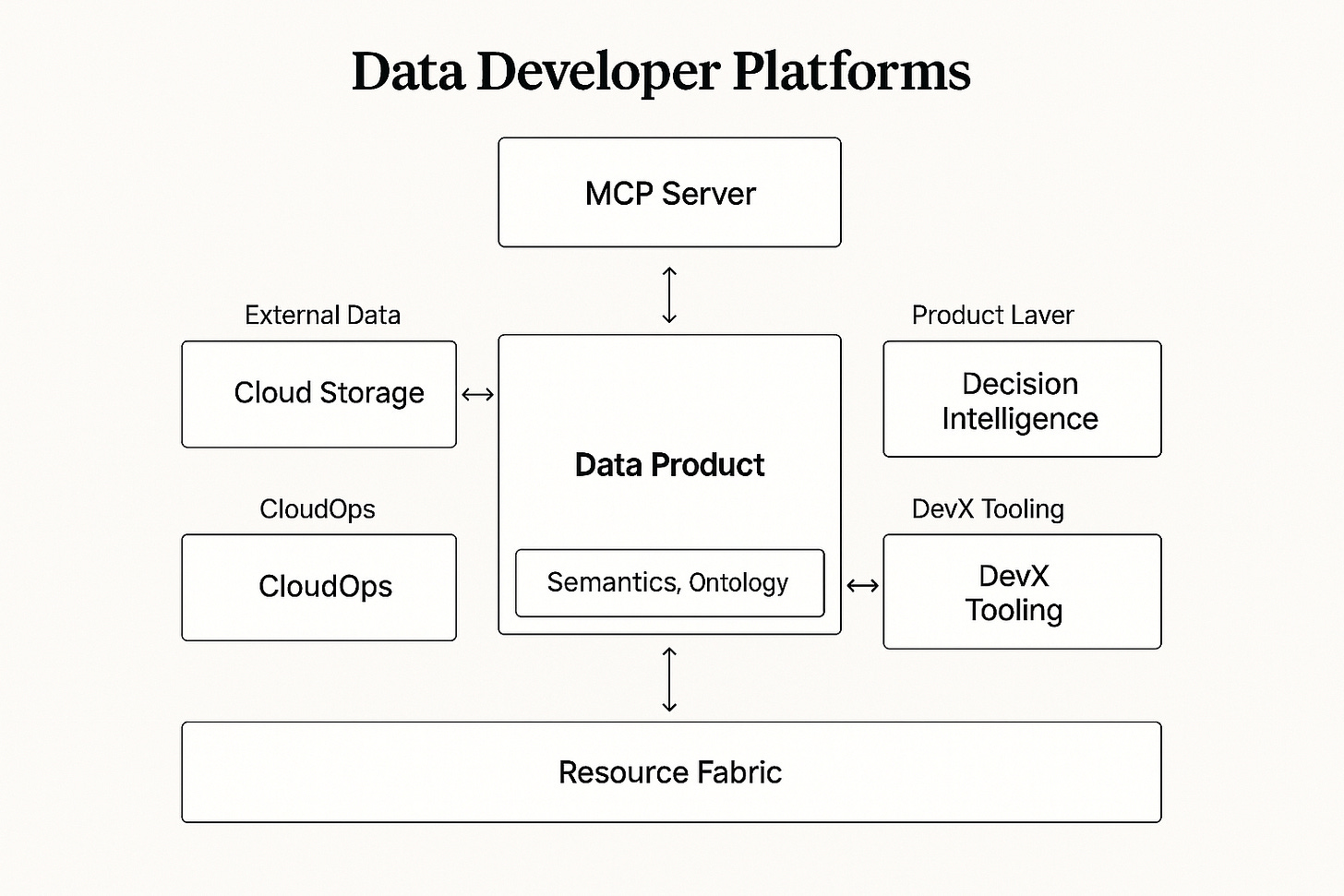

The Open Layers of Data Developer Platforms

The architecture is open by intent. Designed to separate concerns, reduce coupling, and create room for scale. These five architectural planes are points of leverage that open up the stack instead of enforcing technical boundaries.

1. Resource Fabric

Abstracts cloud-specific details into stable, reusable primitives. Compute, storage, and networking become composable. Portability is default.

This is where the cloud stops being a constraint. The Resource Fabric standardises compute, storage, and network primitives across providers, so teams can design without worrying about where things run. It enables dynamic provisioning based on cost, locality, and scale.

What you get is optionality: a layer that shields the system from massive lock-in risk while optimising for the realities of execution.

2. CloudOps

This layer acts as the coordination plane across cloud environments. It handles things like multi-cloud orchestration, persistent storage, and networking across zones and providers. You’ll see it managing cross-cloud scheduling, disaster recovery, and making sure networking policies stay consistent even in heterogeneous setups.

It’s the piece that makes running across clouds feel like running on one.

3. Data Engine

At the centre is a system that holds its shape, even as the tools around it change. It weaves together subsystems like:

the raw materials (streams, tables, lakehouses),

the engines that process them (Spark, Trino, Databricks; pick your flavour),

and a memory layer that tracks how things evolve: schemas, policies, lineage, intent.

It doesn’t just record what happened. It understands why. That context is what makes the system reliable, traceable, and adaptable. Semantically aware.
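As a rough illustration of what a lineage-aware memory layer makes possible, here is a minimal sketch that answers "where did this asset come from?" by walking a lineage graph upstream. The `upstream` function, the dictionary layout, and the asset names are all hypothetical and not taken from the DDP specification.

```python
from collections import deque

def upstream(lineage: dict[str, list[str]], asset: str) -> list[str]:
    """Return every ancestor of `asset`, nearest first (breadth-first walk)."""
    seen = {asset}
    queue = deque([asset])
    ancestors: list[str] = []
    while queue:
        current = queue.popleft()
        for parent in lineage.get(current, []):
            if parent not in seen:
                seen.add(parent)
                ancestors.append(parent)
                queue.append(parent)
    return ancestors

# Hypothetical lineage: each asset maps to the assets it was derived from.
lineage = {
    "revenue_report": ["orders_clean"],
    "orders_clean": ["orders_raw", "fx_rates"],
}

ancestors = upstream(lineage, "revenue_report")
print(ancestors)  # ['orders_clean', 'orders_raw', 'fx_rates']
```

A real memory layer would attach schemas, policies, and intent to each node; the traversal itself stays this simple.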

This semantic awareness enables horizontal data modelling standards, a rare opportunity for ecosystem dominance.

*Horizontal modelling is where ecosystems start to converge. When semantics are shared across teams, tools, and domains, the system stops fragmenting. Reuse becomes possible, and interfaces stay stable. Every new product doesn't start from zero.

4. Product Layer

It’s where teams define products instead of pipelines. Business logic is mapped into models. Metrics are structured as systems. The idea is to start from outcomes, not implementation.

This layer enables model-led product definitions, workflow orchestration, and a universal semantic layer that spans APIs, BI tools, and natural language interfaces. It anchors delivery around business intent and measurable outcomes.

This layer unlocks developer productivity, compresses feedback loops, reduces duplication, and opens up a compound product surface where every model can power multiple interfaces: APIs, agents, apps. What used to be one-off output becomes reusable infrastructure.

5. Interface Layer

This layer handles how data is consumed by people, systems, and machines. It exposes clean interfaces: REST and GraphQL for developers, a natural language engine for business users, and the Model Context Protocol (MCP) for AI agents. Everything runs through the same access controls and audit trails.

This is the monetisable interface: the point where data products meet users, systems, and revenue paths.
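The idea that every interface runs through the same access controls and audit trails can be sketched as a single gateway that all requests pass through. This is a minimal sketch under stated assumptions: `Request`, `Gateway`, the allow-list policy, and the product and principal names are hypothetical, and a real platform would use a far richer policy model.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass(frozen=True)
class Request:
    principal: str   # who is asking: a user, a service, or an agent
    interface: str   # e.g. "rest", "graphql", "nl", or "mcp"
    product: str     # the data product being read

@dataclass
class Gateway:
    # Hypothetical policy: product name -> principals allowed to read it.
    policy: dict[str, set[str]]
    audit_log: list[tuple[str, str, str, bool]] = field(default_factory=list)

    def handle(self, req: Request, fetch: Callable[[str], list[dict]]) -> list[dict]:
        allowed = req.principal in self.policy.get(req.product, set())
        # Every request is audited, whichever interface it arrived through.
        self.audit_log.append((req.principal, req.interface, req.product, allowed))
        if not allowed:
            raise PermissionError(f"{req.principal} may not read {req.product}")
        return fetch(req.product)

gateway = Gateway(policy={"sales.orders": {"analyst", "pricing-agent"}})

# An AI agent arriving over MCP goes through the same check as a REST caller would.
rows = gateway.handle(
    Request("pricing-agent", "mcp", "sales.orders"),
    lambda product: [{"order_id": 1}],
)
```

The point of the design is that the interface name is only recorded, never branched on: adding a new interface does not create a new path around governance.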

Overview of DDP After-effects

Unified Semantics, Distributed Systems

The Data Developer Platform doesn’t centralise for the sake of control; it standardises for reuse. Every layer of the stack, from storage to AI interfaces, shares a common semantic spine. Governance, lineage, access, and intent are expressed once and flow through the system. No more re-translating between tools, teams, or environments.

Declarative Product Delivery

Most data work is still procedural: built around tasks instead of outcomes. DDP flips the flow: teams declare business models, metrics, and dependencies. The platform handles how it gets built across engines, clouds, and teams.

This shrinks time-to-market for data products and aligns delivery with business velocity. What used to take quarters becomes part of the sprint.
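To make the declarative flow concrete, here is a minimal sketch in which teams only declare products, metrics, and dependencies, and a resolver works out the build order. The `DataProduct` class, its fields, and the product names are illustrative assumptions, not the DDP specification's own schema; the ordering uses Python's standard `graphlib` module.

```python
from dataclasses import dataclass
from graphlib import TopologicalSorter

@dataclass(frozen=True)
class DataProduct:
    name: str
    metrics: tuple[str, ...] = ()
    depends_on: tuple[str, ...] = ()

def build_order(products: list[DataProduct]) -> list[str]:
    """Derive a valid build order from declared dependencies alone."""
    graph = {p.name: set(p.depends_on) for p in products}
    # static_order() raises graphlib.CycleError if the declarations are circular.
    return list(TopologicalSorter(graph).static_order())

# Declarations can be written in any order; nobody scripts the steps by hand.
products = [
    DataProduct("revenue_dashboard", metrics=("net_revenue",), depends_on=("orders", "refunds")),
    DataProduct("orders"),
    DataProduct("refunds", depends_on=("orders",)),
]

order = build_order(products)
print(order)  # ['orders', 'refunds', 'revenue_dashboard']
```

A real platform would go on to plan execution across engines and clouds; the sketch covers only the step where the "how" is derived from the "what".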

Engineering-Grade Development for Data

Every part of the system, from schemas to security policies, is version-controlled, testable, and composable. GitOps becomes the deployment backbone. CI/CD becomes default. Data developers operate with the same rigour and velocity as application teams.

This enables safe rollbacks, full audit trails, and change control. It reduces fragility and improves cross-team reliability. Infra doesn’t become the bottleneck or the risk.
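One way to picture "testable" schemas is a compatibility check that runs in CI before a schema change is merged. The sketch below is an assumption about how such a check might look: the `breaking_changes` function, the column-to-type dictionary format, and the rule that added columns are non-breaking are all illustrative choices.

```python
def breaking_changes(old: dict[str, str], new: dict[str, str]) -> list[str]:
    """Compare two schema versions given as column -> type mappings."""
    problems: list[str] = []
    for column, old_type in old.items():
        if column not in new:
            problems.append(f"removed column: {column}")
        elif new[column] != old_type:
            problems.append(f"type changed: {column} {old_type} -> {new[column]}")
    # Columns that exist only in `new` are treated as non-breaking additions.
    return problems

# Hypothetical schema versions, as they might appear in two commits.
v1 = {"order_id": "int", "amount": "decimal"}
v2 = {"order_id": "int", "amount": "float", "currency": "string"}

problems = breaking_changes(v1, v2)
print(problems)  # ['type changed: amount decimal -> float']
```

In a GitOps setup, a non-empty result would fail the pipeline, and rolling back is simply a revert of the offending commit.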

Intent-Driven Architecture

Most platforms translate business logic after the fact. DDP makes it the starting point. Metrics, domains, and data products are defined as first-class constructs, not layered on later. It’s semantic modelling as infrastructure.

This gives organisations a living map of their business logic. It ensures that every system, from BI to LLMs, runs on shared understanding instead of duplicated logic.

Future-Proofed via Modularity

DDP doesn’t bet on one paradigm. It’s built to digest many. Data mesh, agentic systems, LLMs, reverse ETL, you name it. Teams can evolve fast without replatforming. Paradigms may change, but the foundation doesn’t have to.

Intelligent Cloud Optimisation

The platform doesn’t care where data lives, only where it runs best. Workloads route automatically based on cost, latency, compliance, and intent. Execution is abstracted from infrastructure, so every job lands where it delivers the most value.

This enables multi-cloud arbitrage without added complexity. It lowers cost and increases leverage with providers, without losing performance or control.
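A simplified sketch of such routing: treat compliance and latency as hard constraints, then pick the cheapest target that satisfies them. The `Target` and `Workload` types, the field names, and the numbers are hypothetical, and a real scheduler would weigh many more signals, including intent.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Target:
    name: str
    region: str
    cost_per_hour: float
    latency_ms: float

@dataclass(frozen=True)
class Workload:
    allowed_regions: frozenset[str]  # compliance constraint
    max_latency_ms: float            # latency constraint

def route(workload: Workload, targets: list[Target]) -> Target:
    """Filter by hard constraints first, then minimise cost."""
    eligible = [
        t for t in targets
        if t.region in workload.allowed_regions
        and t.latency_ms <= workload.max_latency_ms
    ]
    if not eligible:
        raise LookupError("no target satisfies the workload's constraints")
    return min(eligible, key=lambda t: t.cost_per_hour)

# Illustrative targets only; names and prices are made up.
targets = [
    Target("cloud-a-eu", "eu", cost_per_hour=4.0, latency_ms=40),
    Target("cloud-b-eu", "eu", cost_per_hour=3.0, latency_ms=120),
    Target("cloud-c-us", "us", cost_per_hour=2.0, latency_ms=30),
]

choice = route(Workload(frozenset({"eu"}), max_latency_ms=100), targets)
print(choice.name)  # cloud-a-eu
```

Here the cheapest target overall is excluded for compliance and the cheaper EU target for latency, which is the trade-off the paragraph above describes.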

One Surface, Many Interfaces

APIs, notebooks, natural language, agents: all consuming from the same model architecture. Everything that’s exposed is governed, observable, and reusable. This is the monetisable interface, where data becomes product and products become platform.

Compound Ecosystem Effects

Because semantics are standardised, models are portable. Because models are portable, products are composable. And because everything is composable, each new product makes the next one faster to build, creating a compounding effect.

Learn more about Data Developer Platforms here ↗️

Author Connect

Find me on LinkedIn


I gotta say, a comprehensive view, and I will re-review this any number of times.