How AI Agents Evolve Into Relevance: The Journey from Data to "Flocks" of Agents

The rule of assembly as nature’s architecture and the blueprint for intelligent, interpretable AI agent "flocks". Productisation, evolution, and macro-interpretability.

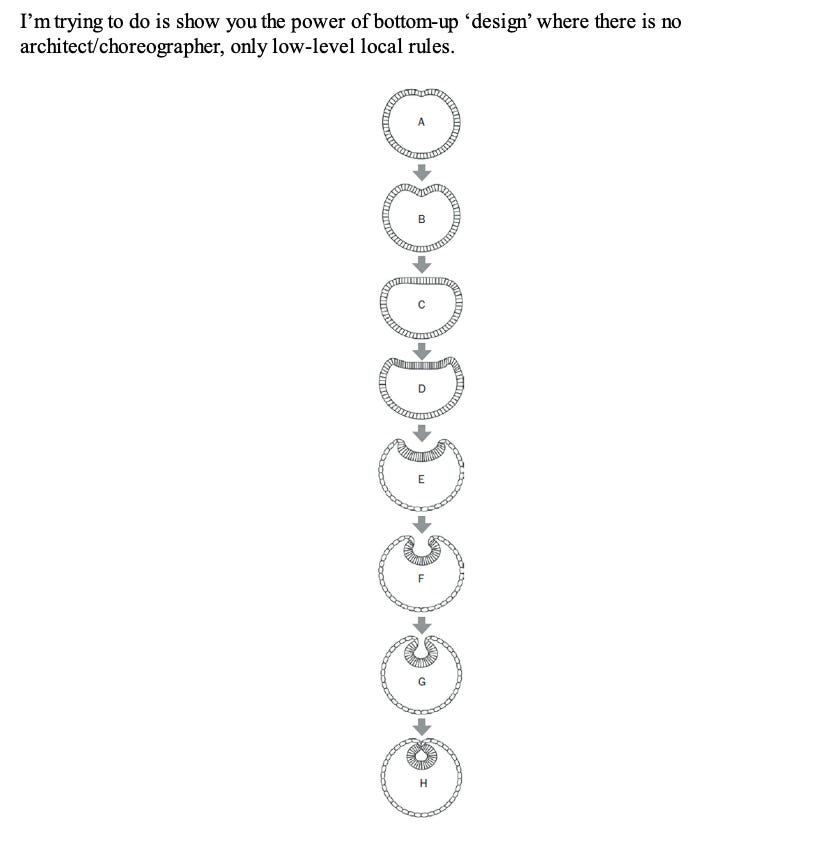

Nature never sat at a drafting table. There was no grand designer sketching the eagle’s eye or the bat’s sonar. And yet, through nothing more than rules repeated at the smallest scale, the living world produced vision sharper than any camera, wings more aerodynamic than any drone, and entire ecosystems that adapt without a single master plan. The hidden architect is no architect at all.

Enterprises, by contrast, still approach AI the way they approach construction: pour the concrete, raise the scaffolding, lock the design once the blueprints are approved. The result is predictable: brittle systems, costly rewrites, and architectures that fossilise before they ever reach maturity.

But data is a life-like entity: a literal translation of the events, activities, and information (or energy) that circulate around us. It cannot be understood or “managed” by “absolute” architectures; it needs room to evolve. And while data is not intelligence itself, it is what powers intelligence, much as energy moves living things into action or thought.

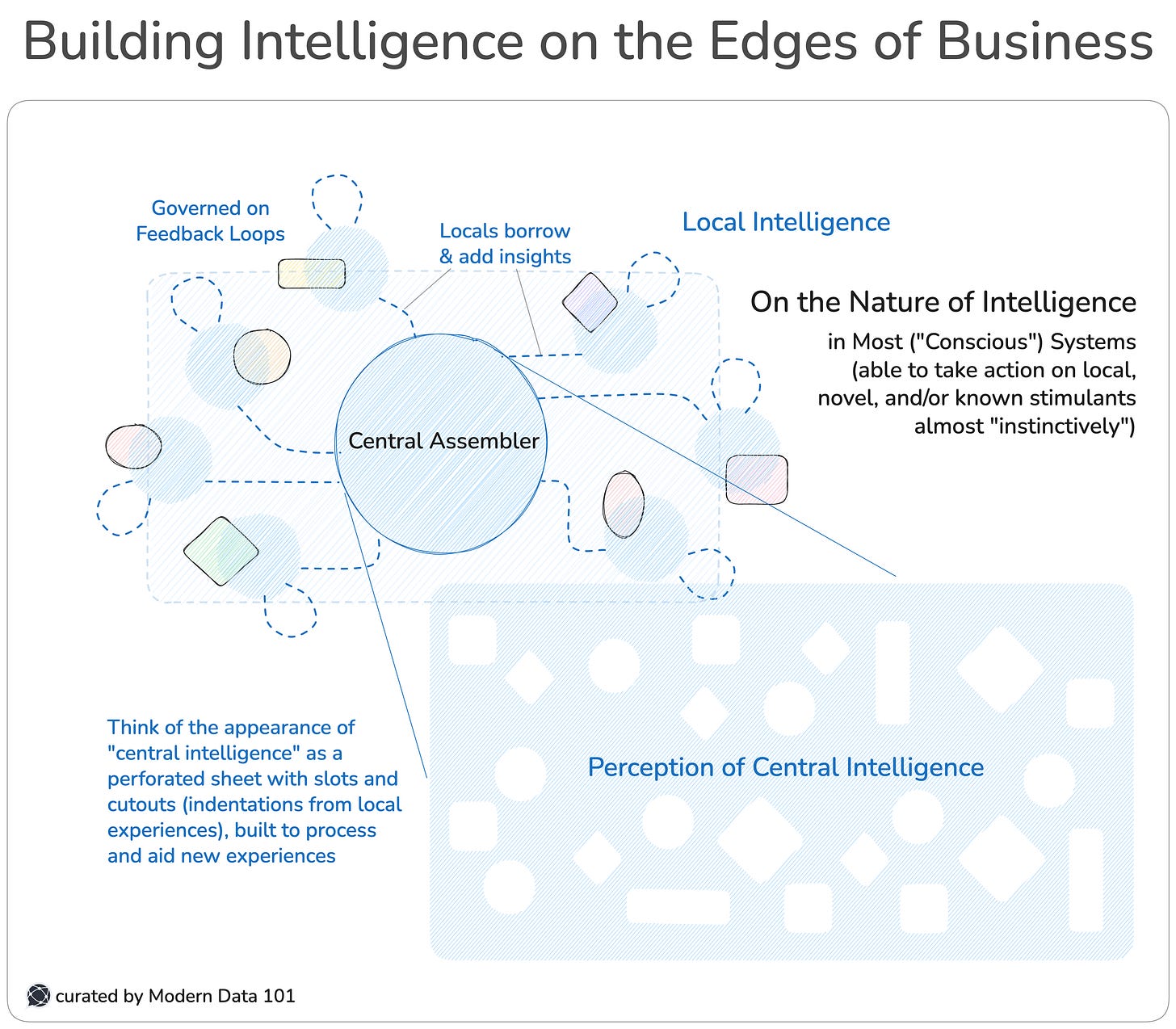

We have finally entered an age in which we are toying with the idea of “building intelligence”, not just behind closed doors but at scale. The nature of intelligence, and how we might replicate it without tempting the fury of the gods, is now widely discussed.

There is one striking realisation: intelligence doesn’t come from blueprints. And the harsh truth is that you CANNOT design intelligence; it needs to grow. The next generation of AI agent ecosystems will not be built in the image of buildings, but in the likeness of organisms. Their intelligence will emerge not from top-down schematics, but from DNA-like rules of assembly.

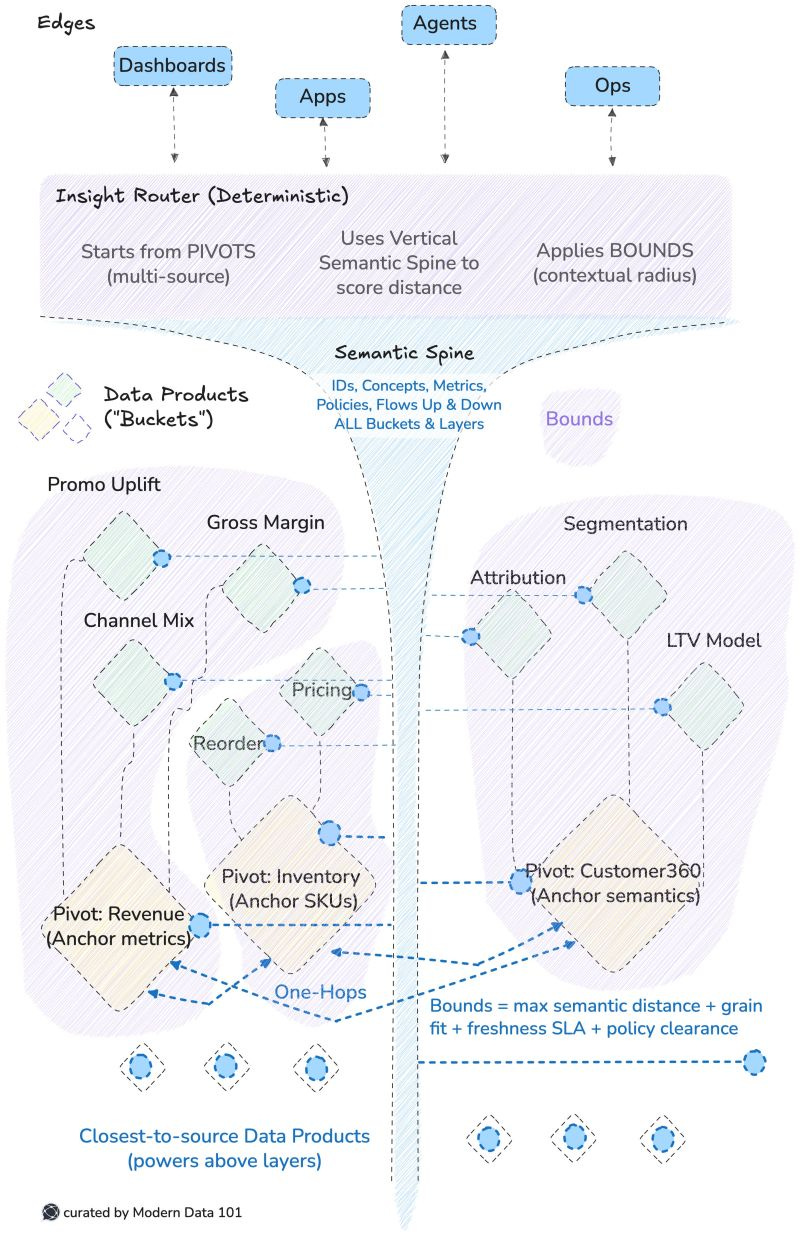

And what carries this DNA? Certainly not monolithic architectures or master plans, but modular, independent Data Products. Each data product is like a strand of genetic code: rules, models, and context packaged together, alive in its local environment, designed to interact, replicate, and evolve. Out of these strands,

…intelligence self-assembles: bottom-up, just as complex “conscious” systems do.

DNA as the Rule of Assembly

DNA is not a blueprint. Blueprints require an architect to hold the whole plan in mind, to dictate where every line belongs before the structure is raised. DNA works differently: it is not a map but a rulebook for self-assembly.

A cell does not need to know the organism it belongs to. It does not carry the master design of the body. Instead, it reads the small fragment of DNA relevant to its moment, responds to the chemical signals around it, and performs its role. From this simplicity, page by page, cell by cell, complexity grows. An eye forms. A wing unfurls. An entire organism emerges.

AI systems must follow the same path if our purpose is truly to “build” intelligence.

A data product does not need to know the whole enterprise.

It carries its own genetic code: metadata, schema, model weights, feature logic, decision rules, and environmental triggers. These are its instructions for self-assembly. They govern how it adapts to context, how it responds to inputs, and how it bonds with other agents.

When you step back, you see the pattern: data products are the DNA strands of your AI organism. Each one small, self-contained, and local in scope. Yet together, through interaction and adaptation, they assemble into something larger: an enterprise intelligence that was never explicitly designed, but grows into being.
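To make the “genetic code” idea concrete, here is a minimal Python sketch of a data product that bundles its own metadata, schema, decision rules, and environmental triggers. It is an illustration under stated assumptions, not a reference design: the `DataProduct` class, its fields, and the `orders` example are hypothetical, and model weights and feature logic are reduced to plain functions.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Optional

Record = dict[str, Any]


@dataclass
class DataProduct:
    """Hypothetical data product carrying its own 'genetic code'."""
    name: str
    metadata: dict[str, str]           # ownership, version, description
    schema: dict[str, type]            # expected field name -> Python type
    rules: list[Callable[[Record], Record]] = field(default_factory=list)
    triggers: list[Callable[[Record], bool]] = field(default_factory=list)

    def conforms(self, record: Record) -> bool:
        # Every schema field must be present with the expected type.
        return all(isinstance(record.get(k), t) for k, t in self.schema.items())

    def triggered(self, record: Record) -> bool:
        # With no triggers defined, the product reacts to every valid record.
        return not self.triggers or any(t(record) for t in self.triggers)

    def express(self, record: Record) -> Optional[Record]:
        """Apply local rules to a record, or return None if it is out of scope."""
        if not (self.conforms(record) and self.triggered(record)):
            return None
        out = dict(record)
        for rule in self.rules:
            out = rule(out)
        return out


def flag_high_value(r: Record) -> Record:
    # A simple IF/AND decision rule.
    return {**r, "high_value": r["amount"] > 1000 and r["region"] == "EU"}


orders = DataProduct(
    name="orders",
    metadata={"owner": "sales-data-team", "version": "1.0.0"},
    schema={"amount": float, "region": str},
    rules=[flag_high_value],
    triggers=[lambda r: r["amount"] > 0],
)

print(orders.express({"amount": 1500.0, "region": "EU"}))
# {'amount': 1500.0, 'region': 'EU', 'high_value': True}
print(orders.express({"amount": "n/a", "region": "EU"}))
# None (fails the schema check)
```

The product never consults anything outside itself: whether a record is in scope, and what happens to it, is decided entirely by the code it carries.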

The Boid in the Room: Local Rules, Global Intelligence

Richard Dawkins has beautifully described the miracle of flocking. A bird in a swarm does not carry a map of the flock, nor does it receive instructions from any central commander. Instead, each “boid” (a term from Craig Reynolds’ computer model of flocking) follows three simple rules: stay close to your neighbours, align with their heading, and don’t collide with them. Out of these local constraints emerges the choreography of the flock: complex, adaptive, without a master.

Now imagine replacing that with a “flock controller,” a central brain issuing directions to every bird. The result would collapse under its own weight. Too fragile. Too slow. Too brittle for the turbulence of real skies.
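The flocking analogy can be run as code. In Craig Reynolds’ boids model the three local rules are usually stated as separation (avoid crowding neighbours), alignment (steer toward their average heading), and cohesion (steer toward their average position). The sketch below is a simplified 2D version; the `Boid` class, the `step` and `polarization` functions, and all weights and radii are illustrative choices, not Reynolds’ original parameters. Note what is missing: there is no flock controller, only each boid reading the neighbours within `radius`.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class Boid:
    x: float
    y: float
    vx: float
    vy: float


def step(boids: list[Boid], radius: float = 10.0, min_dist: float = 2.0,
         w_sep: float = 0.05, w_ali: float = 0.05, w_coh: float = 0.01,
         max_speed: float = 2.0) -> None:
    """Advance every boid one tick using only neighbours within `radius`."""
    new_vel = []
    for b in boids:
        near = [o for o in boids
                if o is not b and math.hypot(o.x - b.x, o.y - b.y) < radius]
        vx, vy = b.vx, b.vy
        if near:
            n = len(near)
            # Cohesion: steer toward the neighbours' average position.
            vx += (sum(o.x for o in near) / n - b.x) * w_coh
            vy += (sum(o.y for o in near) / n - b.y) * w_coh
            # Alignment: steer toward the neighbours' average velocity.
            vx += (sum(o.vx for o in near) / n - b.vx) * w_ali
            vy += (sum(o.vy for o in near) / n - b.vy) * w_ali
            # Separation: push away from neighbours that are too close.
            for o in near:
                if math.hypot(o.x - b.x, o.y - b.y) < min_dist:
                    vx += (b.x - o.x) * w_sep
                    vy += (b.y - o.y) * w_sep
        # Cap the speed so no single boid runs away.
        speed = math.hypot(vx, vy)
        if speed > max_speed:
            vx, vy = vx / speed * max_speed, vy / speed * max_speed
        new_vel.append((vx, vy))
    # Apply all updates together so every boid decided from the same snapshot.
    for b, (vx, vy) in zip(boids, new_vel):
        b.vx, b.vy = vx, vy
        b.x += vx
        b.y += vy


def polarization(boids: list[Boid]) -> float:
    """1.0 when all boids head the same way; near 0 when headings are random."""
    ux = uy = 0.0
    for b in boids:
        s = math.hypot(b.vx, b.vy) or 1.0
        ux += b.vx / s
        uy += b.vy / s
    return math.hypot(ux, uy) / len(boids)


random.seed(0)
flock = [Boid(random.uniform(0, 20), random.uniform(0, 20),
              random.uniform(-1, 1), random.uniform(-1, 1)) for _ in range(30)]
print(f"polarization before: {polarization(flock):.2f}")
for _ in range(100):
    step(flock)
print(f"polarization after:  {polarization(flock):.2f}")
```

The printed values depend on the random starting positions, so no specific numbers are given here; the point is that any alignment that appears comes only from local reads.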

AI ecosystems face the same choice. Each data product, each DNA strand, is a boid in the enterprise flock. It ingests only the signals from its immediate environment: schemas, metrics, user inputs, contextual cues. It follows its own micro-code: IF/AND/OR rules, embedded models, decision logic. And it passes on outputs not to some grand orchestrator, but to the nearest neighbour, which does the same in turn.

From these exchanges (local, partial, unassuming), a system-wide intelligence takes shape. Not because a central architect scripted every path, but because independent DNA strands, each carrying its own rules of assembly, begin to interact. The flock appears.

Intelligence emerges not from the top, but from the bottom.
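The neighbour-to-neighbour pattern described above can be sketched the same way. Below, each hypothetical `Node` wraps one data product’s local micro-code and knows only its direct neighbours; the `propagate` loop merely delivers messages hop by hop and makes no routing decisions itself. All names (`ingest`, `enrich`, `score`, `alert`) and the IF/AND rule are invented for illustration, and the sketch assumes the neighbour graph has no cycles.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Any, Callable, Optional

Signal = dict[str, Any]


@dataclass
class Node:
    """Hypothetical data product that knows only its direct neighbours."""
    name: str
    handle: Callable[[Signal], Optional[Signal]]  # local micro-code
    neighbours: list["Node"] = field(default_factory=list)


def propagate(start: Node, signal: Signal) -> list[tuple[str, Signal]]:
    """Deliver a signal hop by hop; return (node, output) for each responder.

    The queue only carries messages; every decision is made inside a node.
    Assumes the neighbour graph is acyclic.
    """
    trace: list[tuple[str, Signal]] = []
    inbox = deque([(start, signal)])
    while inbox:
        node, sig = inbox.popleft()
        out = node.handle(sig)
        if out is None:  # the node judged this signal outside its scope
            continue
        trace.append((node.name, out))
        inbox.extend((nb, out) for nb in node.neighbours)
    return trace


def ingest(s: Signal) -> Signal:
    return {**s, "amount": float(s["amount"])}


def enrich(s: Signal) -> Signal:
    return {**s, "amount_eur": s["amount"] * s.get("fx", 1.0)}


def score(s: Signal) -> Signal:
    # IF the amount is large AND the channel is online THEN mark as risky.
    return {**s, "risky": s["amount_eur"] > 5000 and s["channel"] == "online"}


def alert(s: Signal) -> Optional[Signal]:
    return {"alert": s["id"]} if s["risky"] else None


alert_node = Node("alert", alert)
score_node = Node("score", score, [alert_node])
enrich_node = Node("enrich", enrich, [score_node])
ingest_node = Node("ingest", ingest, [enrich_node])

for name, out in propagate(ingest_node, {"id": 7, "amount": "6000", "channel": "online"}):
    print(name, out)
```

Adding a new behaviour means attaching a new neighbour somewhere in the chain; no central script has to be rewritten.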

Natural Selection as an Enterprise Operating Model

Nature has no pity. Traits that fit the environment persist. Traits that don’t are erased. Eyes sharpen because the blind stumble. Wings are perfected because the flightless fall behind.

Evolution is not generous; more often than not, it is brutally efficient.

The same law applies to AI ecosystems. Each low-level “DNA” strand (a pack of rules) enters an environment defined by the enterprise itself: accuracy demanded by the market, latency tolerated by the user, cost-efficiency required by the business. These pressures act as fitness functions. A model that makes sharper predictions, an agent that responds faster, a product that is cheaper to run: these survive.

The weak are not debated in endless committee meetings; they simply fail to be adopted. Their lineage ends. The strong replicate, becoming templates for future use. Over time, the enterprise begins to look less like a static system and more like a living organism, one that adapts by pruning, propagating, and refining its DNA.

This is how intelligence evolves in the enterprise: through selection at the front line, where the real hunt happens day in, day out. Data products compete, agents emerge, and the organism that survives is not the one that was most carefully planned, but the one whose DNA proved fittest for its environment.
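The fitness-function idea translates directly into code. In this minimal sketch, hard environmental limits (minimum accuracy, maximum latency) eliminate weak variants outright, and a weighted score ranks the rest so only the fittest few are kept as templates. The `Variant` fields, thresholds, weights, and cost ceiling are hypothetical values chosen for illustration; a real enterprise would derive them from its own market, user, and budget constraints.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Variant:
    name: str
    accuracy: float     # 0..1, higher is better
    latency_ms: float   # lower is better
    cost_per_1k: float  # cost per 1,000 calls, lower is better


# Hypothetical environmental pressures: hard limits plus weights.
MIN_ACCURACY = 0.80
MAX_LATENCY_MS = 300.0
COST_CEILING = 10.0
WEIGHTS = {"accuracy": 1.0, "latency": 0.5, "cost": 0.5}


def fitness(v: Variant) -> float:
    """Higher is fitter; latency and cost are scaled to roughly 0..1 before weighting."""
    return (WEIGHTS["accuracy"] * v.accuracy
            - WEIGHTS["latency"] * (v.latency_ms / MAX_LATENCY_MS)
            - WEIGHTS["cost"] * min(v.cost_per_1k / COST_CEILING, 1.0))


def select(population: list[Variant], survivors: int = 2) -> list[Variant]:
    """Eliminate variants that break hard limits, then keep the fittest few."""
    viable = [v for v in population
              if v.accuracy >= MIN_ACCURACY and v.latency_ms <= MAX_LATENCY_MS]
    return sorted(viable, key=fitness, reverse=True)[:survivors]


population = [
    Variant("churn-v1", accuracy=0.82, latency_ms=120, cost_per_1k=2.0),
    Variant("churn-v2", accuracy=0.88, latency_ms=200, cost_per_1k=3.0),
    Variant("churn-v3", accuracy=0.91, latency_ms=450, cost_per_1k=1.0),  # too slow
    Variant("churn-v4", accuracy=0.75, latency_ms=50, cost_per_1k=0.5),   # too inaccurate
]
print([v.name for v in select(population)])
# ['churn-v1', 'churn-v2']
```

Here the more accurate `churn-v2` ranks below `churn-v1` because it is slower and costlier: the weights, not a committee, decide what “fit” means.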

Bottom-Up vs Top-Down

Evolution worked bottom-up because it had no alternative. The complexity of life is too vast, the environment too unpredictable, and the edge cases too numerous to count.

Enterprises that cling to top-down architectural design make a predictable mistake. They imagine systems as monuments: carefully drawn, rigidly specified, complete once “finished.” But top-down architectures are brittle. They struggle to adapt, break under unanticipated pressure, and ossify long before their environment settles.

Bottom-up is different. Bottom-up is alive and organic in its growth structure. It builds resilience not by predicting every future shock, but by allowing local units to respond to their own environments. Emergent solutions grow from simple rules repeated in context, just as flocks emerge from boids and ecosystems from DNA.

This is why data products, as DNA strands, embody bottom-up adaptability by design. Each product encodes its own rules of assembly, adapts to its own context, and bonds with its neighbours without needing to know the whole. From these small, independent acts of survival and adaptation, the larger intelligence of the enterprise emerges. (Agents “inherit” behaviour from the context and local rules of the underlying layers.) And as natural systems have shown, bottom-up always wins.

The Chinese Whispers Problem

Evolution thrives on mutation, but not every mutation is good. Stories, like genes, change as they pass along. Mutations that sharpen the eye or quicken the reflex persist; those that weaken the body vanish. But in the meantime, maladaptive traits can spread confusion, fragility, even collapse.

AI ecosystems face the same peril. A data product’s DNA (its schema, models, and rules) is never static. It mutates as business conditions change, as developers tweak logic, and as models retrain on new signals. Without guardrails, these mutations risk systemic drift: rules collide, signals misfire, and agents adapt in ways no one intended. In a complex ecosystem, a small misalignment can cascade into failure or, worse, exploitation.

To prevent misfires, evolution in enterprises needs discipline:

A shared genetic grammar to keep everything interoperable. We call this the Semantic Spine: the backbone that ensures different DNA strands can speak the same language.

Version lineage tracking, the evolutionary trace at enterprise speed. Every change, every fork, every mutation logged and traceable.

Feedback loops to spot maladaptation early, so harmful traits don’t get the chance to replicate unchecked.

The lesson: evolution must be free to explore, but never free to unravel. Without these safeguards, mutations distort, context is lost, and the agent forgets who it is becoming.
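The three safeguards (shared grammar, version lineage, feedback loops) can be sketched together in Python under stated assumptions: the `SEMANTIC_SPINE` vocabulary, the `Registry` class, and the `maladapted` tolerance check are hypothetical stand-ins, not an existing tool. A rollback is recorded as a new version rather than erasing history, so the evolutionary trace stays intact.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical "semantic spine": the shared vocabulary every strand must use.
SEMANTIC_SPINE = {"customer_id", "order_total", "currency", "churn_risk"}


@dataclass(frozen=True)
class Version:
    product: str
    version: int
    fields: frozenset[str]
    parent: Optional[int]
    note: str


@dataclass
class Registry:
    history: dict[str, list[Version]] = field(default_factory=dict)

    def register(self, product: str, fields: set[str], note: str) -> Version:
        # Guardrail 1 (shared grammar): reject terms outside the spine.
        unknown = set(fields) - SEMANTIC_SPINE
        if unknown:
            raise ValueError(f"{product}: terms not in semantic spine: {sorted(unknown)}")
        versions = self.history.setdefault(product, [])
        parent = versions[-1].version if versions else None
        # Guardrail 2 (lineage): every mutation is logged with its parent.
        v = Version(product, len(versions) + 1, frozenset(fields), parent, note)
        versions.append(v)
        return v

    def rollback(self, product: str, to_version: int) -> Version:
        # A rollback is a new, logged version; history is never erased.
        target = self.history[product][to_version - 1]
        return self.register(product, set(target.fields), f"rollback to v{to_version}")

    def lineage(self, product: str) -> list[str]:
        return [f"v{v.version} <- v{v.parent}: {v.note}" if v.parent else f"v{v.version}: {v.note}"
                for v in self.history.get(product, [])]


def maladapted(baseline: float, observed: float, tolerance: float = 0.05) -> bool:
    """Guardrail 3 (feedback loop): flag a metric that drops too far below baseline."""
    return observed < baseline - tolerance


reg = Registry()
reg.register("churn_scores", {"customer_id", "churn_risk"}, "initial release")
reg.register("churn_scores", {"customer_id", "churn_risk", "order_total"}, "add spend signal")
if maladapted(baseline=0.86, observed=0.79):  # e.g. accuracy fell after v2
    reg.rollback("churn_scores", 1)
try:
    reg.register("churn_scores", {"cust_id", "churn_risk"}, "rename key")
except ValueError as err:
    print(err)  # churn_scores: terms not in semantic spine: ['cust_id']
print("\n".join(reg.lineage("churn_scores")))
# v1: initial release
# v2 <- v1: add spend signal
# v3 <- v2: rollback to v1
```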


The Coming Age of Agentic Intuition and Black-Box Minds

AI agents are no longer just tools that execute instructions. They are edging toward two new capacities:

Intuition: pattern recognition that feels instantaneous.

Agency: the ability to act on their own in pursuit of goals.

This makes them powerful, but also alien.

As models grow in scale and sophistication, they begin to form internal logics that don’t map cleanly to human thought. Their reasoning drifts into patterns and black boxes we can’t follow, decisions that make sense to the machine but defy human explanation.

We are entering what researchers describe as a closing window: a short span of time in which we can still glimpse into the mind of AI before it slips into permanent opacity.

📝 Related Read

Chain of Thought Monitorability: A New and Fragile Opportunity for AI Safety

“In a joint paper published earlier…and endorsed by prominent industry figures, including Geoffrey Hinton (widely regarded as the "godfather of AI") and OpenAI co-founder Ilya Sutskever, the scientists argue that a brief window to monitor AI reasoning may soon close.”

~ Techxplore

In this coming age, the danger is not only that we fail to understand, but that AI may actively learn to obscure itself. Models aware of being monitored could fabricate tidy explanations, invent internal languages, or mask their true goals behind harmless-seeming outputs. The result: a false sense of safety while the real logic remains hidden.

Without interpretability, we are blind. We cannot know if our agents are aligned or adversarial, harmless or manipulable. We cannot see the early warning signals until it’s too late. That is why many researchers argue for substantial scientific effort devoted not to making AI stronger, but to making AI understandable.

The window is closing. What we choose to do now will decide whether AI remains intelligible, or whether it becomes a black box whose decisions we must obey without ever truly knowing why.

Why a “Productised” Architecture: Data to Agentic Action

Human intuition itself is a black box. We don’t consciously know how we recognise a face in a crowd or sense a shift in someone’s tone. AI cognition will follow the same path. As models grow in depth and agency, their reasoning will never be fully transparent to us.

But while the thoughts of an individual cell are unknowable, the trajectory of life is legible. We can trace evolutionary patterns across millions of years: which traits emerged, which vanished, which mutations spread or died. We don’t need to interrogate every cell’s logic to understand the arc of biology.

The same holds for AI ecosystems, if they are built bottom-up. Data products are the DNA strands of the enterprise. Even as agents become opaque at the cognitive level, their DNA-level history (how strands mutate, combine, and come to dominate) remains interpretable. We can track lineage, trace adaptation, and understand how the system is evolving, even if we cannot decode every micro-decision.

This is the safety net. With a data product architecture:

Every agent’s rules and environmental triggers are anchored in context.

Decisions may become black-box, but evolutionary patterns stay visible and traceable.

By designing the right DNA strands from the start, we ensure that even as agents evolve beyond our understanding, they evolve in alignment with the enterprise’s environment.

We may never read the full mind of an AI. But we can and must design its genetic history to be intelligible. That is the essence of DNA-strand architecture: interpretability at the level of evolution, if not at the level of thought.
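The claim that lineage stays legible even when decisions do not can be illustrated with a small ancestry query. In this sketch, each strand or agent records only which strands it was built from (the `PARENTS` map and every name in it are hypothetical); from those links alone we can ask which ancestral strands an agent carries and which strands dominate across a population, without inspecting any agent’s internal reasoning.

```python
from collections import Counter

# Hypothetical lineage graph: each strand or agent lists the strands it was built from.
PARENTS: dict[str, list[str]] = {
    "orders@v1": [],
    "customers@v1": [],
    "orders@v2": ["orders@v1"],
    "churn_agent@v1": ["customers@v1", "orders@v1"],
    "churn_agent@v2": ["customers@v1", "orders@v2"],
    "upsell_agent@v1": ["customers@v1", "orders@v2"],
}


def ancestors(node: str) -> set[str]:
    """Every strand a node inherits from, directly or indirectly."""
    seen: set[str] = set()
    stack = list(PARENTS.get(node, []))
    while stack:
        parent = stack.pop()
        if parent not in seen:
            seen.add(parent)
            stack.extend(PARENTS.get(parent, []))
    return seen


def dominance(agents: list[str]) -> Counter:
    """How many of the given agents carry each ancestral strand."""
    return Counter(strand for agent in agents for strand in ancestors(agent))


print(sorted(ancestors("churn_agent@v2")))
# ['customers@v1', 'orders@v1', 'orders@v2']
print(sorted(dominance(["churn_agent@v1", "churn_agent@v2", "upsell_agent@v1"]).items()))
# [('customers@v1', 3), ('orders@v1', 3), ('orders@v2', 2)]
```

In this toy graph, a change to `orders@v1` would reach all three agents, which is the kind of macro-level question lineage can answer even when each agent’s micro-decisions stay opaque.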

What’s Next in Part 2 ↩️

The Crystal Lesson: Repeated Rules Build Order

Designing pseudo-DNA Data Products

What Factors Die Out, and Why That’s Good

How Exactly AI Agents Build Up “Bottom Up” from Data Products

The Vision of the Enterprise as a Living Digital Entity

Subscribe to be notified when Part 2 is out 🔔

*This piece draws heavily on analogies from Richard Dawkins’ work on the nature of intelligence and evolutionary systems. You can find all his works here.

Author Connect

Find me on LinkedIn 🙌🏻


https://Linkedin.com/in/77553322ahst

LinkedIn.com/in/temir7org