The Art of Discoverability and Reverse Engineering User Happiness

Core Challenges, Range of Discoverability Solutions, User Motivations, Metadata Fundamentals, and Infrastructures that Back This!

TOC

The Problem with Discoverability

Challenges with present-day Metadata Platforms/Technologies

The Solution: Consolidation at a Global Level

What is a Global Metadata Model

Impact of this Model on Data Teams

Impact of this Model on Business

How is Data Discoverability Realized?

What makes a Good Metadata Architecture

Where should the Metadata Engine sit in the Data Stack

What it means to build for the User: Discoverability-as-a-Feature

The Philosophy of UX

What does good UX imply in terms of Discoverability

Discoverability Solutions

Diving right into a Discoverability requirement

“As a data steward, I want to identify all the datasets where the past three quality runs have failed consecutively in a month. These datasets were derived from CRM and third-party data from a specific source system. Also, the datasets of interest should have impacted golden-tier downstream datasets in the past two weeks, eventually affecting production ML models and dashboards used for fraud detection.”

In the current data ecosystem, the above requirement is practically impossible to satisfy.

The Problem

The data ecosystem has flourished into a giant system with countless sources, pipelines, tools, and users. A few decades ago, almost no organization was thinking in a data-first fashion, which is why their architectures never supported a data-first ideology.


These architectures became a heap of complexities and technical debt as data functions matured and expanded over the years, making it impossible to solve the following problems:

How do you find a suitable dataset to derive specific business insights?

Which is the correct data version? Do you know if the latest one is the right one?

How do you source data from across the data ecosystem without any source-dependent constraints?

Is the data reliable and fresh enough for use?

The above list is not exhaustive, and due to such ambiguity, data practitioners are bogged down with inaccurate data channelled to business applications, heavily impacting customer and user experience. The lack of data discoverability directly impacts the organization's bottom line.

Problem with Metadata Platforms

And how they are fuelling, rather than solving, the Data Discoverability problem.

A Big Data Problem?

To supply valuable information on data, extremely low-level details such as column or row versions must be captured, which could mean metadata as extensive as the original data itself. This is a challenge for platforms to organize and operationalize.

A Small Data Problem?

Another very practical use of data is to serve small corners of domains and use cases. Original source data gets split, duplicated, or exported for ad-hoc analytics much more frequently than estimated (and Murphy’s law is always at play here: everything that can go wrong does go wrong). These minuscule trajectories in the data stream are where some of the most important customer-facing findings and data-related interactions take place, and they are entirely missed by broad catalogs or discoverability solutions.

Stale Metadata

Data is highly dynamic, so the metadata platform must keep up with constant evolution and maintain fresh metadata to prevent discrepancies and gaps between raw data and business insights.
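To make the freshness requirement concrete, here is a minimal Python sketch of a staleness check. It assumes, hypothetically, that the platform records when each asset's data last changed and when its metadata was last synced; the `AssetState` fields and the one-hour tolerance are illustrative, not part of any specific product.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class AssetState:
    # Hypothetical fields: when the underlying data last changed,
    # and when the metadata platform last captured that change.
    name: str
    data_modified_at: datetime
    metadata_synced_at: datetime


def stale_assets(assets, tolerance=timedelta(hours=1)):
    """Return names of assets whose metadata lags their data by more than `tolerance`."""
    return [
        a.name
        for a in assets
        if a.data_modified_at - a.metadata_synced_at > tolerance
    ]


now = datetime(2024, 1, 1, 12, 0)
assets = [
    AssetState("orders", now, now - timedelta(minutes=10)),
    AssetState("customers", now, now - timedelta(hours=6)),
]
print(stale_assets(assets))  # ['customers']
```

In practice the tolerance would likely differ per asset tier, since a gold dataset feeding dashboards warrants a tighter bound than an ad-hoc export.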

Overlooked Analytics

Even though analytics or business insights are the end goal of the Data Discoverability problem, most metadata solutions do not serve analytics APIs, which is why data catalogs can barely answer complex queries. If the suitable dataset is not identified for analysis, the result is weeks to months of wasted analytics and downstream effort.

No Metadata Modeling

Any organization that isn't data-first suffers from unorganized data that pours in from multiple sources in the Modern Data Stack (MDS). Without logical data modelling, metadata is chaotic and meaningless.

Metadata platforms operating in the Modern Data Stack space tend to face discoverability issues within the metadata itself due to vastness and lack of cohesiveness. Enabling data discoverability with disorganized metadata is, therefore, a far-fetched idea.

The Solution

To solve complex queries like discovering a dataset with filters spanning various source systems and users over a period, a system must log metadata throughout the entire data journey. The system should typically collect and interweave metadata contextually from an integration plane, lineage and historical logs, user data, application logs, and many more components for the query.

Solving the above problem and establishing context requires a remarkably cohesive approach and is a mammoth task, given that metadata itself tends to be a big data problem. To achieve data as an experience, the organization should strive to connect every data point it owns to every business and data function without any friction from the data infrastructure.

🗄️ This is where Metadata Modeling comes into play.

A functional metadata model is achievable by eradicating the chaotic layer of countless point solutions and introducing a common platform that abstracts and composes significant data ecosystem components into a unified structure. This unified platform should be able to source and ingest data from various external and internal systems to overcome the disparate nature of data sources. Tying these components and data sources together is what enables a centralized metadata plane.

Once the metadata plane is available, a logical layer must sit on top to share context around both data and metadata. The logical or semantic layer enables a free flow of information and context from the business side to the physical data side that IT teams manage with minimal business know-how. The semantic layer offers direct access to business teams to define entities, tags, relationships, stakeholders, and policies.

Context such as relationships, users, access policies, etc., directly from the business side (through the semantic layer) combined with inherent metadata fields from the composable data layer such as time of creation, updates, size, location, etc. makes way for the design of a logical metadata model, or what we call the Knowledge Graph.
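One way to picture the Knowledge Graph is as a set of subject-predicate-object facts, where business context and technical metadata end up attached to the same asset. The sketch below is a minimal illustration, assuming business context arrives from the semantic layer as triples and technical metadata arrives as per-asset field maps; all asset names, predicates, and values are hypothetical.

```python
from collections import defaultdict

# Hypothetical technical metadata harvested from the composable data layer.
technical_metadata = {
    "crm.accounts": {"created_at": "2023-05-01", "size_gb": 12, "location": "lake/crm/accounts"},
}

# Hypothetical business context authored through the semantic layer.
business_context = [
    ("crm.accounts", "describes_entity", "Customer"),
    ("crm.accounts", "owned_by", "sales-ops"),
    ("crm.accounts", "governed_by", "pii-policy"),
]


def build_knowledge_graph(technical, business):
    """Merge both sources into one adjacency map: subject -> list of (predicate, object)."""
    graph = defaultdict(list)
    for asset, fields in technical.items():
        for key, value in fields.items():
            graph[asset].append((key, value))
    for subject, predicate, obj in business:
        graph[subject].append((predicate, obj))
    return graph


def objects(graph, subject, predicate):
    """Look up every object linked to `subject` through `predicate`."""
    return [o for p, o in graph.get(subject, []) if p == predicate]


kg = build_knowledge_graph(technical_metadata, business_context)
print(objects(kg, "crm.accounts", "owned_by"))  # ['sales-ops']
```

The point of the merge is that a single lookup on `crm.accounts` now returns both who owns it (business side) and how large it is (technical side).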

🔑 The Global Metadata Model is ideal for answering complex analytics queries.

What is the Global Metadata Model

A data model for metadata that allows users to explore relationships and identify top datasets relevant to their current query.

It interweaves multiple data assets, sources, services, targets, and users to enable logical connections that give meaning to the data.

It activates dormant data by connecting it to the vast network of the data ecosystem, allowing users and machines to start invoking and leveraging vast volumes of data that were previously meaningless due to missing semantics.
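To show how such a model could answer the data steward's requirement from the opening of this piece, here is a toy, in-memory Python sketch. Every field name (`quality_runs`, `impacts`, `consumers`), the `third_party:<system>` source convention, the 30- and 14-day windows, and the sample vendor `acme` are assumptions for illustration; a real metadata engine would run this over its graph and search stores rather than Python dicts.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class Dataset:
    # All field names are hypothetical, chosen to mirror the steward's requirement.
    name: str
    sources: set
    tier: str = "bronze"
    quality_runs: list = field(default_factory=list)  # (timestamp, passed) tuples
    impacts: list = field(default_factory=list)       # (downstream_name, timestamp) tuples
    consumers: set = field(default_factory=set)       # e.g. {"ml_model:fraud", "dashboard:fraud"}


def last_three_failed(ds, now):
    # The three most recent runs must all fall within the past month and all have failed.
    recent = sorted(ds.quality_runs, reverse=True)[:3]
    return len(recent) == 3 and all(
        not passed and now - ts <= timedelta(days=30) for ts, passed in recent
    )


def steward_query(catalog, now, source_system):
    hits = []
    for ds in catalog.values():
        if not last_three_failed(ds, now):
            continue
        # Must be derived from both CRM and the named third-party system.
        if not {"crm", f"third_party:{source_system}"} <= ds.sources:
            continue
        # Must have hit a gold-tier dataset feeding fraud ML models and dashboards in the last 14 days.
        for downstream, ts in ds.impacts:
            target = catalog.get(downstream)
            if (
                target is not None
                and target.tier == "gold"
                and now - ts <= timedelta(days=14)
                and {"ml_model:fraud", "dashboard:fraud"} <= target.consumers
            ):
                hits.append(ds.name)
                break
    return hits


now = datetime(2024, 6, 30)
catalog = {
    "leads_enriched": Dataset(
        "leads_enriched",
        sources={"crm", "third_party:acme"},
        quality_runs=[(now - timedelta(days=d), False) for d in (1, 5, 9)],
        impacts=[("fraud_features", now - timedelta(days=3))],
    ),
    "fraud_features": Dataset(
        "fraud_features",
        sources={"leads_enriched"},
        tier="gold",
        consumers={"ml_model:fraud", "dashboard:fraud"},
    ),
}
print(steward_query(catalog, now, "acme"))  # ['leads_enriched']
```

The query itself is short; what makes it possible is that quality runs, source provenance, impact events, and consumers all live in one connected model.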

What is the Impact of a Metadata Model on Data Teams?

Answering complex analytics questions with simple queries

Supporting both push and pull APIs

Enabling the ability to model metadata into logical entities and relationships

Easily operable APIs across CRUD operations, search, events, listings, and much more

Freedom from infrastructure or organization-specific stack dependencies

Ranking assets for relevance to intelligently manage heavy data volumes

Enabling recovery options through soft deletion, lineage, and versioning capabilities

Prioritizing alerts when critical changes are observed across a vast network of integrations, components, and users

Conducting detailed root-cause analysis to detect and fix bugs in record time
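Root-cause analysis and downstream impact analysis both reduce to walking lineage edges. A minimal sketch, assuming lineage is available as a hypothetical map from each asset to its direct downstream assets: walking it forward answers "what does this break?", and walking the inverted map answers "where did this come from?".

```python
from collections import deque


def traverse(edges, start):
    """Breadth-first walk returning every node reachable from `start` (excluding start)."""
    seen, queue = set(), deque([start])
    while queue:
        node = queue.popleft()
        for nxt in edges.get(node, ()):
            if nxt not in seen and nxt != start:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def invert(edges):
    """Flip downstream edges into upstream edges for root-cause analysis."""
    upstream = {}
    for src, dsts in edges.items():
        for dst in dsts:
            upstream.setdefault(dst, set()).add(src)
    return upstream


# Hypothetical lineage: asset -> its direct downstream assets.
downstream = {
    "raw.crm": {"stg.accounts"},
    "stg.accounts": {"gold.customer_360"},
    "gold.customer_360": {"dash.churn", "ml.fraud_model"},
}
print(sorted(traverse(downstream, "raw.crm")))
# ['dash.churn', 'gold.customer_360', 'ml.fraud_model', 'stg.accounts']
print(sorted(traverse(invert(downstream), "dash.churn")))
# ['gold.customer_360', 'raw.crm', 'stg.accounts']
```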

Additional Note

For data developers, Metadata Observability is also a crucial aspect of ensuring governed data usage. Metadata quality measures aren't very different from data quality measures: you have similar parameters, such as completeness, timeliness, or accuracy. But some parameters are specifically critical to metadata observation, such as:

✅ Granularity or Atomicity: The level of detail in the metadata provided.

For example, attribute-level metadata that describes each column in a dataset rather than just the dataset as a whole.

✅ Interoperability-as-a-measure (specifically for metadata quality assessment): The ability of metadata to be used and understood across different tools, systems, teams, or platforms.

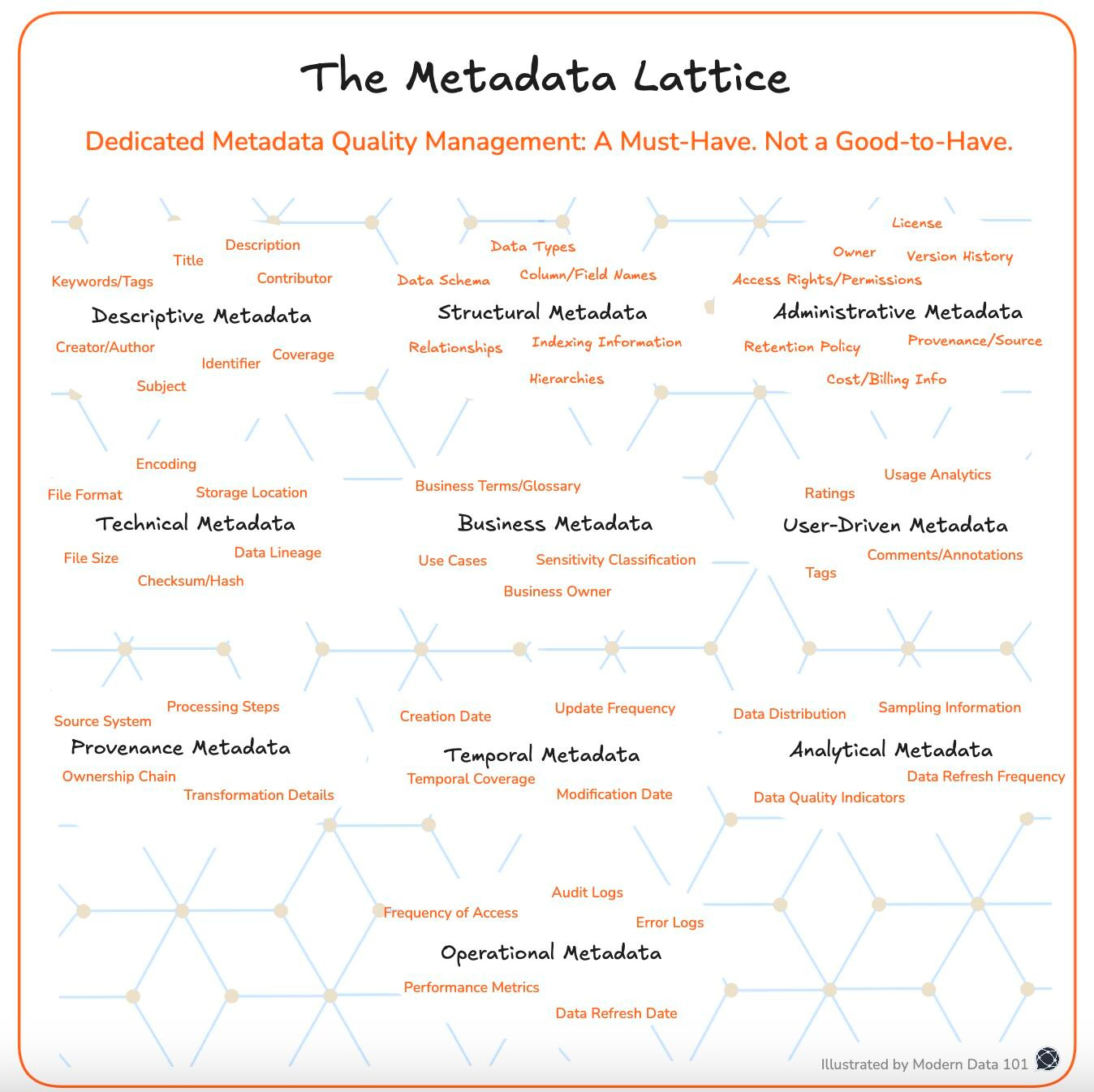

✅ Semantic Clarity: The ability of metadata to partially or fully convey the context of data. For example, a well-maintained high-value Gold Dataset would ideally have all attributes of the metadata lattice checked off, leading to high understandability of what the data and its attributes mean and how they can be used.
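Some of these measures can be scored mechanically. The sketch below assumes a hypothetical checklist of required metadata fields (`REQUIRED_FIELDS`) and treats granularity as the share of columns carrying a description; both definitions are illustrative choices, not a standard.

```python
# Hypothetical checklist of metadata fields every asset is expected to carry.
REQUIRED_FIELDS = ("owner", "description", "tags", "freshness_sla")


def completeness(meta):
    """Fraction of required metadata fields that are present and non-empty."""
    filled = sum(1 for f in REQUIRED_FIELDS if meta.get(f))
    return filled / len(REQUIRED_FIELDS)


def granularity(meta):
    """Fraction of columns that carry their own (non-empty) description."""
    cols = meta.get("columns", {})
    if not cols:
        return 0.0
    described = sum(1 for c in cols.values() if c.get("description"))
    return described / len(cols)


gold = {
    "owner": "risk-team",
    "description": "Scored transactions",
    "tags": ["gold"],
    "freshness_sla": "1h",
    "columns": {
        "txn_id": {"description": "Transaction key"},
        "score": {"description": ""},
    },
}
print(completeness(gold), granularity(gold))  # 1.0 0.5
```

A Gold Dataset that scores 1.0 on completeness but 0.5 on granularity is exactly the kind of gap these metadata-specific parameters are meant to surface.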

What is the Impact of a Metadata Model on Business?

Faster data-to-insights journey: analysts can pose complex questions as simple queries and get accurate answers in record time.

Automated improvement in data context that enables a common understanding of data across teams.

Fresh and high-quality data available to customer-facing teams due to lineage, versioning, feedback, and debugging capabilities.

Minimal data discrepancies between teams with a common metadata backbone that reflects changes across downstream and upstream linkages.

High security and governance are ensured by policies that could be automated with fingerprinting or classification of sensitive data.

Smooth data orchestration and real-time sync across multiple entities that produce, manage or consume data.
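As an illustration of automating policies through classification, here is a deliberately simple regex-based fingerprinting sketch. The patterns, the 80% match threshold, and the `pii:<label>:mask` tag format are assumptions; production classifiers are considerably more robust.

```python
import re

# Hypothetical fingerprints for two kinds of sensitive values.
PATTERNS = {
    "email": re.compile(r"^[^@\s]+@[^@\s]+\.[A-Za-z]{2,}$"),
    "us_ssn": re.compile(r"^\d{3}-\d{2}-\d{4}$"),
}


def classify_column(values, threshold=0.8):
    """Return the first label whose pattern matches at least `threshold` of non-empty sampled values."""
    values = [v for v in values if v]
    if not values:
        return None
    for label, pattern in PATTERNS.items():
        hits = sum(1 for v in values if pattern.match(v))
        if hits / len(values) >= threshold:
            return label
    return None


def policy_tags(sample):
    """Map each column classified as sensitive to a masking policy tag."""
    tags = {}
    for column, values in sample.items():
        label = classify_column(values)
        if label:
            tags[column] = f"pii:{label}:mask"
    return tags


sample = {
    "contact": ["a@example.com", "b@example.org", "c@example.net"],
    "ssn": ["123-45-6789", "987-65-4321"],
    "city": ["Pune", "Austin"],
}
print(policy_tags(sample))
# {'contact': 'pii:email:mask', 'ssn': 'pii:us_ssn:mask'}
```

Because the resulting tags live in the shared metadata model, a masking policy keyed on them can be enforced wherever the columns are consumed.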

Metadata is what adds context to the data we own and, consequently, what makes that data usable by businesses. Without context/meaning/metadata, data has no value. This is why metadata is one of the structural pillars of Data Products.

At times, metadata volume can exceed that of the actual data, simply because of the context (tags, descriptions, freshness, and so on) added to make data usable. It makes one wonder: if the path to data usage (metadata) is corrupted, isn’t data usage bound to have a negative impact on business?

Moreover, given the volume that a Metadata Lattice constitutes, we need not only to start managing metadata quality but also to approach it with smart technology that guarantees precision (leaving the fewest areas of trade-off or leniency where quality is concerned).

How is Data Discoverability Realized?

💡 ‘Good metadata architectures’ are eerily similar to ‘good data architectures’

Imagine a world where every version of a table schema is captured and stored, along with every column, every dashboard, every dataset in the lake, every query, every job run, every access history, etc. Very quickly, metadata starts to look and smell like a Big Data problem. You must also traverse a metadata graph of 10s of millions of vertices and 100s of millions of edges. Do you still think you can hold all that “measly” metadata in a MySQL or PostgreSQL database?

Your metadata can be as extensive and complex as your data, and it deserves to be treated with the same respect. An excellent metadata engine should be scalable, reliable, and extensible, and should offer rich APIs. Additionally, to activate metadata, it should be drop-dead simple to integrate new metadata sources, with complete visibility into the integration process.

Here are some non-negotiables of a good metadata architecture:

A system capable of storing and serving at the same scale.

Synchronization of metadata across data stores, from graph databases to search engines.

Strongly typed metadata models that evolve in a backward-compatible way.

Extensible APIs that bring flexibility, customizability, and longevity.

Ease of Integration
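What "backward-compatible" evolution means can be checked mechanically. A minimal sketch, assuming a hypothetical schema representation of `field -> (type, required)`: a new version passes if it removes and retypes nothing and only adds optional fields. Real schema systems apply richer rules (type promotion, defaults), so treat this as a rule of thumb.

```python
def backward_compatible(old, new):
    """Return a list of violations; an empty list means no existing field was
    removed or retyped and every newly added field is optional."""
    problems = []
    for name, (typ, _required) in old.items():
        if name not in new:
            problems.append(f"removed field '{name}'")
        elif new[name][0] != typ:
            problems.append(f"changed type of '{name}': {typ} -> {new[name][0]}")
    for name, (_typ, required) in new.items():
        if name not in old and required:
            problems.append(f"added required field '{name}'")
    return problems


# Hypothetical versions of a dataset's metadata aspect.
v1 = {"urn": ("string", True), "description": ("string", False)}
v2 = {**v1, "tags": ("array<string>", False)}
v3 = {"urn": ("int", True), "owner": ("string", True)}

print(backward_compatible(v1, v2))  # []
print(len(backward_compatible(v1, v3)))  # 3
```

Running such a check in CI before a model change is merged is one way to keep strongly typed metadata evolving without breaking existing producers and consumers.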

To summarise, organizations need a metadata engine that has a stream-based metadata log (for ingestion and change consumption), low latency lookups on metadata, the ability to have full-text and ranked search on metadata attributes, and graph queries on metadata relationships, as well as full scan and analytics capabilities.
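The stream-based log, low-latency lookups, and compaction described above can be sketched in a few lines. This is a toy, in-memory illustration under stated assumptions: the `ChangeEvent` shape and `MetadataLog` class are hypothetical, and a real engine would back the log with a durable stream and fan the events out to search and graph stores, which this sketch omits.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChangeEvent:
    # Hypothetical event shape: log offset, which asset changed, which aspect, and the new value.
    offset: int
    urn: str
    aspect: str
    value: object


class MetadataLog:
    """Append-only change log with a compacted latest-state view for low-latency lookups."""

    def __init__(self):
        self.events = []  # the stream: every change, in order
        self.latest = {}  # compacted view: (urn, aspect) -> latest value

    def append(self, urn, aspect, value):
        event = ChangeEvent(len(self.events), urn, aspect, value)
        self.events.append(event)
        self.latest[(urn, aspect)] = value  # keep the compacted view current on every append
        return event

    def lookup(self, urn, aspect):
        return self.latest.get((urn, aspect))

    def replay_since(self, offset):
        """Let downstream consumers (e.g. a search index) catch up from a known offset."""
        return self.events[offset:]

    def compact(self):
        """Keep only the last event per (urn, aspect), like a compacted topic or table snapshot."""
        last = {}
        for e in self.events:
            last[(e.urn, e.aspect)] = e
        return sorted(last.values(), key=lambda e: e.offset)


log = MetadataLog()
log.append("dataset:orders", "owner", "sales")
log.append("dataset:orders", "owner", "finance")
log.append("dataset:orders", "tags", ["gold"])
print(log.lookup("dataset:orders", "owner"))  # finance
print(len(log.compact()))  # 2
```

The full `events` list serves change consumers at low latency, while `compact()` yields the smaller snapshot that batch jobs would process as a table.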

Different use cases and applications with various extensions to the core metadata model can be built on top of this metadata stream without sacrificing consistency or freshness. You can also integrate this metadata with your preferred developer tools, such as git, by authoring and versioning this metadata alongside code. Refinements and enrichments of metadata can be performed by processing the metadata change log at low latency or batch processing the compacted metadata log as a table on the data lake.

Where should this Metadata Engine sit in the Data Stack?

The metadata engine needs to be located in the central control plane of the data stack or the data platform: a point which allows end-to-end visibility so the metadata engine is able to log metadata-as-a-stream from touchpoints spread out across the ecosystem: from sources and tools to pipelines and continuously changed and duplicated data assets across data domains.

The global metadata model is a central sub-system of your data stack, given it records and contextualises data and events at a global scale. For example, recording cross-domain relationships or triggers, lineage of data across tools, resources, sources, and customer-facing apps or APIs, and so on.

Localised metadata models, while functional at a domain level, aren’t adept at contextualising cross-domain or cross-stack events and lineage, which are very common and critical to the business. Instead, if the objective is to have narrowed-down views (too much metadata is dangerous for both human and machine users), designs such as workspaces or domain-centric logical separations can be created to retain the global context while only using part of the model.
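A workspace can be modelled as a filtered view over the global graph rather than as a separate model. The sketch below is a hypothetical illustration: `workspace_view` keeps one domain's assets plus any cross-domain neighbours they connect to, so lineage that leaves the domain stays visible.

```python
def workspace_view(nodes, edges, domain):
    """Return the nodes of `domain` plus any cross-domain neighbours they connect to.

    nodes: asset name -> owning domain; edges: list of (src, dst) lineage pairs.
    """
    local = {n for n, d in nodes.items() if d == domain}
    visible_edges = [(s, t) for s, t in edges if s in local or t in local]
    visible = local | {n for edge in visible_edges for n in edge}
    return visible, visible_edges


# Hypothetical global model spanning three domains.
nodes = {
    "mkt.campaigns": "marketing",
    "mkt.leads": "marketing",
    "sales.opps": "sales",
    "fin.revenue": "finance",
}
edges = [
    ("mkt.campaigns", "mkt.leads"),
    ("mkt.leads", "sales.opps"),
    ("sales.opps", "fin.revenue"),
]

visible, _ = workspace_view(nodes, edges, "marketing")
print(sorted(visible))  # ['mkt.campaigns', 'mkt.leads', 'sales.opps']
```

The marketing workspace hides `fin.revenue` but still shows that its leads flow into `sales.opps`, which is the cross-domain context a purely local model would lose.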

What It Means to Build for the User: Discoverability-as-a-Feature?

User experience is the most important thing we must build for. Ultimately, at the end of the line, it’s a human user sitting at a desk with a laptop, experiencing a range of emotions. These emotions are so fundamental to user experience that no trigger is truly neutral, which implies that we must always build to trigger positive emotions: feeling pleased, relieved, happy, joyful, familiar, comfortable, habituated, and so on.

Apple was thinking ahead of its time when Steve Jobs decided to focus heavily on aesthetics (a premium design that made users feel good about being seen with an iPhone) and even fun user apps for the iPhone, such as camera filter additions long before the Snapchat or Instagram days. Another great example is the early Mac’s focus on font aesthetics: it was the first to introduce different font styles, which boosted its consumer presence compared to the regular Microsofts and IBMs of the time.

Humans are, after all, simple creatures, significantly swayed by the magnetism of beauty and good design.

What does good UX imply in terms of Discoverability?

It's not only about how information is presented and organized; it’s about how the user feels while using it. They should feel in charge, confident, and able to get things done quickly and effortlessly.

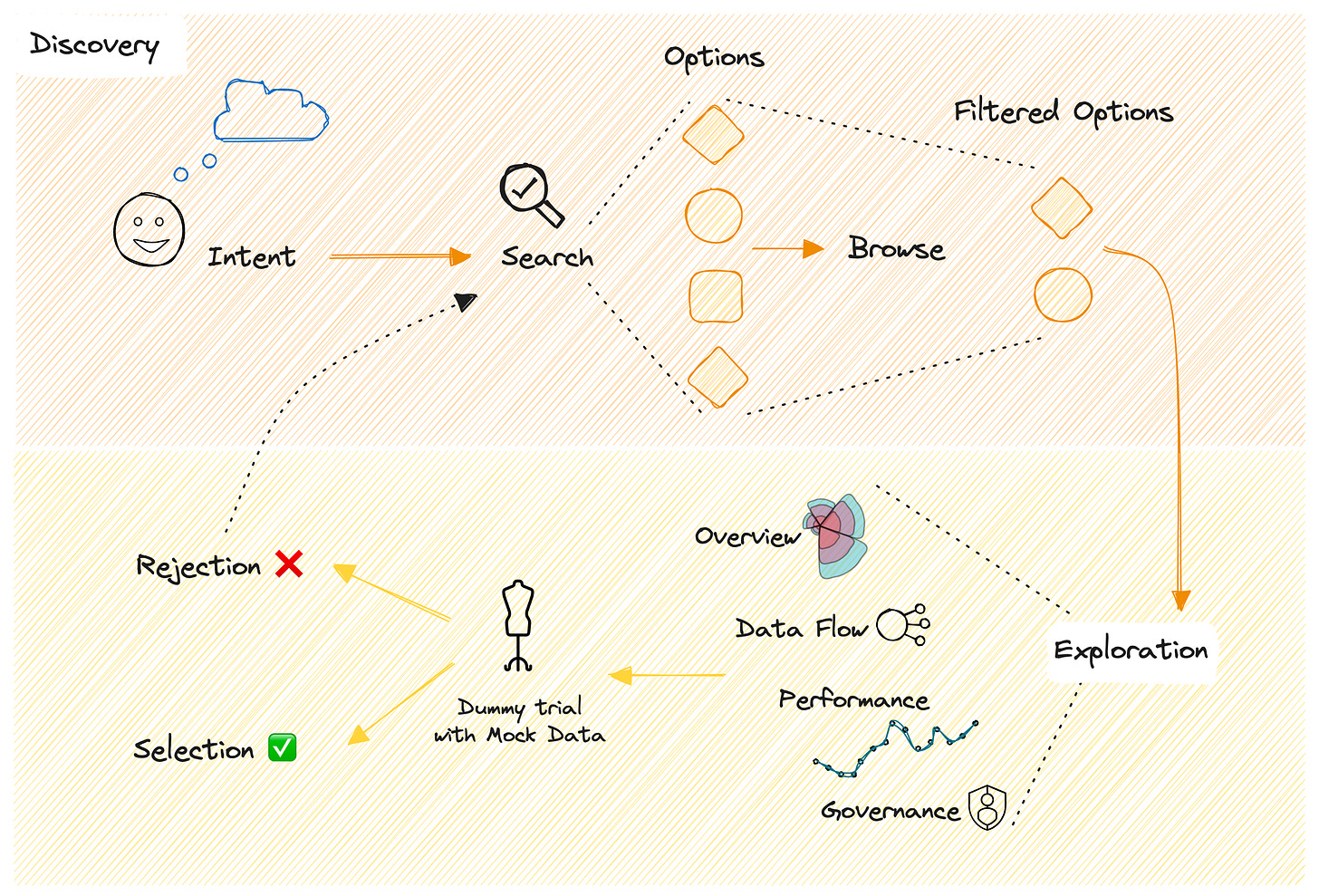

The interface for discoverability must be designed to expose the right information at the right touchpoint in the user’s interaction with data. There are three primary user actions we must consider when building discoverability:

Search

When someone uses Search, it’s because they have something specific in mind and want to find it fast. For Search to really work well, it needs to be backed by a comprehensive metadata model—complete with accurate descriptions, synonyms, and tags. That’s where your data platform comes in, handling and delivering the backend.

Imagine a marketing rep chatting with sales to get more context and deciding to add a synonym as a tag to a data asset—like referring to "lead" as a "prospect." That tag doesn’t just stay in the discoverability interface; it becomes part of the larger metadata model and shows up across all tools, dashboards, and interfaces that the marketing teams use to access that data. Adding other tags, like ones for policies, quality, or context, keeps building on this metadata model. Over time, as more people use and contribute to it, the search experience for both domains becomes more intuitive and precise.
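The synonym example can be sketched as a toy inverted index in which a single user-contributed synonym tag expands every later query. The `SearchIndex` class is hypothetical and ignores ranking, stemming, and transitive synonyms.

```python
from collections import defaultdict


class SearchIndex:
    """Toy inverted index where user-contributed synonym tags expand queries."""

    def __init__(self):
        self.postings = defaultdict(set)  # term -> asset names containing it
        self.synonyms = defaultdict(set)  # term -> equivalent terms

    def index(self, asset, text):
        for term in text.lower().split():
            self.postings[term].add(asset)

    def add_synonym(self, a, b):
        # Stored once in the shared model, so every tool querying the index benefits.
        a, b = a.lower(), b.lower()
        self.synonyms[a].add(b)
        self.synonyms[b].add(a)

    def search(self, query):
        hits = set()
        for term in query.lower().split():
            for t in {term} | self.synonyms.get(term, set()):
                hits |= self.postings.get(t, set())
        return hits


idx = SearchIndex()
idx.index("crm.leads", "qualified lead records from CRM")
print(idx.search("prospect"))  # set()
idx.add_synonym("lead", "prospect")
print(idx.search("prospect"))  # {'crm.leads'}
```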

Browse

Browsing is what users do when they want to explore or discover information in a more open-ended way. It’s about navigating through categories, menus, or hierarchies to find assets or options that catch their interest. Ideally, users start with a search and then browse to narrow things down further, using features like filters or explore tools. These features, built on top of semantic information, help zero in on exactly what they’re looking for.

The range of filters would depend on the organisation’s data culture or structure. Some examples would be filtering on the basis of Quality, Performance, Ownership, Accessibility, or Downstream Impact.
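Facet filters like these are essentially predicates applied to search results. A minimal sketch, assuming each asset carries hypothetical `quality_score`, `owner`, `accessible`, and `downstream_count` fields (performance is left out for brevity).

```python
from dataclasses import dataclass


@dataclass
class Asset:
    # Facet fields are hypothetical stand-ins for the filters named above.
    name: str
    quality_score: float
    owner: str
    accessible: bool
    downstream_count: int


def apply_filters(assets, min_quality=None, owner=None, accessible_only=False, min_downstream=None):
    """Narrow a search result set by facets; filters left as None/False are ignored."""
    result = []
    for a in assets:
        if min_quality is not None and a.quality_score < min_quality:
            continue
        if owner is not None and a.owner != owner:
            continue
        if accessible_only and not a.accessible:
            continue
        if min_downstream is not None and a.downstream_count < min_downstream:
            continue
        result.append(a)
    return result


results = [
    Asset("orders_daily", 0.97, "sales-ops", True, 12),
    Asset("orders_tmp", 0.61, "sales-ops", True, 0),
    Asset("orders_pii", 0.95, "risk", False, 4),
]
print([a.name for a in apply_filters(results, min_quality=0.9, accessible_only=True)])
# ['orders_daily']
```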

Explore

After finding candidate options through search and browsing, users often need to dive deeper into a product to see if it fits their specific purpose. The Explore action gives users a detailed view of the asset or object to help them decide whether it’s the one they’ve been looking for. This requires various interactive views that let users engage with the details of the data product.

For instance, interfaces for a product overview, lineage maps, a governance overview (where users can see SLO adherence at a glance to establish trust), a documentation overview (where they learn how to use the product), and more.

Here’s a view of the user journey of the above three actions:

Discoverability Solutions: A 10K Ft. Overview

Catalogs

Provides a structured and searchable list of items to locate and refer to.

Catalogs are comprehensive, organized repositories of items, resources, or assets. Their primary purpose is to store, classify, and make resources accessible. They focus on organization, taxonomy, and metadata.

Focus on metadata (what the resource is and its attributes).

Often static, serving as a centralized registry.

Searchable but lacks robust interactivity or engagement mechanisms.

Discoverability Feature in Data Hubs

Help users navigate, find, and use what they need with contextual guidance in vast systems or ecosystems.

These are platforms designed specifically to enhance the exploration and visibility of various entities in the broad data ecosystem, including data assets, models, infrastructure resources, metadata, code bundles/pipelines, and more. They prioritize connecting users to the right resource dynamically through advanced search, recommendations, and contextual guidance.

Key Features:

Dynamic recommendations based on user needs and interactions.

Incorporates interactive features like reviews, sharing, or curation.

Prioritizes exploration and visibility over simple listing.

Marketplaces

Focused on exploration and exchanges between providers and consumers of resources.

Marketplaces are transactional platforms where assets are not just listed but also traded or exchanged. They focus on connecting buyers and sellers and facilitating transactions.

Facilitates transactions or exchanges.

Focuses on supply-demand dynamics.

Prime factors to consider for the underlying data platform enabling the above interfaces

Unification

Complex enterprise architectures may often end up having multiple catalog solutions across different teams or domains. The underlying platform should provide the tools to unify this catalog proliferation.

Extensibility

Ability to integrate with existing discoverability investments (example: existing catalogs) and consolidate/enrich this data with a unified discoverability experience where the organisation doesn’t lose the effort and cost spent on organising data and metadata as per the design language of the catalog of choice.

Interoperable Metadata Model

The APIs on the metadata model must be integrable and interoperable with the native stacks of different domains. Alternatively, the platform must enable developers to create consumer-defined APIs that are compatible with the user’s familiar discoverability interfaces.

Strong-backed Infrastructure

The platform must be able to manage data at a huge scale, given that metadata tends to grow exponentially and can balloon into big data as even the smallest event, trigger, or change is logged.

Time travel at the Atomic Level

Alongside broad context features like tags, descriptions, or synonyms, versions also provide substantial context to users. Lineage and versions are among the most powerful tools for understanding data better, since data thrives on change.
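Atomic-level time travel amounts to keeping every version of an attribute and answering "what was it as of time T?". A minimal sketch, assuming versions arrive in timestamp order; `VersionedAttribute` is a hypothetical helper built on Python's standard `bisect` module.

```python
import bisect
from datetime import datetime


class VersionedAttribute:
    """Keeps every version of one attribute (e.g. a column's type) for as-of lookups."""

    def __init__(self):
        self.timestamps = []
        self.values = []

    def record(self, ts, value):
        # Assumes versions arrive in timestamp order, as they would from an append-only change log.
        if self.timestamps and ts < self.timestamps[-1]:
            raise ValueError("versions must be recorded in timestamp order")
        self.timestamps.append(ts)
        self.values.append(value)

    def as_of(self, ts):
        """Return the value that was current at `ts`, or None if it did not exist yet."""
        i = bisect.bisect_right(self.timestamps, ts)
        return self.values[i - 1] if i else None


col_type = VersionedAttribute()
col_type.record(datetime(2024, 1, 1), "int")
col_type.record(datetime(2024, 3, 1), "bigint")
print(col_type.as_of(datetime(2024, 2, 15)))  # int
print(col_type.as_of(datetime(2023, 12, 31)))  # None
```

Combined with lineage, an as-of lookup like this lets users see not only where a dataset came from but what its schema looked like when a downstream issue began.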

MD101 Support ☎️

If you have any queries about the piece, feel free to connect with the author(s). Or feel free to connect with the MD101 team directly at community@moderndata101.com 🧡
