Speed-to-Value Funnel: Data Products + Platform and Where to Close the Gaps

Fundamental approach to building products, platforms, and user-relevant data interfaces

"Time kills all deals." In business, this is more than a saying; it is a stifling reality. Speed is more than a virtue; it is survival. The ability to pivot fast, and in favourable directions, has always been among the most valued skills in technologists and tech leaders.

It is undeniable that in technology, and more immediately in data, speed is of the highest value. With rampant innovation, process upgrades, and roles that never existed before, speed is a skill in its own right rather than a byproduct of talented minds.

The Speed-to-Value funnel channels this urgency, turning velocity into precision. It is a system for data and domain leaders who favour using data as an asset, and for data strategists and platform developers who understand that clarity, focus, and rapid execution make the difference between staying relevant to the business and its users and being grouped under “traditional” or “legacy”.

The Speed-to-Value Funnel: Velocity Over Directionless Movement

Consensus on Success Metrics (North Stars)

This is where it all begins. Every function in the organisation needs a guiding light—North Star metrics that define success. This stage is about getting alignment across stakeholders to ensure everyone knows and agrees on what 'winning' looks like. By nailing this, we’re not just defining metrics; we’re creating a shared language of success and minimising dissonance.

Contrary to go-to success indicators like “Revenue”, what truly drives long-term growth for data platforms is the rate of user adoption. Data platforms are a long-term game: revenue signals immediate success but says little about the road ahead. By focusing on broader user adoption as the primary North Star of Platform Engineering teams, platforms evolve to become:

More user-friendly: users adopt a platform more widely only if it is friendly

More receptive to user behaviour

More intuitive, with features that deliver embedded experiences (the user is served by an embedded feature without ever feeling the need for one)

…and so on.

Primarily, what this does is increase retention, adoption, and, ultimately, revenue potential.
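To make adoption measurable as a North Star, here is a minimal sketch of one possible adoption-rate calculation. The event shape, the `adoption_rate` helper, and the definition itself (distinct active users in a trailing window divided by users who have access) are assumptions for illustration; your organisation may agree on a different definition at the consensus stage.

```python
from datetime import date, timedelta


def adoption_rate(events, eligible_users, as_of, window_days=30):
    """Share of eligible users with at least one event in the trailing window.

    events: iterable of (user_id, event_date) tuples (hypothetical shape).
    eligible_users: set of user ids who have access to the platform.
    """
    if not eligible_users:
        return 0.0
    window_start = as_of - timedelta(days=window_days)
    active = {
        user
        for user, day in events
        if window_start < day <= as_of and user in eligible_users
    }
    return len(active) / len(eligible_users)


# Hypothetical usage events: (user, day the user touched the platform)
events = [
    ("ana", date(2024, 5, 28)),
    ("ana", date(2024, 5, 30)),
    ("ben", date(2024, 5, 2)),
    ("cy", date(2024, 3, 15)),  # falls outside the 30-day window
]
eligible = {"ana", "ben", "cy", "dee"}

rate = adoption_rate(events, eligible, as_of=date(2024, 5, 31))
print(f"30-day adoption: {rate:.0%}")
```

Tracked over successive windows, a number like this shows whether the platform is getting friendlier to its users long before revenue would.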

Prioritization & Metric Modeling with Advised Product Framework

Once you know what success means, the next question is: what gets us there fastest? Here, we leverage proven product frameworks to prioritize the right metrics and model them for impact. To avoid overwhelm, the metric model needs to pass through a prioritisation filter, that is, a concrete product strategy: one that lets you substantiate the ideated model with its true value proposition and allocate effort across metrics resourcefully.

You are free to use any framework you deem fit for this purpose, such as a Lean Value Tree, right before freezing the Metric Model. Let’s take the BCG framework as an example.

The BCG (Boston Consulting Group) framework is a growth-share matrix that sheds light on high-performing tracks, discardable tracks, and ones that need consistent maintenance. Its four categories (Stars, Cash Cows, Question Marks, and Dogs) usually apply to products and initiatives, but here we use them for metric prioritisation.

In summary, a metric model prioritised with the BCG framework gives more clarity on:

Profitable and sustainable lines

Where to invest further

Where to cap efforts

What to decommission

What to be consistent with

How and to what extent to react to dips and spikes

We have addressed this framework in more detail and expanded on what each of the four segments would mean in terms of metric prioritisation. Access here ↗️
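As a rough sketch of what BCG-style metric prioritisation could look like in practice, the snippet below places each metric in one of the four quadrants. The `growth` and `share` scores, the 0.5 cutoffs, the metric names, and the action attached to each quadrant are all assumptions for illustration; the matrix itself only prescribes the two axes and four categories.

```python
from dataclasses import dataclass


@dataclass
class MetricScore:
    name: str
    growth: float  # assumed proxy: recent growth in the metric's impact, 0..1
    share: float   # assumed proxy: relative contribution to the North Star, 0..1


# One possible reading of each quadrant as an action (an assumption)
ACTIONS = {
    "star": "invest further",
    "cash cow": "stay consistent, cap extra effort",
    "question mark": "investigate before investing",
    "dog": "candidate to decommission",
}


def bcg_quadrant(metric, growth_cutoff=0.5, share_cutoff=0.5):
    """Classify a metric on the growth-share matrix."""
    high_growth = metric.growth >= growth_cutoff
    high_share = metric.share >= share_cutoff
    if high_growth and high_share:
        return "star"
    if high_share:
        return "cash cow"
    if high_growth:
        return "question mark"
    return "dog"


# Hypothetical metrics with made-up scores
metrics = [
    MetricScore("qualified_leads", growth=0.8, share=0.7),
    MetricScore("renewal_rate", growth=0.2, share=0.9),
    MetricScore("webinar_signups", growth=0.7, share=0.1),
    MetricScore("fax_inquiries", growth=0.1, share=0.05),
]

plan = {m.name: bcg_quadrant(m) for m in metrics}
for name, quadrant in plan.items():
    print(f"{name}: {quadrant} -> {ACTIONS[quadrant]}")
```

The hard part is agreeing on how growth and share are scored for a metric; once that is settled, the classification itself is trivial.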

Post-prioritisation, a logical metric model might look like this (for representation)

Functional & Granular Metrics: Instant Tracking

North Star Metrics: Results within 6 months to 1 year

To improve the performance of North Star metrics, the sales manager could come up with new data strategies or enhanced granular metrics and convey them to the sales analysts and the data product manager. Based on the new logic, the analyst could tweak the Sales Funnel Accelerator (the Logical Model powering the sales funnel metrics) in the following ways:

add, update, or delete entities in the underlying data model (single-lined logical joins)

define new logic (for metrics or data views) based on the updated requirement (logical queries)

Finally, the analytics engineer simply maps the physical data/tables as per the schema and logic of the new requirements in the logical model/prototype.
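The split of work described above (the analyst edits the logical model, the analytics engineer maps physical tables) can be sketched roughly as follows. The `LogicalModel` class, its method names, and the entity, metric, and table names are hypothetical; they are not the API of any specific platform.

```python
from dataclasses import dataclass, field


@dataclass
class LogicalModel:
    entities: dict = field(default_factory=dict)  # entity name -> column names
    joins: list = field(default_factory=list)     # single-line join expressions
    metrics: dict = field(default_factory=dict)   # metric name -> logical query
    mappings: dict = field(default_factory=dict)  # entity name -> physical table

    # Analyst-side changes: entities, joins, and metric logic
    def add_entity(self, name, columns):
        self.entities[name] = list(columns)

    def remove_entity(self, name):
        self.entities.pop(name, None)
        self.mappings.pop(name, None)

    def add_join(self, expression):
        self.joins.append(expression)

    def define_metric(self, name, logic):
        self.metrics[name] = logic

    # Analytics-engineer-side change: bind an entity to a physical table
    def map_table(self, entity, table):
        if entity not in self.entities:
            raise KeyError(f"unknown entity: {entity}")
        self.mappings[entity] = table

    def unmapped(self):
        """Entities still waiting for a physical mapping."""
        return sorted(set(self.entities) - set(self.mappings))


model = LogicalModel()
model.add_entity("lead", ["id", "account_id", "created_at"])
model.add_entity("deal", ["id", "lead_id", "closed_at"])
model.add_join("deal.lead_id = lead.id")
model.define_metric("lead_to_deal_rate", "count(deal.id) / count(lead.id)")

model.map_table("lead", "crm.raw_leads")
print(model.unmapped())  # entities the analytics engineer still has to map
```

Keeping the logic and the physical mapping in separate fields is what lets the analyst iterate on the model before any physical data is touched.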

Ready-to-Deploy Data Product MVP

Speed is critical, and nothing delivers speed like a pre-configured data product stack. At this stage, you move from concept to execution, deploying key metrics through a ready-to-go Data Product MVP. The magic? Coupled semantic modelling and infrastructure that ensures metrics aren’t just available—they’re accurate, reliable, and actionable.

Usually, for commonplace use cases, a well-adopted platform would already have boilerplates or templates to pull up and customise a little right before deployment testing, which makes life much easier for both business stakeholders and analytics engineers.

In case there are no templates, we define a data product prototype (like in stage 4). Without any mapping efforts or de-worming physical data, this prototype is already ready to deploy with hyper-realistic mock data (credit to the platform that learns from real-world data).
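To give a feel for deploying a prototype against mock data, here is a deliberately simple sketch that fills a prototype schema with random rows. Note the swap: the platform described above learns from real-world data to produce realistic mocks, whereas this sketch only uses seeded random generators. The `mock_rows` helper, the type labels, and the schema are assumptions.

```python
import random
from datetime import date, timedelta


def mock_rows(schema, n, seed=0):
    """Generate n mock rows for a schema of {column: type_label}.

    Supported type labels (hypothetical): "int", "float", "date", "str".
    """
    rng = random.Random(seed)  # seeded so the mock data is reproducible
    generators = {
        "int": lambda: rng.randint(1, 1000),
        "float": lambda: round(rng.uniform(0, 10_000), 2),
        "date": lambda: date(2024, 1, 1) + timedelta(days=rng.randrange(365)),
        "str": lambda: "".join(rng.choices("abcdefghijklmnopqrstuvwxyz", k=8)),
    }
    return [
        {column: generators[kind]() for column, kind in schema.items()}
        for _ in range(n)
    ]


# Hypothetical prototype schema for a sales entity
schema = {"deal_id": "int", "amount": "float", "closed_on": "date", "owner": "str"}
rows = mock_rows(schema, 5, seed=42)
for row in rows:
    print(row)
```

Even mock data this crude is enough to validate that the prototype's metrics and views behave before anyone maps a physical table.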

Again, we have addressed this process in excruciating detail in the guide on “How Data Becomes Product”. Learn what happens after prototype deployment, how a plethora of source and aggregate data products get created on-demand and on the fly and much more in this visual guide.

Customize On-Demand (If Needed)

Business questions evolve, and so should your data products. Flexibility is built in at this stage, allowing for semantic model updates tailored to constantly changing business needs. This step ensures the business teams’ curiosity is never limited by rigid systems—keeping insights fresh and user-relevant.

In more technical terms, at the heart of every satisfying user interaction (by data consumers) are evolving data models. Unlike stagnant models that become outdated as user behaviour shifts, evolving data models continuously adapt to reflect real-time insights, preferences, and patterns. This adaptability is the foundation for building data products that resonate deeply with users.

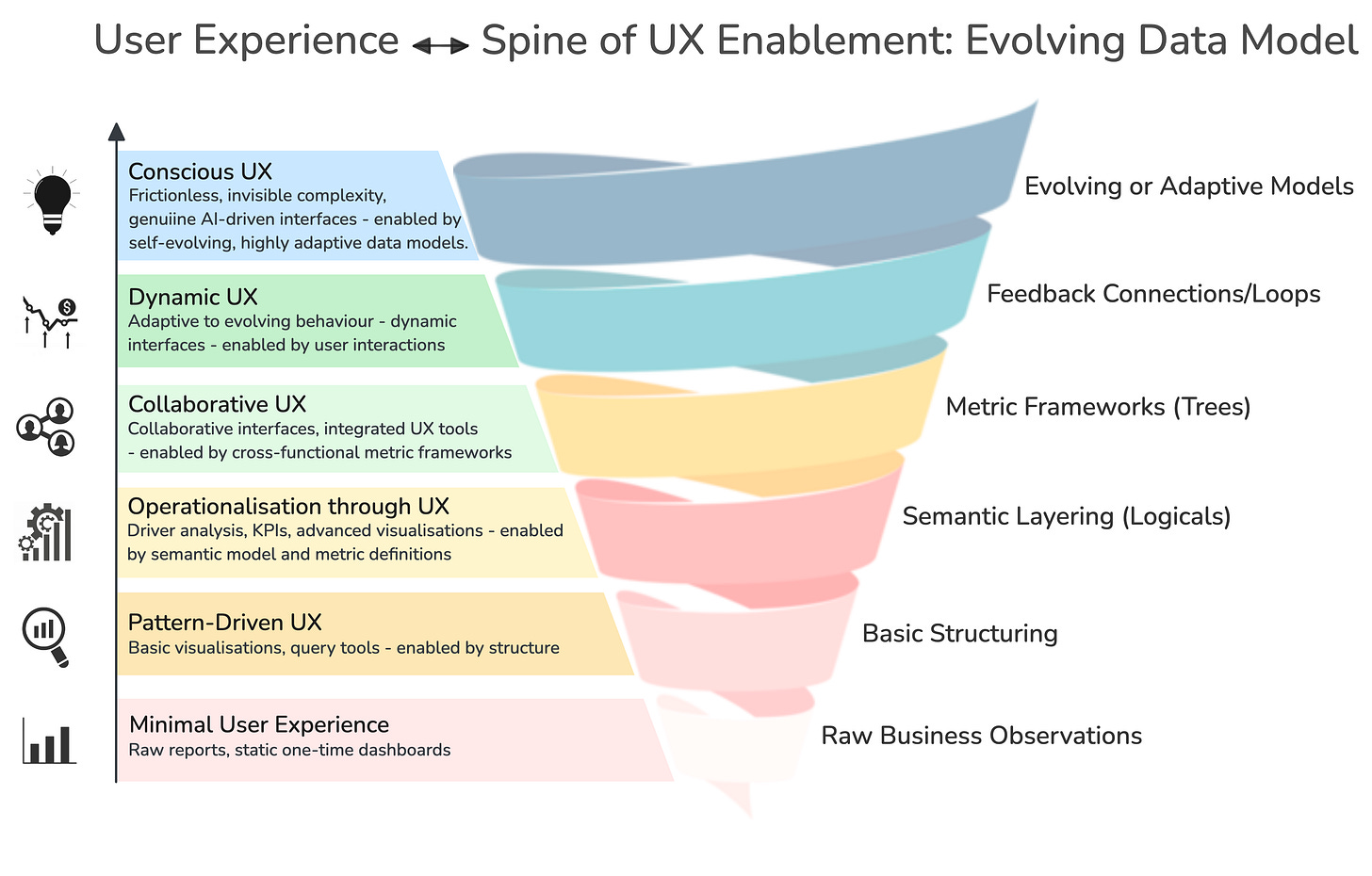

A good data platform installation should help domains and teams rise through the layers from fundamental to advanced user experiences (as in the image above). Better UX tends to mean better ROI for data teams and the business teams they are enabling.

On-Demand, Reliable Insights for Decisions

This is the endgame: the data consumption layer. Transparent metrics and clear root cause analysis enable leaders to make confident, data-driven decisions, and the resulting insights empower business teams to act decisively and stay ahead in a competitive landscape.

Now, these insights could be funnelled through a plethora of consumption options:

Dynamic dashboards, NOT one-time-use ones

GenAI interfaces or AI Agents

Data Apps (e.g., a live purchase volume indicator on, say, Streamlit)

Marketplaces/Catalogs

Business-friendly query workbench (abstracted querying through semantic model)

and so on…
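Taking the business-friendly query workbench from the list above as an example, abstracted querying through a semantic model can be sketched like this: the user names a metric and dimensions, and the SQL is generated for them. The model layout, the `compile_query` helper, and the table and column names are assumptions, not a real platform's interface.

```python
# Hypothetical semantic model: business names mapped to SQL expressions
SEMANTIC_MODEL = {
    "table": "sales.orders",
    "metrics": {
        "revenue": "SUM(amount)",
        "order_count": "COUNT(*)",
    },
    "dimensions": {
        "region": "region",
        "order_month": "DATE_TRUNC('month', ordered_at)",
    },
}


def compile_query(model, metric, by=()):
    """Turn a business request (metric + dimensions) into a SQL string."""
    if metric not in model["metrics"]:
        raise KeyError(f"unknown metric: {metric}")
    unknown = [d for d in by if d not in model["dimensions"]]
    if unknown:
        raise KeyError(f"unknown dimensions: {unknown}")

    columns = [f"{model['dimensions'][d]} AS {d}" for d in by]
    columns.append(f"{model['metrics'][metric]} AS {metric}")
    select_list = ", ".join(columns)
    sql = f"SELECT {select_list} FROM {model['table']}"
    if by:
        group_list = ", ".join(model["dimensions"][d] for d in by)
        sql += f" GROUP BY {group_list}"
    return sql


query = compile_query(SEMANTIC_MODEL, "revenue", by=["region"])
print(query)
```

The business user only ever sees "revenue by region"; table names and aggregation logic stay inside the semantic model, where they can evolve without breaking the interface.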

Boosting Adoption: Closing Gaps Where Data Products/Platforms Fail

Consistent effort on enhancing the product, with no streamlined initiative or prioritisation for a parallel adoption track, is a red flag. A product can be super-optimised and feature-rich, but if it doesn’t get adopted it is ultimately a failure: as good as an inert product with zero features.

It is, therefore, non-negotiable to pair the maturity and adoption tracks once the MVP is up. This applies to data products, to data platform products and, to be transparent, to any and all products. The P0, or base priority, of product builders should be the Experience Layer, to ultimately supercharge user adoption.

Bare-Bones Product Development

Decide who the target is

Identify the gaps between existing products and target experience (market gaps)

Build an MVP in the direction of filling that gap

Ensure an adoption pathway for the target

Iterate on MVP

Data Product Development

Developing data products requires precision, a user-centered approach, and iterative refinement. Here's how the bare-bones framework aligns:

Decide who the target is

Define the target users of the data product. Are they data analysts, engineers, data scientists, business users, or external customers? Understanding their specific needs, workflows, and pain points is critical.

Identify the gaps

Map the current tools, datasets, and workflows to uncover inefficiencies or unmet needs. Look for gaps such as lack of actionable insights, poor visualization capabilities, limited access to critical datasets, or non-intuitive interfaces. These market gaps guide what features your data product must address.

Build an MVP

Develop the smallest version of the data product that can deliver value to users. For example:

A dashboard solving a specific analytical bottleneck.

A recommendation engine that integrates into an existing system.

A reporting tool focused on one priority KPI.

Prioritize modularity and scalability so that enhancements can be added over time.

Ensure an adoption pathway

Onboard users with clear documentation, in-product tutorials, and active support. Leverage a champions program or incentivize early adopters to evangelize the product within their organizations.

Iterate on MVP

Use feedback loops to improve the data product. Examples include monitoring usage logs, conducting user surveys, and running A/B tests. Iterate to optimize performance, refine features, and improve user experience.
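One of the feedback loops mentioned above, monitoring usage logs, could look roughly like the sketch below: it measures what share of users touched each feature and flags the ones with low reach. The log shape, the helper names, and the 50% threshold are assumptions for illustration.

```python
from collections import defaultdict


def feature_reach(usage_log, total_users):
    """Share of users who used each feature at least once.

    usage_log: iterable of (user_id, feature_name) tuples (hypothetical shape).
    """
    if total_users <= 0:
        raise ValueError("total_users must be positive")
    users_by_feature = defaultdict(set)
    for user, feature in usage_log:
        users_by_feature[feature].add(user)
    return {
        feature: len(users) / total_users
        for feature, users in users_by_feature.items()
    }


def low_reach(reach, threshold=0.5):
    """Features whose reach falls below the threshold, sorted by name."""
    return sorted(f for f, share in reach.items() if share < threshold)


# Hypothetical usage log for a data product with four onboarded users
usage_log = [
    ("ana", "export"),
    ("ben", "export"),
    ("ana", "forecast"),
    ("cy", "export"),
    ("ana", "export"),  # repeat use by the same user counts once
]

reach = feature_reach(usage_log, total_users=4)
print(reach)
print("needs attention:", low_reach(reach))
```

A low-reach feature is a prompt for a conversation rather than a verdict: it may need better onboarding, or it may be solving a problem users do not have.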

Data Platform Development

A data platform requires hardcore infrastructure, modularity for scale, and a design that supports the needs of data consumers across the organisation. Here's how the bare-bones framework applies:

Decide who the target is

Define the platform’s user personas: data engineers, platform administrators, data scientists, or application developers. Consider the organisation’s goals, such as faster data pipelines, self-service analytics, or support for advanced machine learning.

Identify the gaps

Analyze the current data architecture to identify bottlenecks like:

Silos in data storage.

Complex or manual ETL processes.

Performance issues in data pipelines.

Lack of real-time capabilities or governance.

Address these technical and functional gaps in the platform roadmap.

Build an MVP

Focus on delivering a foundational platform layer that addresses the most pressing needs. Examples:

A data lake with connectors for key data sources.

A metadata catalog to improve discoverability.

Automation for the most frequent and resource-heavy ETL jobs.

Ensure the MVP includes proper security, governance, and observability features to build confidence.

Ensure an adoption pathway

Provide clear guidelines for integrating existing workflows and systems with the platform. Offer onboarding sessions, documentation, and sandbox environments. Partner with early adopters to co-develop additional features and showcase success stories.

Iterate on MVP

Gather continuous feedback from users on platform performance and usability. Iterate on capabilities such as scaling storage, improving query speed, expanding tool integrations, and introducing advanced analytics capabilities. Monitor adoption metrics to ensure the platform is delivering ROI.

There are so many more tidbits and underlying minor strategies that make a product run the extra mile. If you’re still here and still curious, I addressed some strategies that best-in-class Data Developer Platforms use to boost adoption at scale.

Get all the deets here, from templatisation and documentation strategies to the art of the possible and psychological embeddings like developing familiarities and more.

MD101 Support ☎️

If you have any queries about the piece, feel free to connect with the author(s). Or feel free to connect with the MD101 team directly at community@moderndata101.com 🧡
