Rethinking Data Movement: A First Principles Approach

Why batch-heavy ingestion breaks under modern scale, and what incremental-first, observable pipelines replace it with

TOC

Why Data Movement Needs a Rethink

The Problem: Legacy Ingestion at Scale

The Triple Squeeze on Data Teams

Common Challenges of Data Movement

Broader Operational Complexities

The Shift: The Principles of Modern Data Movement

How a Data Movement Engine Embodies the Principles

The Architecture of Modern Data Movement

Deep-Dives into Modern Data Movement Patterns

Why Data Movement Needs a Rethink

Data used to be simple: a handful of databases, nightly batch jobs, and reports waiting in the morning. Today, that world is invalid. Today, every enterprise is drowning in sources that won’t stop multiplying: relational databases, SaaS APIs, log files, event streams, you name it. Each comes with its own quirks, schemas, and update patterns.

At the same time, the patience window has collapsed. Business stakeholders don’t want yesterday’s snapshot, they expect dashboards that refresh in near-real time. Product teams want features powered by streaming data. AI initiatives demand low-latency, constantly refreshed context. SLAs rise, and tolerance for lag drops to near zero.

We’ve thrown brute force at this problem before. The Spark-era mindset was: spin up clusters, crunch everything in sight, reload the warehouse from scratch. But that model doesn’t scale when your sources are infinite, your SLAs are tighter than ever, and your cost curve is under constant pressure.

This is why the centre of gravity has shifted. Modern data movement is not about “big batch compute.” It’s about:

Incremental-first pipelines that move only what’s changed.

Change Data Capture (CDC) that keeps systems in sync without heavy lifting.

API-aware connectors that respect rate limits, pagination, and quirks of SaaS ecosystems.

Observability baked in, so teams can see, debug, and trust their pipelines end-to-end.

That’s the new playbook. Without it, everything else, like your analytics, your AI, your customer experience, suffers.

The Problem: Legacy Ingestion at Scale

Start from first principles: what is data movement for? At its simplest, ingestion moves state (records, facts, events) from places that produce truth (databases, SaaS apps, logs, streams) to places that consume truth (analytics, models, OLAP, downstream apps).

Two immutable constraints govern this transfer:

Time (how fresh does the consumer need the state?), and

Cost (what resources are we willing to spend to move and store that state?).

Everything else (connectors, formats, scheduling) is an engineering answer to those two questions, plus a third practical requirement:

Trust (did we move the right thing, in the right order, without losing history?).

Legacy ingestion assumed different priors. It assumed sources were few and stable, that overnight freshness was acceptable, and that compute was cheap enough to batch-and-crunch. Those priors no longer hold. Once you reduce the problem to its fundamentals, the failure modes become obvious and inevitable.

The Triple Squeeze on Data Teams

From those fundamentals, we can derive three pressure points that together break the old model.

First: input complexity.

The number and variety of producers exploded. It’s no longer just a couple of OLTP databases; it’s SaaS APIs with pagination and flaky auth, event streams with high cardinality, files with occasional schema drift, and transactional stores that need per-row fidelity. Each source has different semantics for change and different expectations for correctness.

Second: timeliness.

Consumers don’t want a midnight snapshot anymore. Product features, real-time metrics, and AI models expect low-latency context. That collapses the acceptable time window for movement and forces you away from monolithic nightly jobs toward more continuous, incremental approaches.

Third: economic reality.

Compute and storage are not infinite, and every naive scaling move shows up on the bill. The old reflex (spin bigger Spark clusters, redo the whole table) quickly becomes unaffordable when multiplied across dozens or hundreds of sources.

These three forces: complexity, latency, and cost, aren’t independent nuisances. They compound: more sources increase the work per unit time, tighter latency forces more compute concurrency, and that in turn multiplies cost. That is the structural squeeze data teams live inside.

Common Challenges of Data Movement

If you translate those pressures into day-to-day work, a few predictable problems appear. Each one is the logical consequence of violating a fundamental constraint above.

Take cost first. Legacy Spark-footed architectures were built to process everything in bulk; they accepted the shuffle and orchestration tax as part of the workflow. But when your job is “move a small delta” and you still pay a cluster’s tail, every efficient pattern erodes under a fixed overhead. The math of small changes plus big clusters is unforgiving.

Now look at extensibility. When adding a source requires touching the core Java ingestion codebase, you’ve created a high-friction gate. Fundamentally, integration should be an interface, not a platform-team project. If the path to connect is long and centralised, velocity collapses, and connector work becomes a backlog item rather than an enabler.

APIs are a different kind of problem: they break the assumption that all sources are pullable with SQL-like semantics. SaaS systems require auth flows, token refresh, pagination, rate-limit handling, and bespoke error handling. Treating APIs as “just another JDBC” is a category error that manifests as flaky jobs and missed data.

Ecosystem lock-in follows from the same roots. When pipelines are designed to land only into a single “depot,” you trade short-term convenience for long-term brittleness. The fundamental requirement is portability of state: the ability to land data where downstream compute or storage makes the most sense. Locking the sink violates that requirement.

Finally, the absence of native CDC is simply a mismatch of model to reality. Systems change at the row level; polling and full refreshes treat change as a blunt instrument. The logical solution is an incremental-first approach that captures what changed and preserves the before-and-after context; anything else is an engineering concession that will cost you time or money (usually both).

Broader Operational Complexity

When you step back, these problems create a second-order effect: operational entropy. Systems that were meant to be ephemeral become permanent fixtures because they contain business logic and edge-case handling nobody owns. That is how scripts calcify into critical infrastructure.

Two specific dynamics explain why operational complexity escalates.

Schema drift.

Producers will change shape. Without a principled model for detecting and handling evolution, teams either tolerate broken downstream consumers or hide the raw payloads and duplicate effort. The fundamental trade-off is between strict typing and durability of truth; systems that can’t reconcile both end up with shadow copies and audit gaps.Observability poverty.

You need to understand why a broken job failed, whether backfills are safe, and how capacity should be provisioned going forward. This feedback loop is essential to keep the system predictable. Otherwise, capacity planning and incident management risk is guesswork.

Put another way: legacy ingestion fails not because engineers are careless, but because the early design choices violated the problem’s first principles. We treated transient engineering shortcuts as if they were architecture, and the bill for that misalignment has come due: in fragility, cost, and lost velocity.

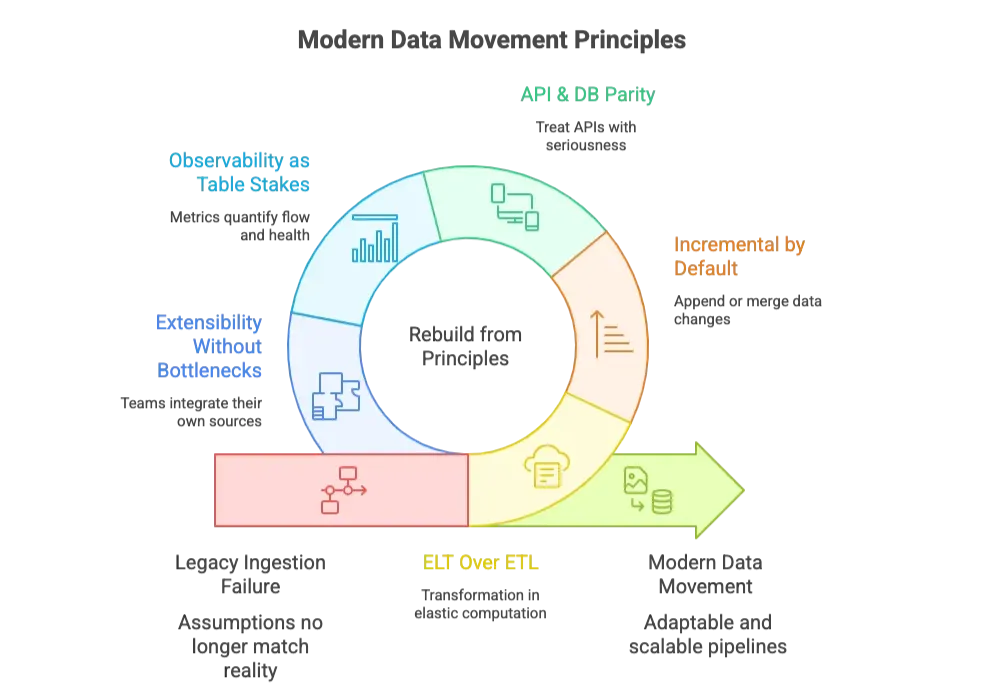

The Shift: The 5 Principles of Modern Data Movement

If legacy ingestion failed because its assumptions no longer matched reality, the way forward is to rebuild from principles that do. Each principle follows directly from the constraints of time, cost, and trust.

1. ELT Over ETL

Transformation belongs where computation is elastic and parallel, not in fragile ingestion code. That’s why the evolved approach is ELT: keep extract and load as lean as possible, then let downstream systems handle the heavy work. It respects the division of labour. Ingestion is about fidelity, while transformation is about interpretation. Mixing them creates unnecessary coupling and fragility, and why did we mix in the first place?

2. Incremental by Default

The unit of change is not a whole table, but the delta between “before” and “after.” Full refreshes use brute-force: they consume bandwidth, spike compute, and lapse SLAs as sources multiply. Modern ingestion assumes incremental first: append or merge strategies that reflect how data actually changes. CDC is not an advanced feature; it’s the baseline. Without it, freshness and cost are permanently at odds.

3 API & DB Parity

APIs are not side channels anymore; they’re often the system of record. Treating them as afterthoughts is an architectural error. A first-class ingestion system handles their realities with the same seriousness it applies to JDBC connections.

4 Observability as Table Stakes

Modern data movement treats observability as a non-negotiable. It projects metrics that quantify flow, health endpoints that expose status, and run history. Only with visibility can teams build the operational discipline that ingestion requires.

5 Extensibility Without Bottlenecks

Finally, scale demands openness. If every new source requires central engineering to write a connector, you’ve recreated the mainframe model: all power, no agility. Extensibility means the teams closest to the data can integrate their own sources and destinations without waiting in a queue. It’s a principle of autonomy: pipelines must adapt at the edges, not only in the core.

How the Standardised Data Movement Engine Embodies The 5 Principles of Data Movement

What is the Data Movement Engine

If the modern principles of data movement can be summed up as incremental-first, API-aware, CDC-capable, observable by default, and extensible without bottlenecks, the Data Movement Engine recommended in Data Developer Platform (DDP) Standard (a community-driven data platform standard) is simply their embodiment in code.

It is the DDP Data Movement Engine, built not as another monolithic ingestion framework, but as a lean system for fast, observable EL/ELT that works equally well across databases, APIs, files, streams, and change data capture.

Its philosophy is clear: make Extract and Load boringly reliable, so that everything downstream (transformation, modelling, analysis) inherits a foundation of trust.

Key Capabilities of DDP’s Data Movement Engine

The Data Movement Engine, in short, is not an ingestion tool bolted onto a stack. It is the articulation of a set of principles in running code, turning abstractions like “incremental-first” or “extensible by design” into concrete patterns you can operate, measure, and trust.

Declarative YAML configs

The Data Movement Engine uses fully declarative YAML, making pipelines explicit, repeatable, and easy to reason about from batch to near-real-time.

Batch (incremental windows, parameterised queries)

Batch ingestion reflects the natural granularity of change, with incremental windows and declarative configs that minimise cost while maximising efficiency and fidelity.

CDC (Debezium-powered, before/after state, offsets)

Change Data Capture is first-class: a Debezium-backed framework that captures every insert, update, and delete with before/after context, schema evolution, and reliable offset management for correctness

Normalisation (schema drift, variant handling, flattening)

Source instability is tamed at the edge: schema evolution, variant types, and nested structures are normalised so downstream systems receive predictable, consistent tables.

Load (idempotent, chunked, Iceberg-native, multi-destination)

Data is written safely and efficiently: idempotent, chunked loads with parallelism, supporting Iceberg-native features, multiple destinations, with at least once guarantee, and without bottlenecks.

Ops (Prometheus metrics, REST APIs, audit logs)

Pipelines are production software: built-in observability with Prometheus metrics, REST APIs for runs, offsets, and health, and structured logs that feed governance and audits.

Extensibility (custom sources/destinations via simple interface)

Teams can integrate new sources or destinations themselves through minimal Python programming, unlocking agility while retaining the Data Movement Engine’s guarantees of incrementality, typing, and reliability.

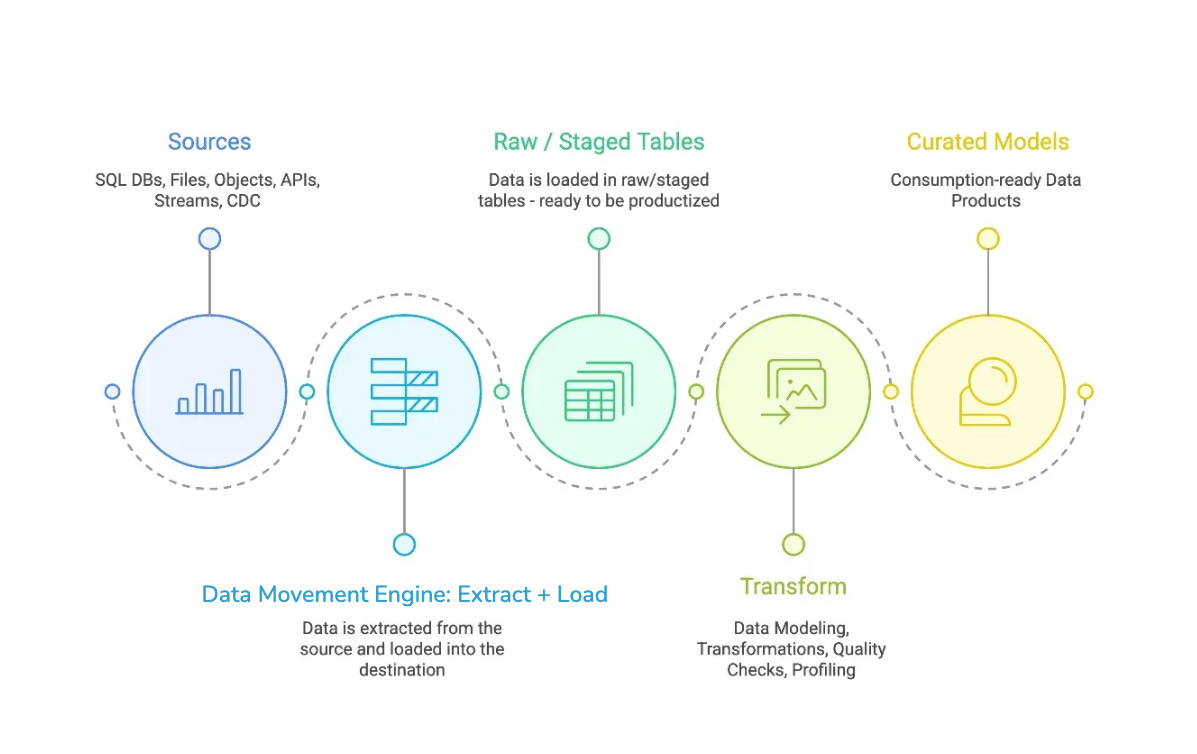

The Architecture for Modern Data Movement: ENL (Extract, Normalise, Load)

The architecture of DDP’s Data Movement Engine is a reflection of its philosophy: keep ingestion predictable, reliable, and focused on moving state faithfully, then let downstream systems handle transformation. This is the essence of ENL: Extract, Normalise, Load.

Extract

Extraction is about connecting to truth at its source. Databases, APIs, files, and streams all have different semantics for change, latency, and reliability. The Data Movement Engine treats each source according to its nature: batch when bulk windows make sense, CDC when capturing every row/column-level change matters. Every extraction is schema-aware, backpressure-safe, and designed to move only what’s necessary: the delta instead of the entire table. Reflecting that time and cost are finite resources.

Normalise

Raw data is rarely for consumption: data types vary, columns mutate, nested structures shift. Normalisation reconciles these by unifying types, adapting to schema evolution, handling columns deterministically, and so on. The goal is simple: take messy, heterogeneous data and make it boringly reliable, without discarding fidelity.

Load

Loading is where correctness meets scale. Writes are idempotent to tolerate retries without duplication. Large datasets are chunked and parallelised so failures are isolated and throughput is predictable. Destinations are pluggable: Iceberg-native by default, but extendable to other sinks, ensuring that data lands where it can be used, governed, and trusted.

The Impact of the ENL Architecture on Data Movement: The DDP Difference

DDP’s Data Movement Engine embodies the principles that address the fundamental mismatch between modern data realities and legacy pipelines. Each differentiator follows logically from first principles: move state efficiently, reliably, and observably, while keeping teams unblocked.

E+L purity by design for scalable data ingestion: Keep Extract + Load lean; push transformations downstream to MPP/lakehouse engines for agility and operational simplicity.

Production-grade CDC for near real-time data pipelines: Capture inserts, updates, and deletes with before/after context, offsets, and exactly-once semantics, eliminating brittle polling and full-refresh bottlenecks.

First-class observability and operational metrics: Built-in Prometheus metrics, run history, health endpoints, and structured logs ensure pipelines run like production software.

Extensibility without platform bottlenecks: Add custom sources or destinations easily via a simple interface, giving teams autonomy without sacrificing correctness or incrementality.

Governance built in with metadata, observability, and audit logs: Every schema change, query log, and data load feeds catalogs and governance systems automatically, ensuring compliance and traceability.

Open yet converged architecture: Run standalone with full capabilities, or integrate seamlessly with Data Developer Platform for discovery, policy enforcement, and semantic layer benefits.

High-performance with pragmatic engineering: High throughput (~45k rows/sec) is the result of multi-worker batch and curated CDC paths while maintaining reliability, observability, and operational control.

Deep Dives into Modern Data Movement Patterns with the Data Movement Engine

Modern data movement isn’t about doing the same thing faster but about rethinking the fundamentals of how data flows. The Data Movement Engine embodies that rethink.

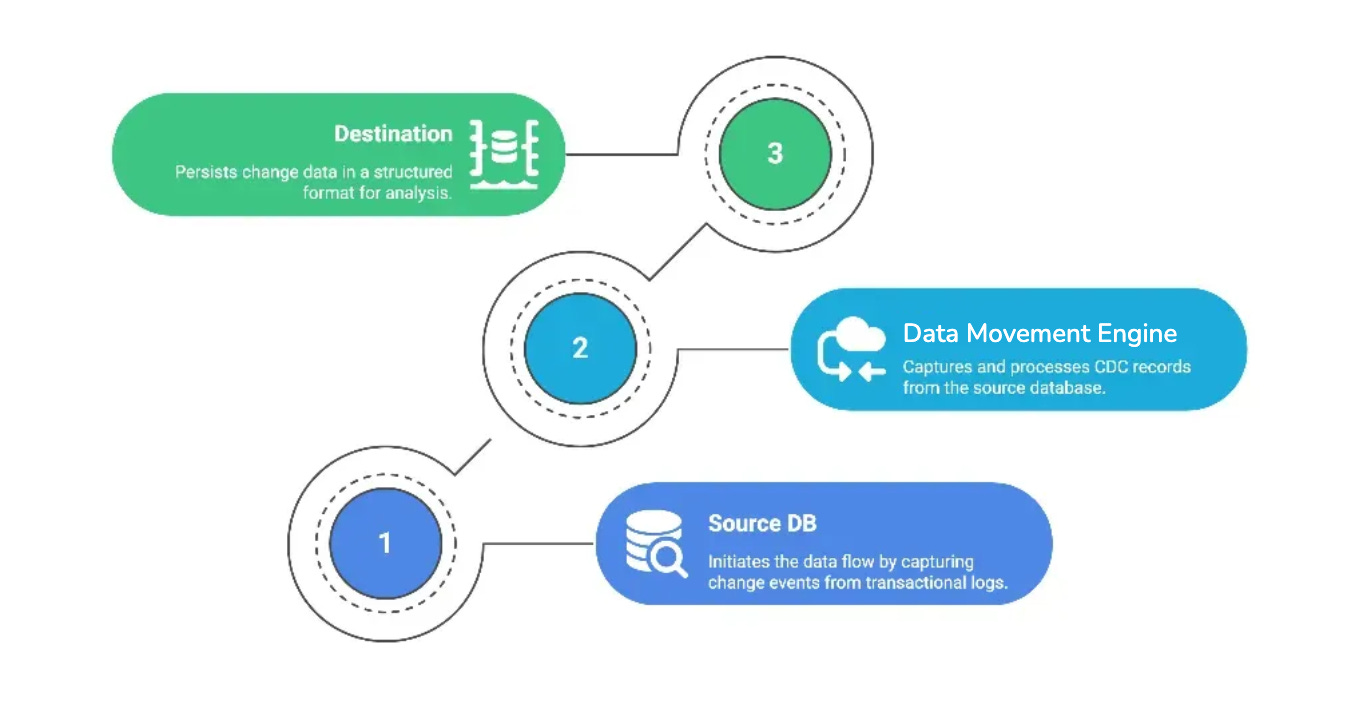

CDC, Done Right

Change is continuous, granular, and sometimes subtle. The Data Movement Engine listens to transactional logs, capturing every insert, update, and delete with full before/after context. Offsets and resumable reads make the process reliable, and compaction keeps history manageable. This replaces brittle polling and expensive full refreshes with streams teams can trust.

Batch That Respects Time & Cost

Batch isn’t dead. It’s a deliberate choice when applied incrementally. The Data Movement Engine uses windows, append/merge strategies, and parallel, chunked loads to balance throughput, cost, and reliability. Parameterised queries and automatic schema handling mean complexity doesn’t become fragility.

Observability & Governance Built In

DDP’s Data Movement Engine captures metrics, exposes APIs for runs, visibility in saved offsets, and health, and tracks structured logs and audit trails. Every load, every change, feeds governance and catalog systems automatically, making trust inherent, not bolted on.

Extensibility as a First Principle

Data isn’t static, and neither should your platform be. The Data Movement Engine lets teams add new sources or destinations via customised Python scripts, while curated CDC connectors preserve reliability. The architecture is sink-pluggable, so innovation never requires rebuilding the core.

Move More Than Just Datasets

DDP’s Data Movement Engine allows you to move metadata as well as usage logs from source systems (eg, Snowflake, PostgreSQL, etc.). It relies on the system tables and views offered by source systems.

The Future of Data Movement

At its core, data movement is about one simple principle: get the truth from its source to where it can be used, reliably and efficiently. Legacy stacks break that principle. They treat every load as a monolith, ignoring the natural grain of change, paying the cost of brittleness, opacity, and duplication at every step.

Modern data movement starts from fundamentals: only move what has changed, capture deltas with precision, treat APIs as first-class citizens, and make every pipeline measurable and accountable. Observability isn’t optional but baked into the fabric of the system.

Data Movement Engine operationalises these principles. It makes Extract + Load predictable and boring, removing the friction so that transformation, analytics, and insights can scale without compromise. When the mechanics of movement are solved, the intelligence of data can finally shine.

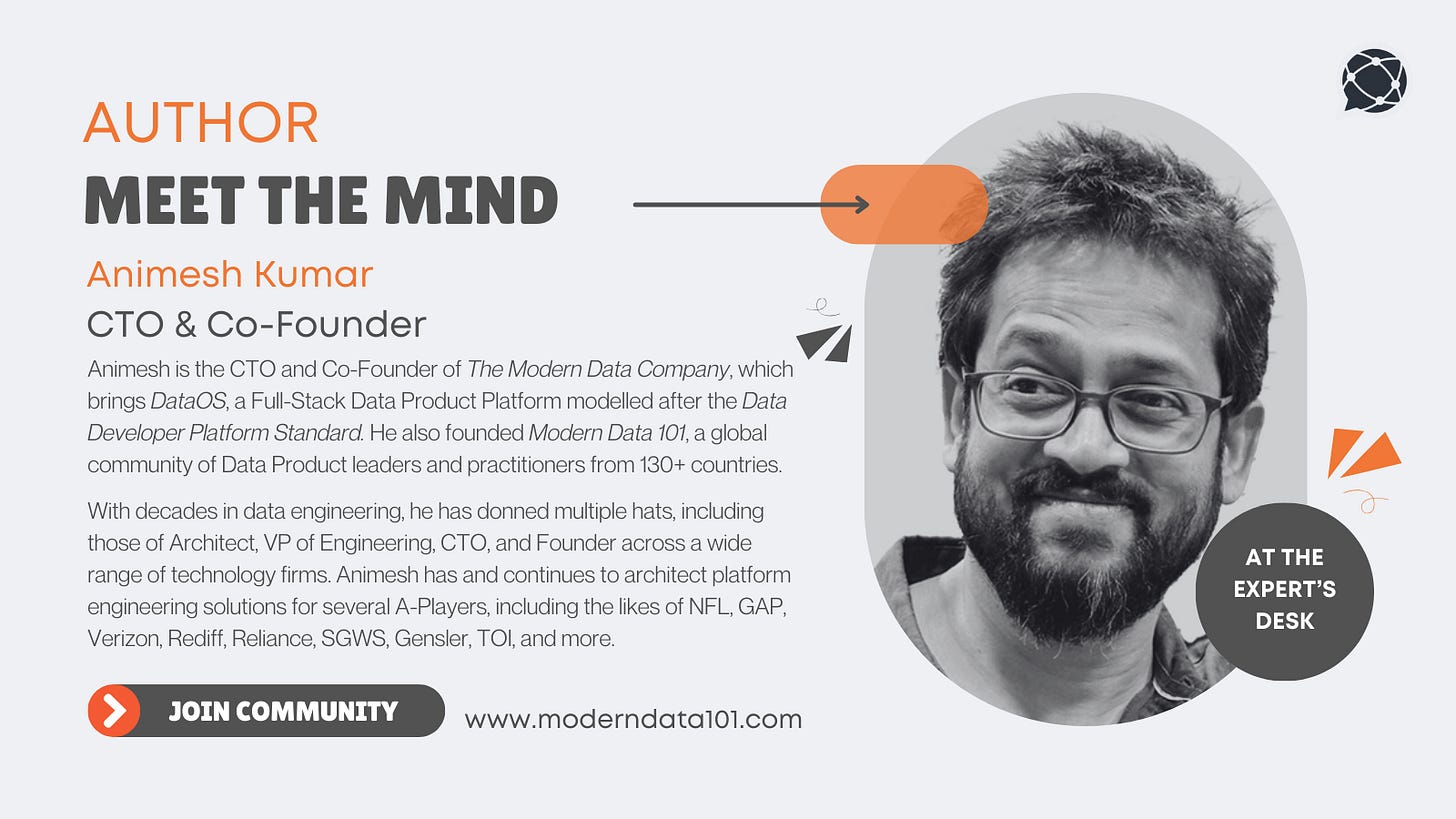

Author Connect 🤝🏻

Discuss shared technologies and ideas by connecting with our Authors or directly dropping us queries on community@moderndata101.com

Find me on LinkedIn 🙌🏻

Find me on LinkedIn 👋🏻

MD101 Support ☎️

If you have any queries about the piece, feel free to connect with the author(s). Or feel free to connect with the MD101 team directly at community@moderndata101.com 🧡

Re: " ELT Over ETL

Transformation belongs where computation is elastic and parallel, not in fragile ingestion code. That’s why the evolved approach is ELT: keep extract and load as lean as possible, then let downstream systems handle the heavy work."

If I'm reading this correctly, your position is that it's a good idea to bring new data into your data or record and then clean it up rather than cleaning it first, although you later espouse ENL and normalize the data before loading it.