Evolution of AI in Enterprises: A Tale of How We Started Using AI for Business

Mapping the Journey of AI in Enterprises, the Challenges Along the Way, and the Strategies that Enabled Experimental AI Solutions. Bonus: A Dedicated Trajectory of AI Agents.

TOC

The Enterprise AI Timeline: A Civilisation Story

Building Block of AI: A Foundational Phase

Permutations & Combinations of AI: An Experimental Phase

The Arrow Pullback: A Modern & Emerging Phase

The Foundational Problems: What Did Agents Solve in the Evolutionary Scheme of AI?

The Trajectory of Agentic Growth

The Predominance of Static Rule-Based Systems

The Help from LLMs and RAG Models

A Step Towards Single Agent Architecture with Learning Capabilities

The Current State: A Leap to Autonomous Multi-Agent Architecture

How Does the Multi-Agent Architecture Refine the AI Landscape?

Prologue

The dream of creating a self-operating consciousness (or AI-like capabilities) dates back to ancient philosophers and inventors. Go back to the 19th-century visions of intelligent machines, such as Samuel Butler’s Erewhon (an 1872 novel), where machines were imagined to surpass human intelligence. Fast forward to today: AI is no longer just a philosopher’s dream but a tangible force reshaping enterprises.

The question many ask is: why did AI gain significant traction in enterprises only recently, given its long history? The answer lies in the rapid evolution of the execution power behind AI capabilities, combined with a shift in enterprise needs. In the past, AI systems were clunky, costly, and difficult to integrate into existing infrastructure. For years, companies viewed AI as something experimental or reserved for highly specialized tasks. But with advances in processing and compute power, AI agents and solutions are now easier to adopt, operate, and integrate with the existing tools and familiar interfaces businesses are accustomed to.

This embeds AI more deeply in the fabric of modern enterprises, driving a new AI culture. It’s no longer about tech for tech’s sake but about AI percolating into the cultural and operational DNA of businesses. Organizations are beginning to realize that AI is not just a set of tools but a strategic advantage that is transforming how they operate, innovate, and serve their customers: customer experience, delivered at its finest.

The Enterprise AI Timeline: A Civilisation Story

Building Block of AI: A Foundational Phase (1950-1979)

Think of this phase as the dawn of a civilization, with architects like Turing laying the first stones of a vast futuristic metropolis. Alan Turing’s 1950 paper "Computing Machinery and Intelligence" introduced what became known as the Turing Test, a way of judging whether a machine’s behaviour can be distinguished from a human’s. This early era laid the foundations of AI: systems that could primitively mimic human reasoning but were rigid and spoon-fed.

As civilizations evolve, so do their tools. The AI community began to dig deeper, refining the initial foundations and creating more specialized systems. Like an empire growing in sophistication, AI began to show signs of underlying complexity, although it was still far from widespread adoption or mature enough to justify enterprise investment.

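Scientists like Arthur Samuel and John McCarthy broadened research into AI concepts: McCarthy coined the term "artificial intelligence", and Samuel’s checkers-playing program became an early example of machine learning. This period also saw innovations such as early robots and rudimentary autonomous systems.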

Permutations & Combinations of AI: An Experimental Phase (1980-2010)

A Period of AI Boom

Like a flourishing culture in a time of rapid growth and expansion, enterprise landscapes were soon dotted with expert systems designed to simulate human expertise and solve problems through defined rules and knowledge. XCON became one of the first commercially deployed expert systems, revolutionising the ordering of computer systems by automatically selecting components based on customer needs. The momentum grew to the point where conferences and exhibitions were organised around AI, such as the AAAI conference at Stanford, and countries made major investments to seed AI initiatives.

💰AI was already seen as a lucrative investment.

The Japanese Government allocated $850 million (over $2 billion in today’s money) to the Fifth Generation Computer project (source).

Then Came the AI Winter

A cooling-off period after the heat of all the AI excitement was inevitable (one wonders whether another one is coming). Funding and progress slowed. However, like empires that rebound after hardship, AI once again found its momentum. From the Jabberwacky chatbot and wider adoption of the backpropagation algorithm (popularised in 1986) to advances in neural networks, the field was massively reinvigorated.

The period from 1993 to 2010 changed the AI landscape as AI started appearing in everyday activities. Think of Roomba, the robotic vacuum cleaner: when the first model launched in 2002, it came as a wave of tech surprise to many. Innovations followed one after another until 2011, when Apple launched Siri, which became a widely used and popular virtual assistant.

It wasn't a collapse but a recalibration that got AI back on track for the future.

The Arrow Pullback: A Modern & Emerging Phase (2011-Present)

The Mainstream Adoption of AI

This is the golden age of AI, driven by predictive models deployed at scale, deep learning, reinforcement learning, and transformer models like GPT. AI, at this point, represented a significant leap. It started with the democratisation of algorithms at scale, making it easy for developers and innovators to build and deploy models for business use cases.

While GenAI was still out of reach for the masses (data devs) during the early stages of modern AI, it was developing rapidly within research groups and at the academic forefront. Today, complex generative models are accessible to users irrespective of their proficiency in core AI skills. They are not just accessible but customisable down to a granular level.

With mass adoption, AI applications have not just induced a childlike wonder in the population but also significantly affected real businesses and even creative pursuits, acting as an assistant that speeds up human work. From art and code to impactful scientific discoveries and topline growth, AI has suddenly struck a chord.

Enterprises across the globe embraced different GenAI powerhouses in the form of LLMs, RAG, and multimodal capabilities for their enhanced ability to understand and generate text and other media. But despite their linguistic expertise, these systems remained tethered to human instructions in the form of very specific, spoon-fed prompts. Even then, their reasoning could not be entirely trusted, given how much context the models lacked. They fell short specifically in real-world tasks and interactions that call for reasoning and autonomy.

These real-world scenarios demand more than just understanding natural language. They require reasoning, decision-making, and adapting and responding swiftly to evolving conditions.

To address this shortcoming, the obvious next step was to build systems that don’t just process commands but perceive their environment, make decisions, and take action, either autonomously or semi-autonomously.

The Rising Popularity of AI Agents

AI agents operate autonomously, using reasoning, decision-making, and multi-modal capabilities to complete complex workflows. They can combine Generative AI, deep learning, and reinforcement learning to mimic professional expertise and dynamic problem-solving through a chain of model nodes that feed context to one another.

Agents interpret nuanced queries, follow multi-step processes, and reference prior interactions to maintain continuity, which enables them to respond dynamically and adaptively in real time, mirroring the contextual understanding of skilled professionals.

Whether it is about scheduling meetings, controlling smart devices, or handling customer interactions, the agents perceive environments, make decisions, and act, bridging the gap between linguistic prowess and goal-oriented functionality.

However, agents weren’t this advanced from the very beginning. They evolved over time, with each phase adding newer AI modules or capabilities to enhance the existing functionality.

We’ll glance through the gradual evolution of these agents in a while!

*Today, real-time AI powers autonomous systems, healthcare innovations, and generative AI tools. Research into Artificial General Intelligence (AGI) and concerns over Artificial Superintelligence (ASI) have gained momentum, driven by technologies like ChatGPT and AlphaGo.

The Foundational Problems: What Did Agents Solve in the Evolutionary Scheme of AI?

Every technological breakthrough addresses a specific need or challenge, and the rise of AI agents is no different.

What have AI agents solved to date?

Limited autonomy

Absence of memory

Lack of reasoning

Weak contextual understanding

Confinement to the script (instructions)

We’ll evaluate these through the growth of agents over time and how each phase addressed these problems.

The Trajectory of Agentic Growth

The Predominance of Static Rule-Based Systems

When AI agents were first created, they were governed by static rules. These systems operate on the simple yet effective principle of "if-then" logic.

Now imagine an assistant (agent) that follows a script. This agent could handle basic FAQ queries like, “What are the business hours?” or execute simple tasks like “Send this email to the team at 5 PM.”
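To make the "if-then" principle concrete, here is a minimal Python sketch of such a scripted assistant. The rule table, the canned replies, and the function name `respond` are hypothetical illustrations, not taken from any real product. Each rule pairs a condition with an action, and the first matching rule wins.

```python
from typing import Callable

# Hypothetical rule table: each rule is a (condition, action) pair.
# The condition inspects the user's message; the action returns a reply.
Rule = tuple[Callable[[str], bool], Callable[[str], str]]

RULES: list[Rule] = [
    (lambda msg: "business hours" in msg.lower(),
     lambda msg: "We are open Monday to Friday, 9 AM to 6 PM."),
    (lambda msg: msg.lower().startswith("send this email"),
     lambda msg: "Email scheduled for 5 PM."),
]

FALLBACK = "Sorry, I can only help with the questions I was programmed for."


def respond(message: str) -> str:
    """Return the action of the first rule whose condition matches (if-then logic)."""
    for condition, action in RULES:
        if condition(message):
            return action(message)
    # No rule matched: a static system can only fall back to a canned reply.
    return FALLBACK
```

Any request the script does not anticipate falls through to the fallback reply. Every new kind of request means writing another rule, and this is where the scalability problem starts.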

The challenge it overcame: The problem of automating repetitive tasks and handling straightforward, high-volume requests.

How did it help?

Consistently provided accurate responses to repetitive queries, ensuring reliability

Took on routine tasks, lightening the human workload and improving efficiency

Delivered predictable outcomes in well-defined scenarios

They mimic human logic through explicitly programmed rules, delivering reliability and predictability. While less dynamic than other forms, their strength lies in their human-crafted precision.

But scalability is a crucial aspect we look for in almost every stack today. An AI stack that is not scalable enough will only become a liability in the future, and this is a common challenge for rule-based systems. As these systems grow in complexity, managing a vast volume of rule sets becomes increasingly difficult.

The Help from LLMs and RAG Models

These are like salespeople who have memorized every product catalogue and can provide information on demand.

Foundation models, including various large language models pre-trained on vast datasets, provide knowledge and insights and can be fine-tuned for specific tasks. Retrieval-augmented generation (RAG) extends this by fetching relevant documents at query time and passing them to the model as context. Compared with the agent that followed a script, these are a step ahead: assistants capable of understanding and answering complex, contextual questions, such as “What’s the best way to summarize this report?”
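Here is a minimal sketch of the retrieval step behind RAG. It assumes a tiny in-memory document list and uses plain word overlap where a production system would typically use vector embeddings. The document texts and the helper names (`retrieve`, `build_prompt`) are hypothetical. The call to an actual LLM is deliberately left out: the point is only that relevant knowledge is fetched at query time and handed to the model as context, instead of being hard-coded as rules.

```python
import re
from collections import Counter

# Hypothetical product catalogue standing in for an enterprise knowledge base.
DOCUMENTS = [
    "The Pro plan includes priority support and a 99.9% uptime SLA.",
    "Refunds are available within 30 days of purchase.",
    "The Starter plan supports up to five users.",
]


def tokenize(text: str) -> Counter:
    """Lower-cased word counts; a crude stand-in for embeddings."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Rank documents by word overlap with the query and return the top k."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: sum((q & tokenize(d)).values()), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list[str]) -> str:
    """Ground the model in retrieved context; the LLM call itself is omitted."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```

Updating the assistant's knowledge then means editing `DOCUMENTS`, not rewriting logic. This is the "no constant updates or human intervention" benefit in miniature.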

The challenge it overcame: The problem of scaling knowledge delivery across diverse and evolving queries without requiring constant updates or human intervention.

How did it help?

Broader Knowledge: Could handle diverse requests without requiring hard coding.

Scalability: Could answer advanced queries across domains using pre-trained data.

Flexibility: Adapted to varied user queries, offering general-purpose solutions.

Agents that depended on foundation models, however, still showed limitations carried over from earlier phases, primarily in autonomy and adaptability. Their autonomy was greater than that of standalone LLMs and RAG systems, yet still far from optimal.

A Step Towards Single Agent Architecture with Learning Capabilities

Now consider a specialist assistant: an expert in her domain, with almost all the knowledge, skills, and decision-making power she needs.

The lack of adaptability and learning in earlier agents was short-lived, as innovators introduced agents with machine learning (ML) capabilities. These agents could learn from data and adapt to new situations. They were often standalone systems handling specific tasks.

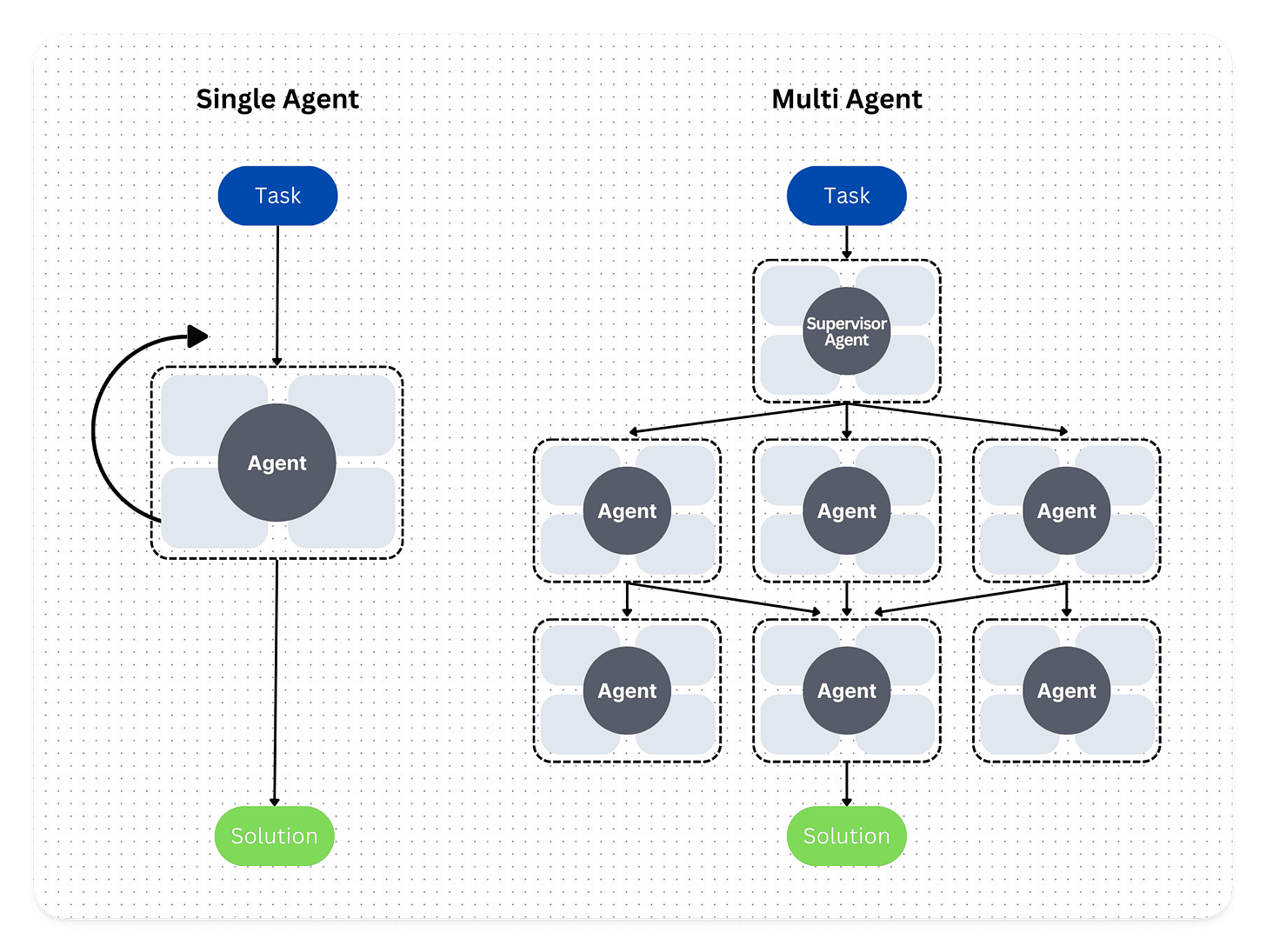

Methods like reinforcement learning (RL) enabled agents to optimize decisions based on environmental feedback. However, their scope remained limited to isolated applications. These single-agent architectures often struggled to coordinate and collaborate with other agents for cross-domain functions.
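Reinforcement learning can be illustrated with its simplest setting, the multi-armed bandit. In this hypothetical sketch, an agent chooses between three support channels whose success rates (`SUCCESS_PROB`) it does not know. It mostly exploits its best current estimate, occasionally explores at random (epsilon-greedy), and updates its estimates from the reward the environment returns. The channel names and probabilities are made up for illustration.

```python
import random

# Hypothetical environment: three actions (e.g. ways to handle a ticket),
# each with a success probability the agent does not know in advance.
SUCCESS_PROB = {"email": 0.2, "chat": 0.5, "phone": 0.8}


def run_bandit(steps: int = 2000, epsilon: float = 0.1, seed: int = 0) -> dict[str, float]:
    """Epsilon-greedy bandit: learn action values purely from reward feedback."""
    rng = random.Random(seed)
    values = {a: 0.0 for a in SUCCESS_PROB}   # estimated reward per action
    counts = {a: 0 for a in SUCCESS_PROB}     # how often each action was tried
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.choice(list(SUCCESS_PROB))   # explore a random action
        else:
            action = max(values, key=values.get)      # exploit the best estimate
        reward = 1.0 if rng.random() < SUCCESS_PROB[action] else 0.0
        counts[action] += 1
        # Incremental mean: nudge the estimate toward the observed reward.
        values[action] += (reward - values[action]) / counts[action]
    return values
```

With these settings, the estimate for `phone` should end up highest, so the agent learns to prefer it without ever being told the probabilities. Note the scope, though: a single agent optimising one isolated decision.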

But was this the end? Not even close!

With AI agents, advancement comes in leaps and bounds. The challenges that single-agent architectures introduced had to be addressed quickly.

Single-agent architectures are often constrained by a limited context window. This restricts the agent’s ability to handle voluminous inputs (beyond a certain length of conversation or size of dataset), which leads to suboptimal access to information and lower output quality. As a result, these agents are seen to lose context and generate irrelevant or hallucinated responses.
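The context-window limit can be sketched as a fixed token budget over the conversation history. This is a simplified, hypothetical illustration. It approximates tokens by counting words (real models use their own tokenizers) and keeps only the most recent messages that fit, which is one common way to handle overflow.

```python
def fit_to_context(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages that fit in a fixed context budget.

    Tokens are approximated by whitespace-separated words, a simplifying
    assumption; real models use their own tokenizers.
    """
    kept: list[str] = []
    used = 0
    for msg in reversed(messages):       # walk from newest to oldest
        cost = len(msg.split())
        if used + cost > max_tokens:
            break                        # everything older is silently dropped
        kept.append(msg)
        used += cost
    return list(reversed(kept))          # restore chronological order


history = [
    "Customer ID is 4821.",
    "I ordered a laptop last week.",
    "It arrived damaged.",
    "What are my options?",
]
window = fit_to_context(history, max_tokens=12)
# window == ["It arrived damaged.", "What are my options?"]
```

The customer ID from the first message no longer fits in the budget. An agent working from this window would have to guess it, which is one route to the irrelevant or hallucinated responses described above.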

Hence, we arrived at the autonomous multi-agent architecture of the current state.

The Current State: A Leap to Autonomous Multi-Agent Architecture

These are like a coordinated team of assistants, bringing organisations closer to their goal of an AI makeover. The agents are designed to be goal-driven: acting independently, they autonomously make decisions and take actions based on their perception of the environment and their goals.

What makes autonomous agents different from traditional agents is the capability to execute a series of tasks (like a workflow or a step-by-step process) by leveraging memory tools.

This generation of AI agents leverages GenAI, one or more large language models, and deep learning (or a combination of other AI architectures) to build actionable intelligence and reasoning capabilities for carrying out a sequence or chain of tasks. Interestingly, these agents can validate their outputs, iterate to improve quality, and adapt or self-learn from past interactions.
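Here is a minimal sketch of such an agent loop. It assumes two hypothetical tools (`fetch_sales`, `summarize`), implemented as plain functions where a real system would call APIs or LLMs. The agent executes a chain of steps and feeds each output into the next. It re-runs a step if a placeholder validation check fails, and it records every result in memory that persists on the agent.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical tools; in practice these might wrap APIs or LLM calls.
TOOLS: dict[str, Callable[[str], str]] = {
    "fetch_sales": lambda region: f"sales data for {region}",
    "summarize": lambda text: f"summary of {text}",
}


@dataclass
class Agent:
    max_retries: int = 2
    memory: list[tuple[str, str]] = field(default_factory=list)  # (tool, output) history

    def validate(self, output: str) -> bool:
        """Placeholder check; a real agent might grade output with an LLM or rules."""
        return bool(output.strip())

    def run(self, plan: list[tuple[str, str]]) -> list[tuple[str, str]]:
        """Execute a chain of (tool, input) steps, feeding each output to the next."""
        previous = ""
        for tool_name, arg in plan:
            arg = arg or previous              # empty input: reuse the last output
            for _ in range(self.max_retries + 1):
                output = TOOLS[tool_name](arg)
                if self.validate(output):      # retry only if validation fails
                    break
            self.memory.append((tool_name, output))
            previous = output
        return self.memory
```

For example, `Agent().run([("fetch_sales", "EMEA"), ("summarize", "")])` chains the two tools so that the summary is built from the fetched data. Both steps stay in `memory` for later reference.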

How Does the Multi-Agent Architecture Refine the AI Landscape?

Food for thought: definitions keep evolving with every improvement, and so does the definition of an agent. If you trace back the original definition of an agent, you’ll find it synonymous with today’s autonomous multi-agent systems.

Amplifying efficiency

Multi-agent systems tackle complex tasks by breaking them into smaller, manageable pieces and assigning each piece to a specialized agent. This approach streamlines processes, minimizes delays, and enhances overall productivity. Think of it as a team of experts, each focusing on their unique role, working together seamlessly to deliver faster and more efficient outcomes.
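The divide-and-assign idea can be sketched with a coordinator and a few specialists. Everything here is hypothetical. The specialists are plain functions standing in for model-backed agents, and `decompose` hard-codes the split that a real planner agent might produce. Subtasks are dispatched concurrently with Python’s standard `ThreadPoolExecutor`, so independent pieces can run in parallel instead of one after another.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# Hypothetical specialist agents; each is a plain function for illustration.
SPECIALISTS: dict[str, Callable[[str], str]] = {
    "analytics": lambda t: f"[analytics] done: {t}",
    "logistics": lambda t: f"[logistics] done: {t}",
    "marketing": lambda t: f"[marketing] done: {t}",
}


def decompose(goal: str) -> list[tuple[str, str]]:
    """Hard-coded split of a goal into (role, subtask) pairs."""
    return [
        ("analytics", f"forecast demand for {goal}"),
        ("logistics", f"plan shipping for {goal}"),
        ("marketing", f"draft campaign for {goal}"),
    ]


def coordinate(goal: str) -> dict[str, str]:
    """Dispatch subtasks to specialists concurrently and gather their results."""
    with ThreadPoolExecutor() as pool:
        futures = {role: pool.submit(SPECIALISTS[role], task)
                   for role, task in decompose(goal)}
        return {role: f.result() for role, f in futures.items()}
```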

Expertise at scale

These systems shine in scenarios requiring deep, diverse knowledge. Multi-agent architectures allow for the integration of agents with niche expertise—marketing, logistics, analytics, or customer service. This collective intelligence enables organizations to tackle multifaceted challenges more effectively than a generalized model could.

Dynamic flexibility

Multi-agent systems thrive on adaptability. As business needs evolve or workloads grow, new agents can be seamlessly added without overhauling the entire system. They can be upgraded, replaced, or customized with minimal disruption, ensuring the system remains agile and future-proof.
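One common way to get this kind of plug-in flexibility is a registry that routes requests by skill at call time. The `AgentRegistry` class here is a hypothetical sketch, not a specific framework’s API. Because the router only looks agents up by name, adding a new agent or upgrading an existing one is a single `register` call, and the routing code never changes.

```python
from typing import Callable


class AgentRegistry:
    """Hypothetical registry: agents are looked up by skill at call time,
    so adding or replacing one does not require changing the router."""

    def __init__(self) -> None:
        self._agents: dict[str, Callable[[str], str]] = {}

    def register(self, skill: str, agent: Callable[[str], str]) -> None:
        self._agents[skill] = agent      # adds a new agent or replaces an old one

    def route(self, skill: str, request: str) -> str:
        if skill not in self._agents:
            raise KeyError(f"no agent registered for skill '{skill}'")
        return self._agents[skill](request)


registry = AgentRegistry()
registry.register("support", lambda r: f"support v1 handled: {r}")
# Later, as needs evolve: add a new capability and upgrade an existing one.
registry.register("billing", lambda r: f"billing handled: {r}")
registry.register("support", lambda r: f"support v2 handled: {r}")
```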

Self-driving workflows

In a multi-agent setup, agents coordinate seamlessly, automating complex workflows end-to-end. Each agent handles specific steps, ensuring repetitive tasks run smoothly and autonomously, freeing up human resources for more strategic initiatives.

MD101 Support ☎️

If you have any queries about the piece, feel free to connect with the author(s). Or feel free to connect with the MD101 team directly at community@moderndata101.com 🧡

Author Connect 🖋️

Find me on LinkedIn 💬

Find me on LinkedIn 💬