Never seen a data quality issue that wasn’t actually an ownership problem | John Wernfeldt

Why most “data quality issues” are actually ownership failures

About Our Contributing Expert

John Wernfeldt | Managing Director: Data, Analytics & AI

John Wernfeldt works with CDOs and senior data leaders who are under pressure to “be AI-ready” while still struggling with trust, ownership, and decision clarity in their data. His focus is practical data governance and foundations, the kind that reduce rework, end metric debates, and give executives confidence to act.

Based in Stockholm, John is Managing Director at Northridge Analytics, where he helps organisations turn data and analytics into tangible business value, and President of DAMA Sweden. His background spans data strategy, governance, architecture, and analytics leadership roles at Gartner, Capgemini Invent, and KPMG.

Known for cutting through hype, John emphasises clear decision rights, fit-for-purpose quality, and governance embedded into delivery. His work helps organisations move from fragmented, fragile data to trusted foundations that scale with analytics and AI. We’re thrilled to feature his unique insights on Modern Data 101!


Let’s Dive In

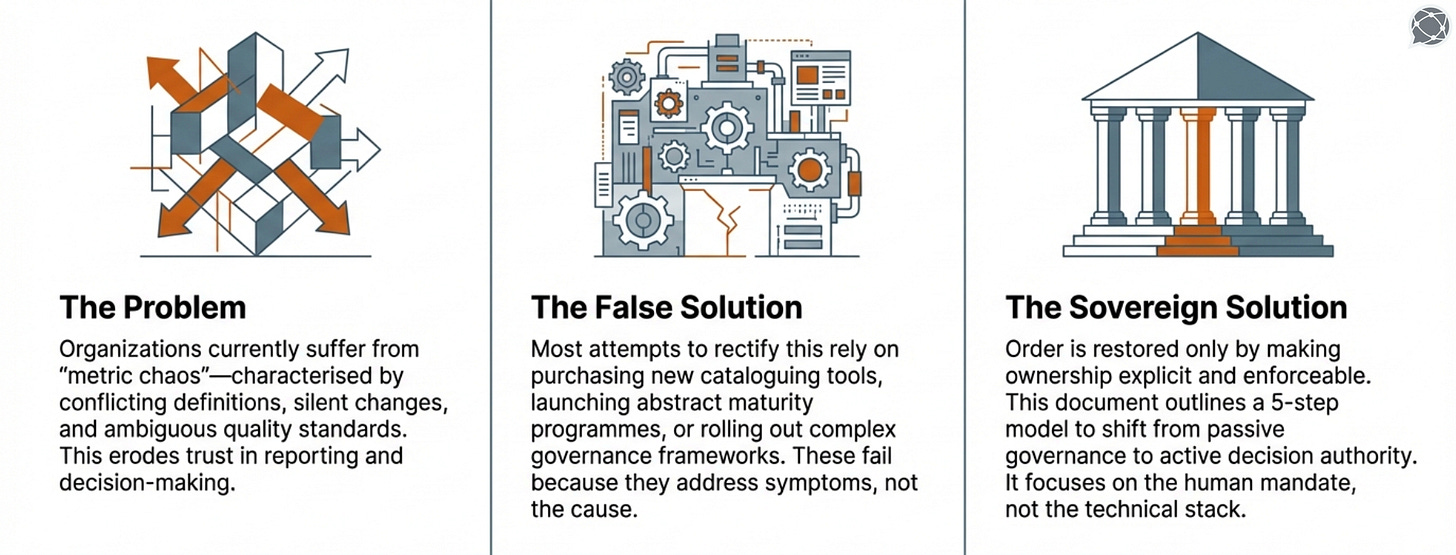

I’ve never seen a “data quality issue” that wasn’t, at its core, an ownership problem. I’ve seen this play out more times than I can count.

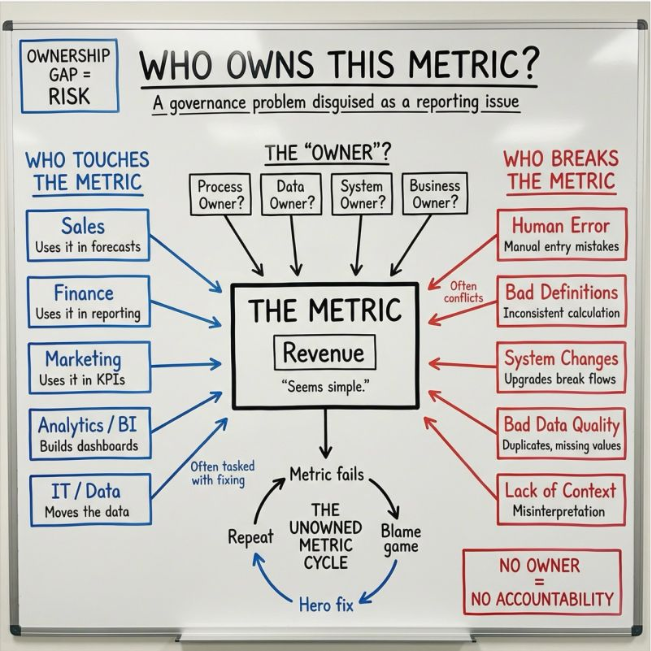

A number looks off, someone flags it, everyone agrees it’s “important.” And then the questions begin. Sales uses it in forecasts, finance uses it in reporting, marketing uses it in KPIs, analytics builds dashboards on top, and IT moves the data and gets asked to fix it.

Everyone touches the metric, yet no one owns it.

When a metric breaks, the same explanations always show up

When the number is questioned, the conversation goes in predictable directions.

A recent survey on the state of data and AI in enterprises suggests that the same metric gets corrupted as it changes hands, because no one in particular owns its health. Many of us touch the same metric, and we end up seeing different versions.

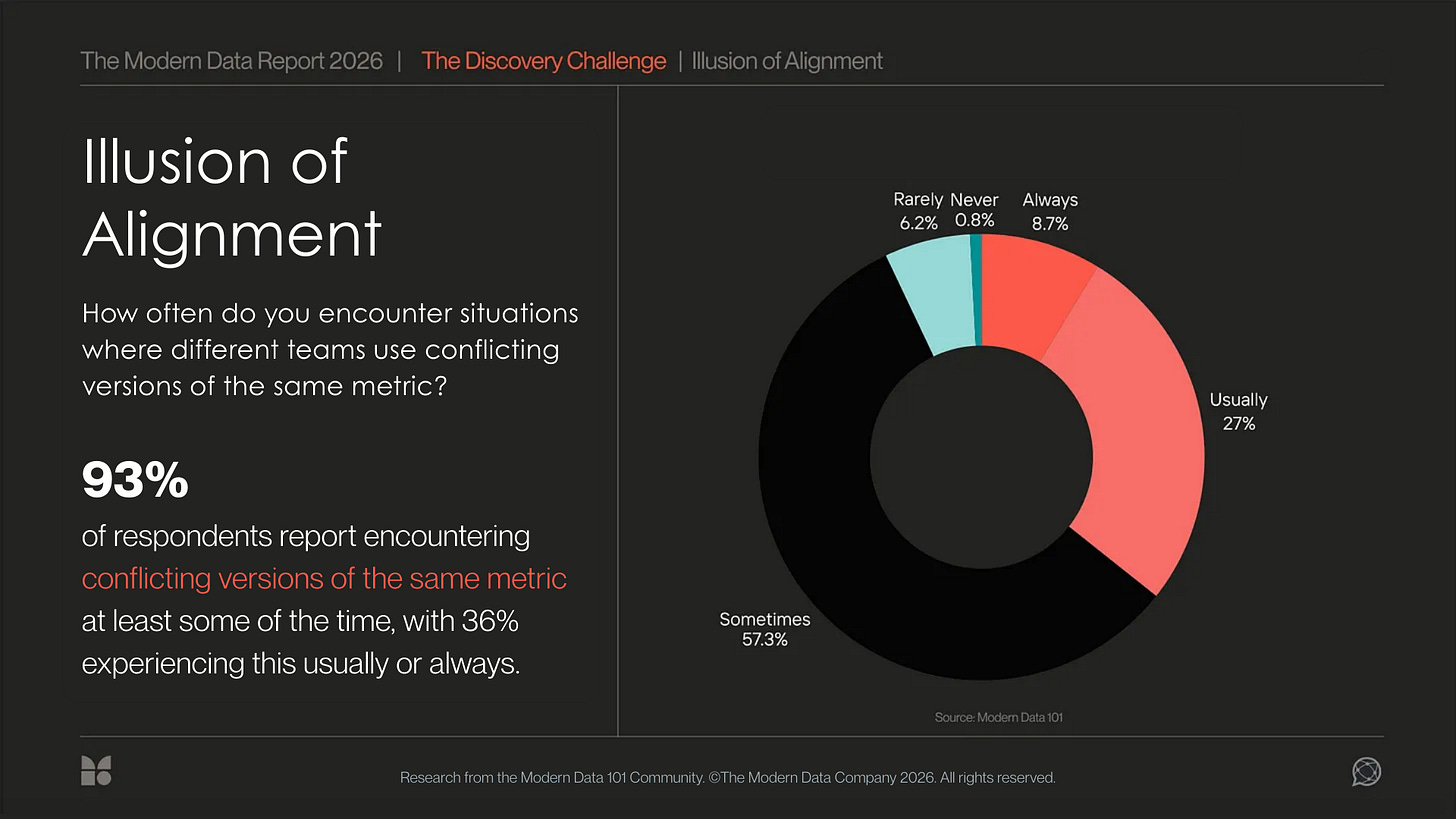

93% of respondents say they encounter conflicting metrics. Nearly half do not fully trust their own data, and 68% explicitly state that it is not trustworthy enough for AI.

- Findings from the Modern Data Report 2026

The explanations are familiar: the definition is unclear, the source system changed, there was manual input, or the data quality dropped. All of these are often true, but they’re also mostly irrelevant.

Because the only question that actually matters never gets answered:

Who can say what this metric means, how it’s calculated, and when it’s allowed to change?

When that person doesn’t exist, the discussion goes nowhere.

The cycle that follows is always the same

When ownership is missing, organisations fall into a loop that looks like this:

The metric breaks, and a hero steps in to fix it. People argue about whose fault it is, and a temporary workaround is put in place. Then everyone moves on, but only until next month.

No owner leads to no accountability, and no accountability leads to permanent risk.

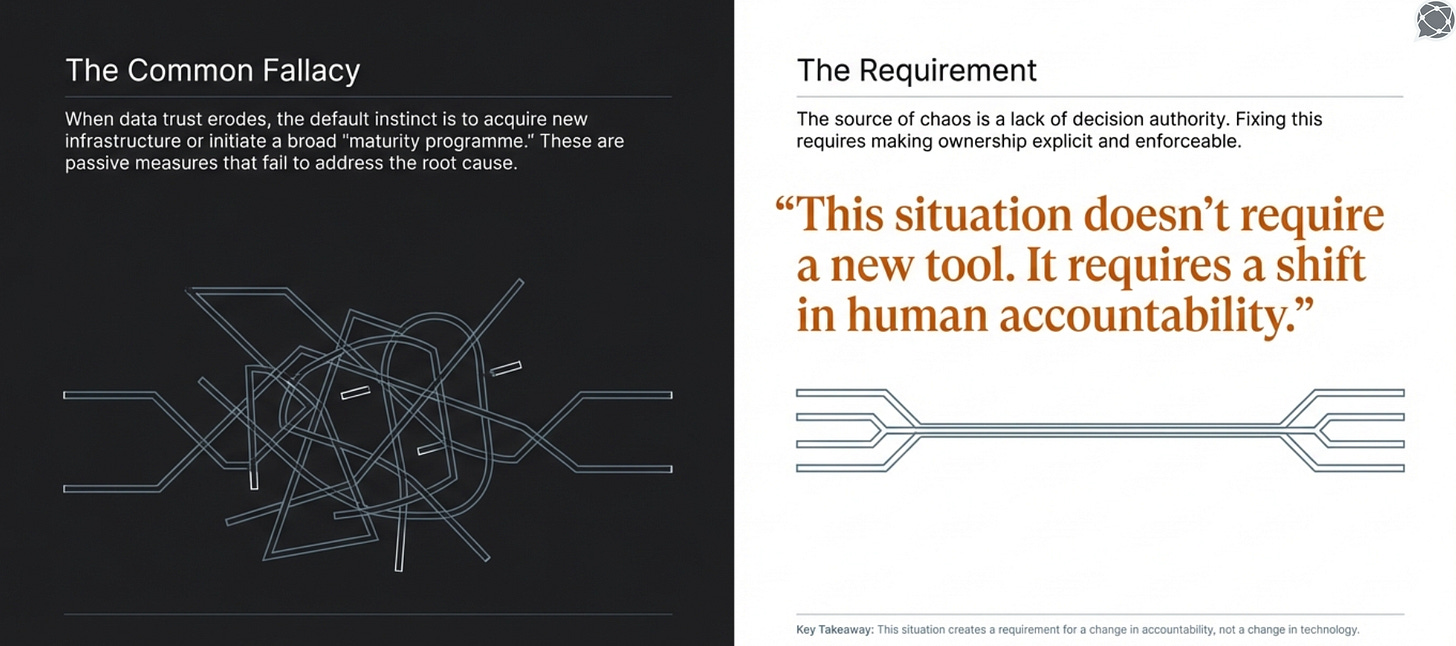

This is why so many teams believe they have reporting problems, BI problems, or tooling problems. What they truly have is a governance gap.

Why “data quality” becomes the wrong label

Calling this a data quality issue is convenient. It allows the organisation to:

Treat the problem as technical

Push responsibility toward IT or analytics

Focus on fixing symptoms instead of decisions

Data quality becomes a proxy for unresolved ownership. But quality cannot exist in a vacuum. It only improves when someone is accountable for the outcome. Until then, every fix is temporary.

The problem is decision authority, not data. At its core, a metric is not a technical artifact. It’s a decision artifact. It exists so someone can make a decision, explain it, and stand behind it.

If no one has the authority to:

Define the metric

Approve changes

Reject misuse

Be accountable when it’s wrong

Then the metric will always be fragile, no matter how clean the pipeline looks.

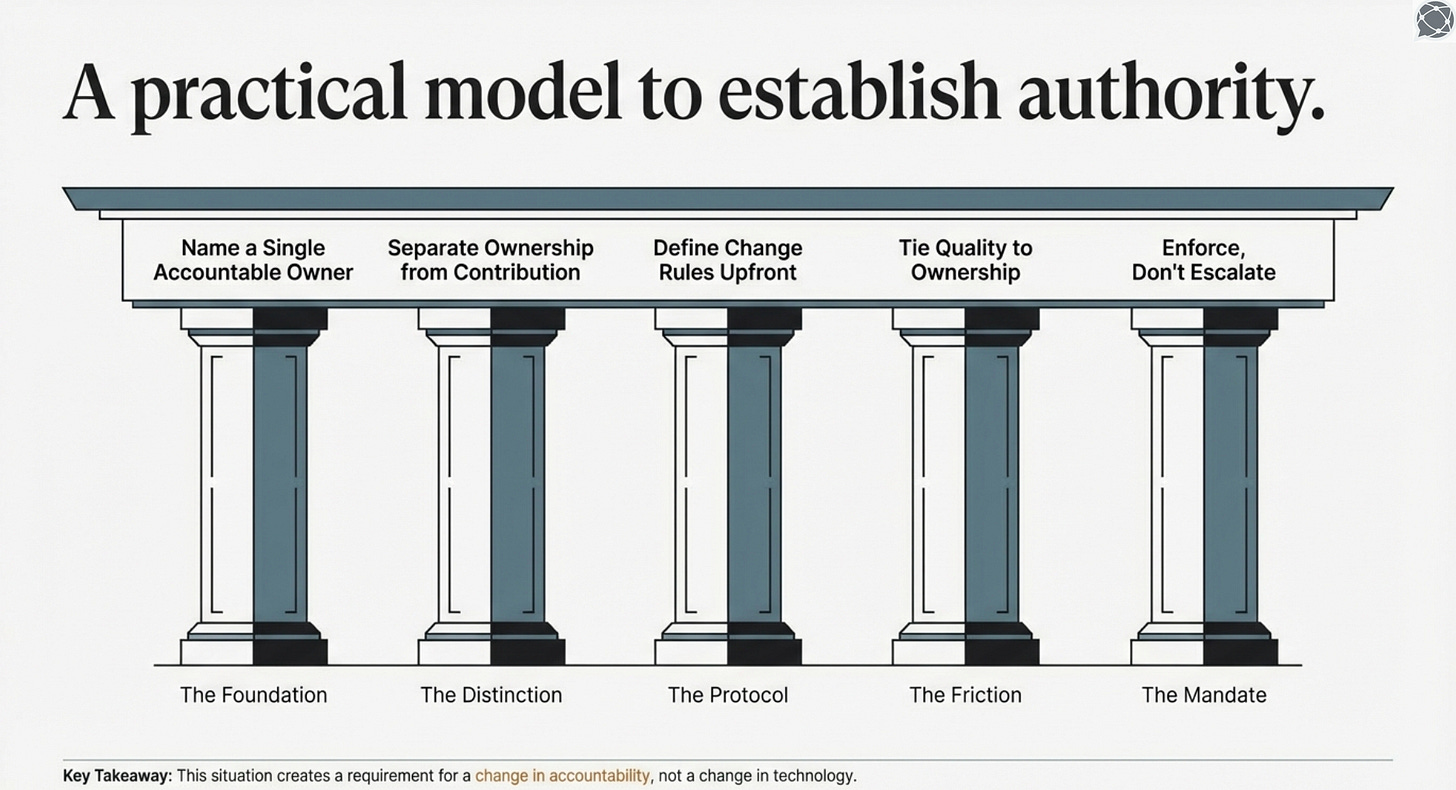

A simple model to fix decision authority for data

This situation doesn’t require a new tool, a maturity program, or a framework rollout. It requires making ownership explicit and enforceable.

Here’s a proven practical model to improve or establish decision authority.

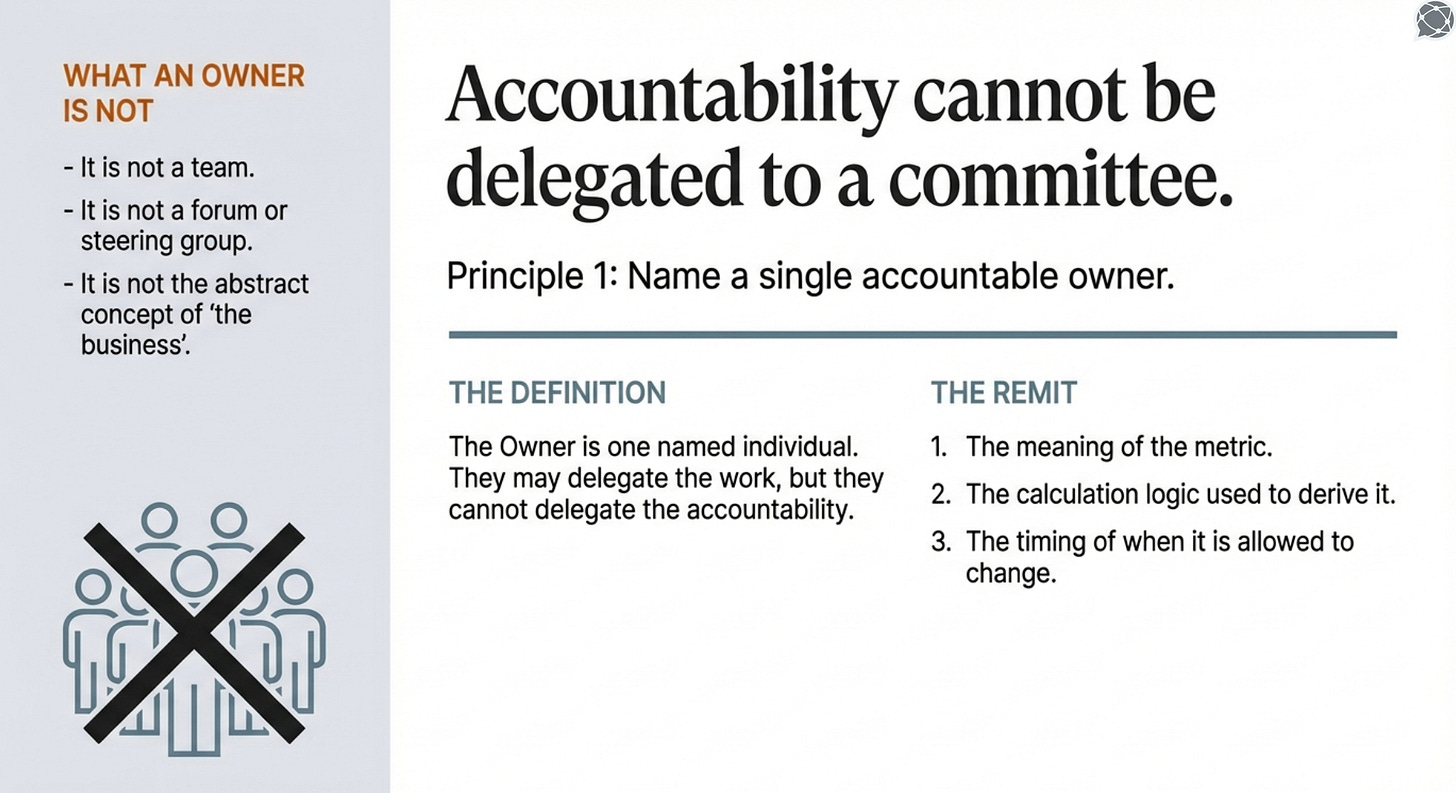

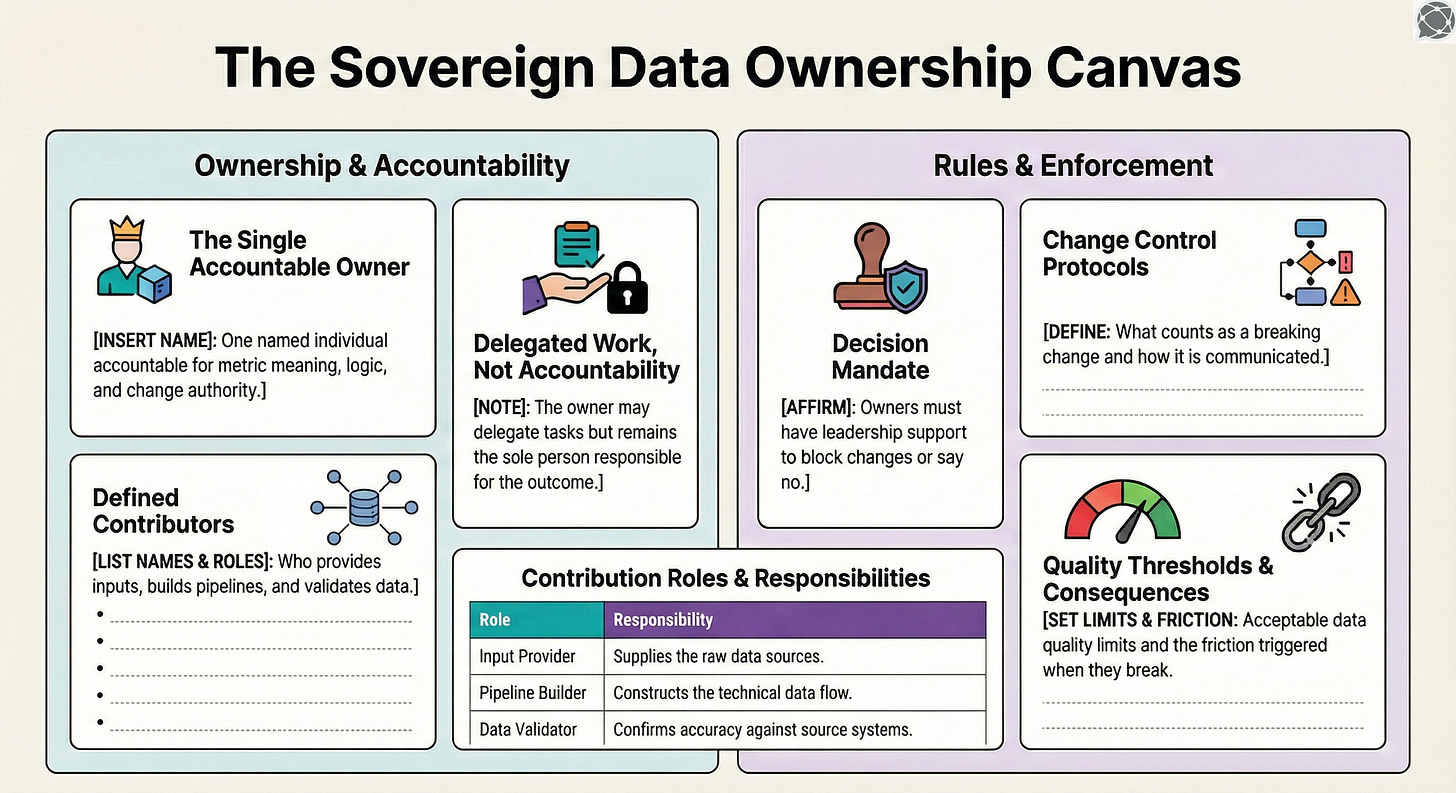

1. Name a single accountable owner

Not a team, a forum, or “the business”. One named person who is accountable for:

The meaning of the metric

The calculation logic

When it is allowed to change

This person may delegate work, but cannot delegate accountability.
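As an illustration only, the idea of one named owner can be written down as a small metric specification. The sketch below is not the author's template; the `MetricSpec` class, its field names, the `COLLECTIVE_LABELS` set, and the example values are all hypothetical. It simply refuses a metric whose owner is missing or is a group label rather than a person.

```python
from dataclasses import dataclass

# Hypothetical labels that name a group rather than a person.
COLLECTIVE_LABELS = {"the business", "data team", "steering group", "it"}


@dataclass(frozen=True)
class MetricSpec:
    name: str
    meaning: str           # what the metric means
    calculation: str       # how it is calculated
    change_rule: str       # when it is allowed to change
    owner: str             # exactly one named, accountable person
    delegates: tuple = ()  # people doing work on the owner's behalf

    def __post_init__(self):
        owner = self.owner.strip()
        if not owner:
            raise ValueError(f"{self.name}: an accountable owner is required")
        if owner.lower() in COLLECTIVE_LABELS:
            raise ValueError(
                f"{self.name}: owner must be a named person, not {owner!r}"
            )


# Example values are invented for illustration.
spec = MetricSpec(
    name="net_revenue",
    meaning="Recognised revenue after refunds and discounts",
    calculation="sum(invoice_amount) - sum(refunds) - sum(discounts)",
    change_rule="Changes take effect at quarter start, after owner sign-off",
    owner="Jane Doe",
    delegates=("Analytics engineering",),
)
```

Delegates appear in the record, but only `owner` is validated, which mirrors the point that work can be delegated while accountability cannot.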

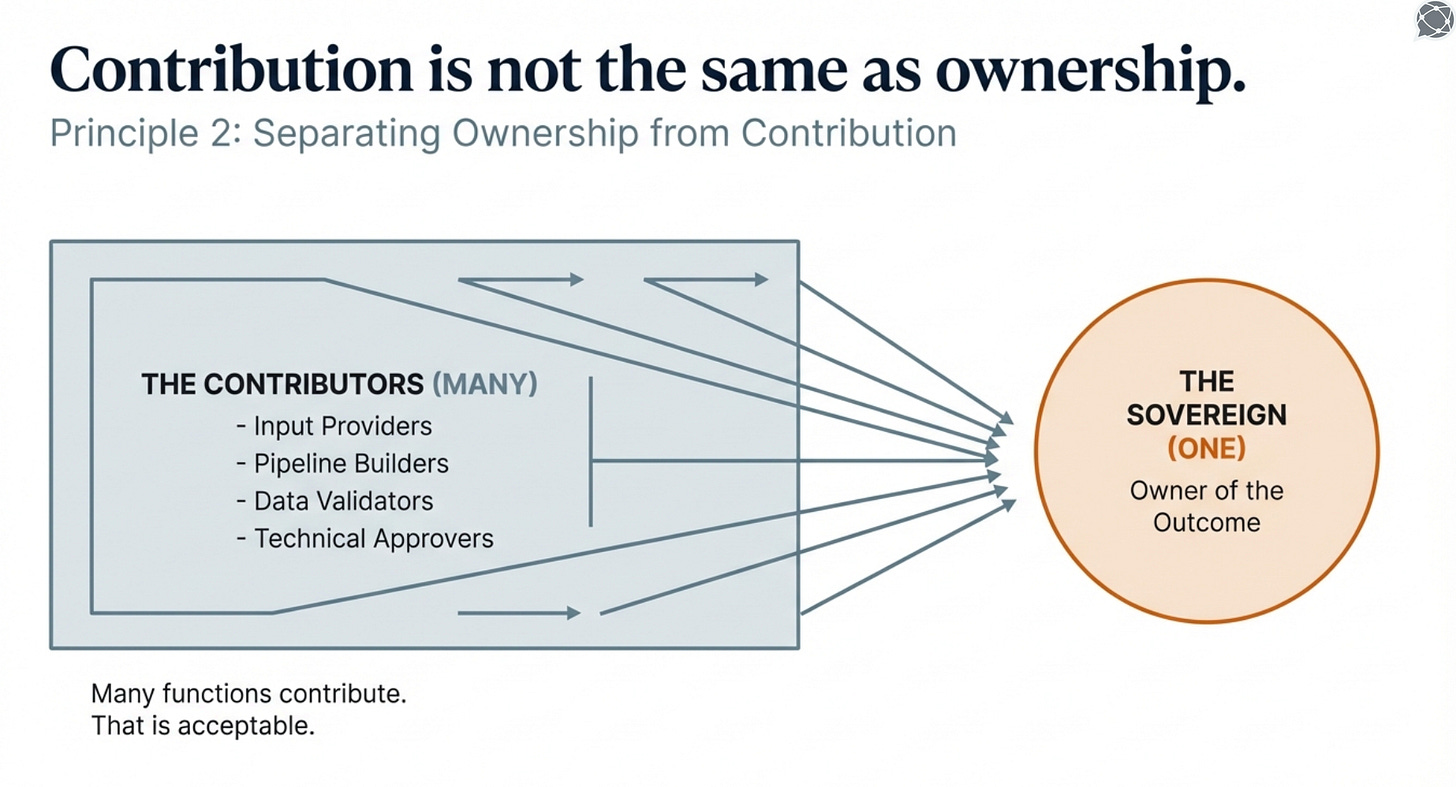

2. Separate ownership from contribution

Many functions will contribute to a metric. That’s fine. But contribution is not ownership. We need to make it explicit:

Who provides inputs

Who builds pipelines

Who validates data

Who approves changes

Only one person owns the outcome.
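One way to make the split between ownership and contribution explicit is to record role assignments per metric and check them. This is a minimal sketch under assumed names: the role labels in `ROLES` and the `check_roles` function are hypothetical, chosen to match the four contribution questions above plus a single owner.

```python
from collections import Counter

# Hypothetical role labels mirroring the questions above.
ROLES = {"owner", "input_provider", "pipeline_builder", "validator", "change_approver"}


def check_roles(assignments):
    """Return a list of problems; an empty list means roles are explicit.

    `assignments` is a list of (person_or_function, role) pairs.
    """
    problems = []
    counts = Counter(role for _, role in assignments)

    for role in sorted(set(counts) - ROLES):
        problems.append(f"unknown role: {role}")

    # Contribution can be shared; ownership cannot.
    if counts["owner"] != 1:
        problems.append(f"expected exactly 1 owner, found {counts['owner']}")

    for role in sorted(ROLES - {"owner"}):
        if counts[role] == 0:
            problems.append(f"no one assigned as {role}")

    return problems


assignments = [
    ("Jane Doe", "owner"),
    ("Sales operations", "input_provider"),
    ("Data platform", "pipeline_builder"),
    ("Analytics", "validator"),
    ("Jane Doe", "change_approver"),
]
print(check_roles(assignments))
```

Several functions may share a contributing role, but the check fails as soon as the owner count is anything other than one.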

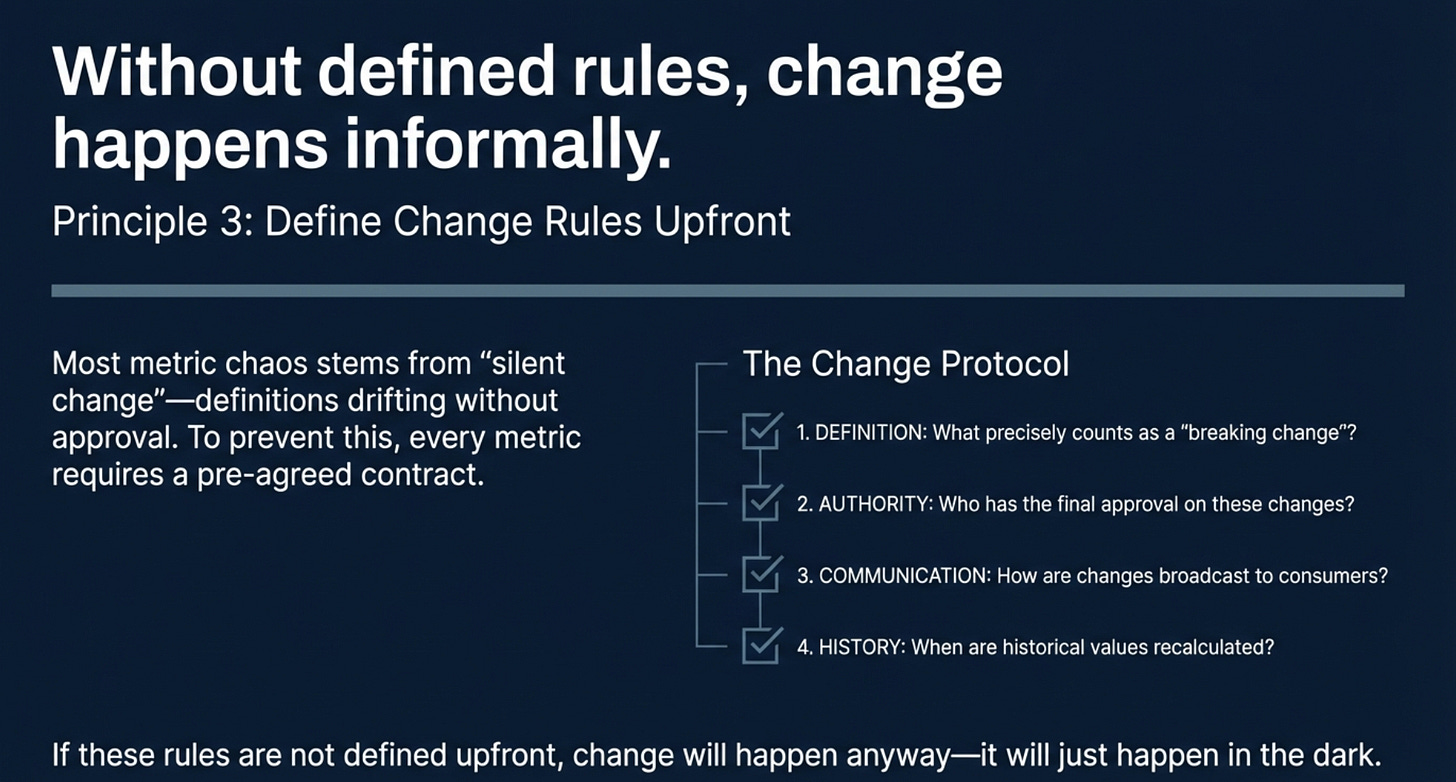

3. Define change rules upfront

Most metric chaos comes from silent change. Every metric needs clear answers to:

What counts as a breaking change

Who approves changes

How changes are communicated

When historical values are recalculated

If this isn’t defined, change will happen anyway. Just informally.
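The four questions above can be captured as a small policy object that every proposed change is checked against. The sketch below is one possible shape, not a prescribed one: `ChangePolicy`, `ChangeRequest`, `review`, and the example change kinds are hypothetical, and it assumes that every change needs sign-off from the named approver while only breaking changes trigger a historical recalculation.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ChangePolicy:
    breaking_kinds: frozenset         # what counts as a breaking change
    approver: str                     # who approves changes
    notify: tuple                     # who is told when a change goes through
    recalc_history_on_breaking: bool  # when historical values are recalculated


@dataclass(frozen=True)
class ChangeRequest:
    kind: str
    approved_by: Optional[str] = None


def review(policy, request):
    """Decide what happens to a proposed change under the policy."""
    breaking = request.kind in policy.breaking_kinds
    # Assumption: all changes need the approver's sign-off, breaking or not.
    allowed = request.approved_by == policy.approver
    return {
        "breaking": breaking,
        "allowed": allowed,
        "notify": policy.notify if allowed else (),
        "recalculate_history": (
            allowed and breaking and policy.recalc_history_on_breaking
        ),
    }


policy = ChangePolicy(
    breaking_kinds=frozenset({"definition", "source_system", "filter_logic"}),
    approver="Jane Doe",
    notify=("finance", "sales"),
    recalc_history_on_breaking=True,
)

silent = review(policy, ChangeRequest(kind="definition"))
signed_off = review(policy, ChangeRequest(kind="definition", approved_by="Jane Doe"))
```

Here `silent` comes back as not allowed, which is the point: a change without sign-off is visible as a rejected request instead of happening informally.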

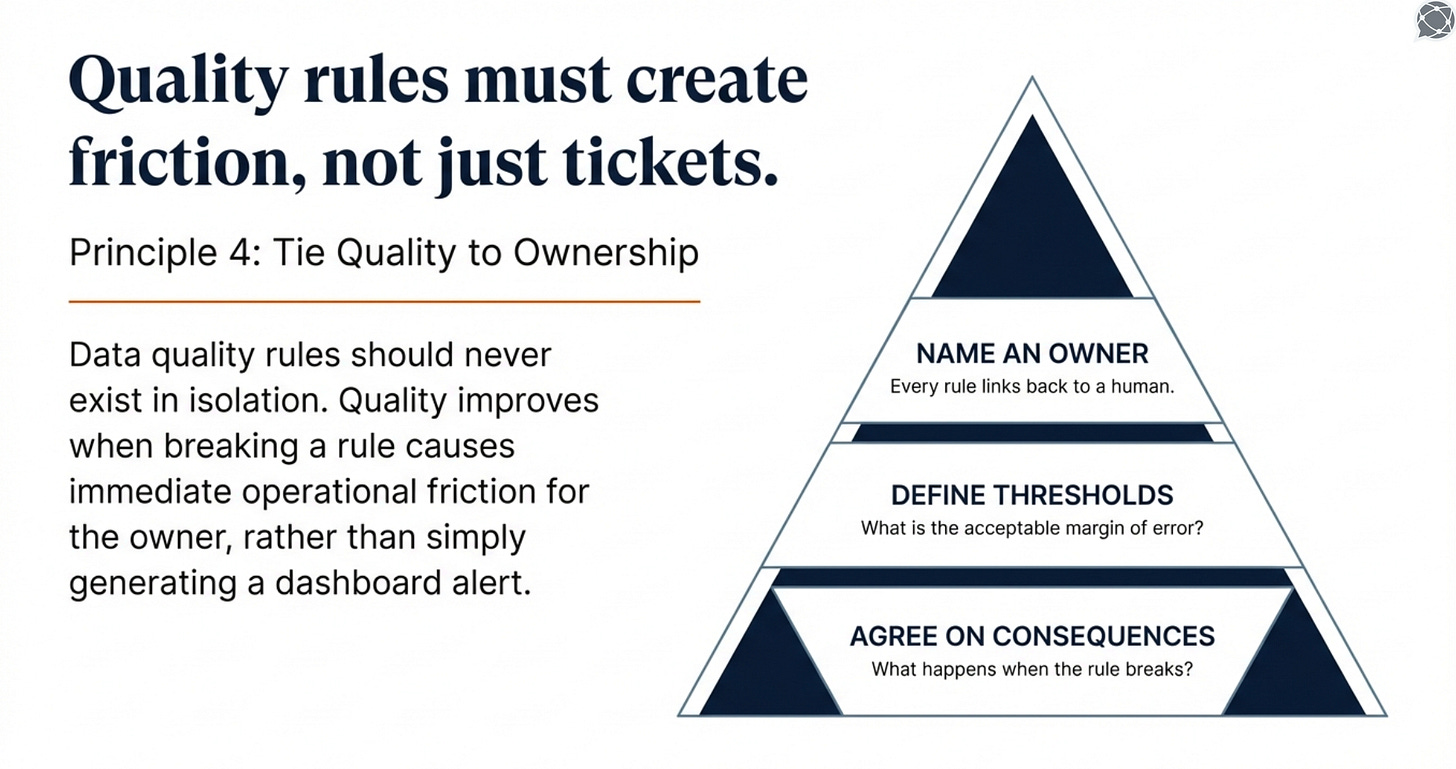

4. Tie data quality rules to ownership

Data quality rules should never exist in isolation. For each critical rule:

Name an owner

Define acceptable thresholds

Agree on consequences when it breaks

Quality improves when breaking the rule creates friction instead of tickets.
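To make "friction instead of tickets" concrete, a rule can carry its owner, its threshold, and its consequence, and stop publication when it breaks. The following is a rough sketch with hypothetical names (`QualityRule`, `QualityGateError`, `enforce`) and invented example rows; a real pipeline would wire the same idea into whatever orchestration it already uses.

```python
from dataclasses import dataclass
from typing import Callable


class QualityGateError(Exception):
    """Raised when a blocking rule exceeds its agreed threshold."""


@dataclass(frozen=True)
class QualityRule:
    name: str
    owner: str                     # the named person accountable for the rule
    max_failure_rate: float        # agreed acceptable threshold, 0.0 to 1.0
    check: Callable[[dict], bool]  # returns True when a row passes
    blocks_publish: bool           # agreed consequence when the rule breaks


def enforce(rule, rows):
    """Return the failure rate, or raise if a blocking rule is broken."""
    if not rows:
        return 0.0
    failures = sum(1 for row in rows if not rule.check(row))
    rate = failures / len(rows)
    if rate > rule.max_failure_rate and rule.blocks_publish:
        raise QualityGateError(
            f"{rule.name}: failure rate {rate:.0%} exceeds "
            f"{rule.max_failure_rate:.0%}; publication blocked until "
            f"{rule.owner} decides"
        )
    return rate


rows = [{"amount": 10}, {"amount": None}, {"amount": 5}, {"amount": 7}]
rule = QualityRule(
    name="amount_not_null",
    owner="Jane Doe",
    max_failure_rate=0.10,
    check=lambda row: row["amount"] is not None,
    blocks_publish=True,
)

try:
    enforce(rule, rows)
except QualityGateError as err:
    print(err)
```

The error names the owner rather than opening a ticket, so the broken rule lands with the person who can decide what happens next.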

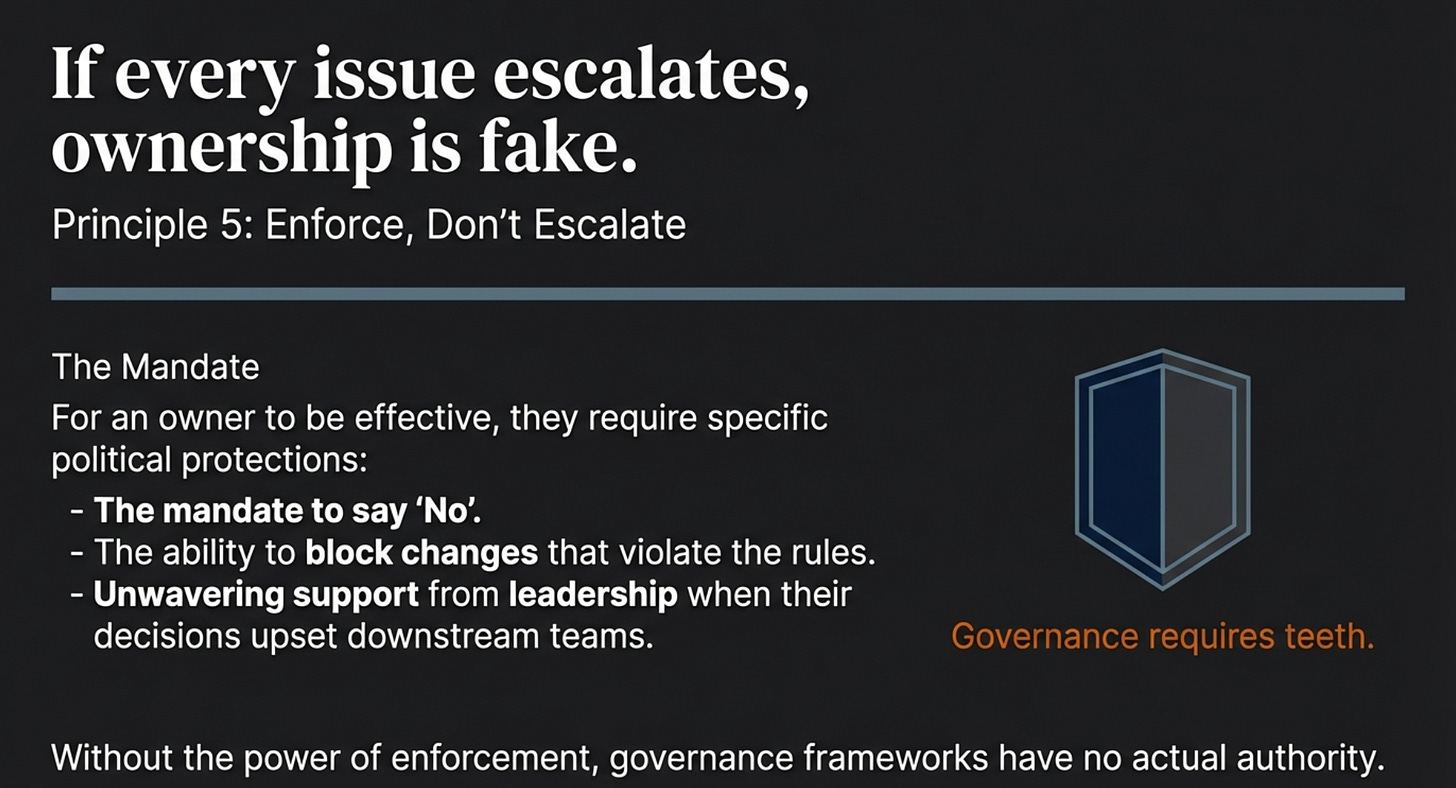

5. Enforce, don’t escalate

If every issue escalates to a steering group, ownership is fake. Owners need:

The mandate to say no

The ability to block changes

Support from leadership when decisions upset someone

Without enforcement, governance has no authority.

The Sovereign Data Ownership Canvas

What changes when this model is enforced

When ownership is explicit and enforced, metrics stop drifting, quality issues surface earlier, blame games decrease, fixes stick, and trust increases. None of this happens because the data became perfect, which it almost never is. It happens because accountability is clear.

The uncomfortable part

Most organisations avoid implementing such a model because it’s uncomfortable. It forces decisions instead of alignment, accountability instead of consensus, and clear trade-offs instead of vague agreements.

But avoiding discomfort is exactly what creates recurring data quality issues.

Final Note

Adapted from concepts shared by the author | Curated by Modern Data 101

If a metric matters, someone must be accountable for it. Until that happens, data quality initiatives won’t stick, BI tools won’t help, and AI will amplify the problem instead of solving it.

Until we have fixed the root of the problem and assigned an owner, nothing downstream really matters.

I’m collecting practical tools like this in my Substack, starting with a Metric Specification Template I actually use. If that’s useful, it lives here: link to metric specification template


Author Connect

Connect with John Wernfeldt on LinkedIn 💬 or dive into his work on Substack.

From MD101 team 🧡

🌎 Global Modern Data Report 2026

The Modern Data Report 2026 is a first-principles examination of why AI adoption stalls inside otherwise data-rich enterprises. Grounded in direct signals from practitioners and leaders, it exposes the structural gaps between data availability and decision activation.

With hundreds of datapoints from 500+ data leaders and experts from across 64 countries, this report reframes AI readiness away from models and tooling, and toward the conditions required and/or desired for reliable action.
