Could Data Products Save (Real) Money?

Value Evaluation Model, Calculation Functions, Product Remediation, and more!

Short Answer: Yes. But let’s take a closer look.

This piece is a community contribution from Francesco, an expert craftsman of efficient Data Architectures using various design patterns. He embraces new patterns, such as Data Products, Data Mesh, and Fabric, to capitalise on data value more effectively. We highly appreciate his contribution and readiness to share his knowledge with MD101.

We actively collaborate with data experts to bring the best resources to a 6000+ strong community of data practitioners. If you have something to say on Modern Data practices & innovations, feel free to reach out! Note: All submissions are vetted for quality & relevance. We keep it information-first and do not support any promotions, paid or otherwise!

It is important to remember that managing data as a product guarantees interoperability, facilitates data and code reuse, and promotes an "as a product" approach, which includes considering the profitability of our digital product.

The steps we will follow are:

Pre-requisites: Data Taxonomy and Selection of Drivers

Tuning: Classification by Functional Domains and Calculation Function

Monitoring & Remediation: Analysis of Behaviors and Trends, and Applicable Actions

While the 'data as a product' paradigm might theoretically assign absolute economic values to calculate the profitability of our digital product, this is quite complex (if not unachievable) because it requires complete synergy among the enterprise architecture layers. In this article, I will explore why this complexity need not hinder the initial purpose.

I. Pre-requisites

We will analyze all preparatory aspects, whether technological or methodological.

Data Taxonomy

Assuming a basic level of observability is in place (both for determining costs and for usage), the real differentiating requirement is methodological. It is crucial to have a domain-driven organization (not necessarily a mesh) and a taxonomy that can assign meaning to the various data products. This taxonomy should also be distributed across different data products (data contracts) with potential impacts on the data governance model (data product owner).

In this sense, it is strongly recommended to have a federated modeling service to manage and guarantee this within the data architecture (regardless of whether it is distributed or not).

This is necessary because we will use the single element of the taxonomy, i.e., the entity data (and not the single data product), as the calculation element.


Selection of Drivers

Secondly, it is necessary to select an evaluation model, for example, from this work:

From which we can consider some drivers:

Effort: Implementation cost, execution cost of pipelines & structures, the hourly cost of involved figures (data steward, data product owner), number of data products/output ports available;

Consumption: increased cloud consumption due to accesses, number of applications, number of accesses to data products, and related number of users;

Business Value: Categorization of the data entity and categorization of the applications using it.

Obviously, each business context might select different drivers, but these seem sufficiently agnostic to be considered universal.

It is important to emphasize that not all drivers are economically quantifiable, so it will be necessary to reason in terms of function points or correlations.

II. Classification of Domains

At this point, two actions are necessary:

Identification of Domains

This action is of fundamental importance because it will serve to identify the outliers (see below). In theory, the domains should come either from data governance or from the enterprise architecture group as instantiations of business processes.

Whichever choice is applied, it is important to reach the point where all data products have at least one business entity and are, therefore, allocable in a functional domain (for consumption data products, a series of scenarios dependent on the applied data governance model opens up).

Calculation Function

This activity is undoubtedly the most evident. After several months of simulations on real data, I concluded that there is no ideal mathematical formula; instead, we need to work incrementally until we achieve a distribution that looks like the following diagram:

Some rules of thumb adopted for verification are:

High-value entities should be records or related to the core business

All domain entities should be clusterable (at least 85% of the distribution)
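The 85% rule above can be checked with a minimal sketch like the one below. It assumes each entity is a point on an (effort, benefit) plane, where benefit stands for Consumption + Value, and it treats an entity as "clusterable" when it lies within a chosen radius of the domain centroid. The sample points, the `radius` parameter, and the function name are all hypothetical; the article does not prescribe a specific clustering method.

```python
from math import dist
from statistics import mean

# Hypothetical (effort, benefit) points for one domain; benefit = consumption + value.
points = [
    (1.0, 4.0), (1.2, 4.2), (0.9, 3.8), (1.1, 4.1),
    (1.0, 3.9), (1.3, 4.0), (6.0, 0.5),
]

def clusterable_share(pts, radius):
    """Share of points lying within `radius` of the domain centroid."""
    centroid = (mean(p[0] for p in pts), mean(p[1] for p in pts))
    inside = sum(1 for p in pts if dist(p, centroid) <= radius)
    return inside / len(pts)

share = clusterable_share(points, radius=1.5)  # radius is an assumed tuning value
meets_rule = share >= 0.85                     # the 85% rule of thumb
```

A mean-based centroid is sensitive to outliers, so a median-based center or a proper clustering algorithm may be a better fit on real data.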

At this point, you should be able to obtain a clustering like the following:

III. Monitoring and Remediation

To explain the benefits of the approaches, I provide some examples from the dataset I am using:

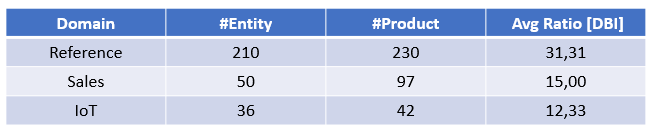

Here, Avg Ratio is the average of the ratio (Consumption + Value) : Effort, similar to the Data Benefit Index. The result is plausible, in my opinion: Reference data is usually more valuable than IoT data.
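As a rough illustration of the Avg Ratio described above, the sketch below averages the per-entity ratio (Consumption + Value) / Effort within each domain. The field names and sample scores are hypothetical, and reading "average ratio" as the mean of per-entity ratios (rather than a ratio of totals) is an assumption.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical sample scores; field names and numbers are illustrative only.
entities = [
    {"domain": "Reference", "effort": 2.0, "consumption": 3.0, "value": 5.0},
    {"domain": "Reference", "effort": 4.0, "consumption": 2.0, "value": 2.0},
    {"domain": "IoT", "effort": 5.0, "consumption": 1.0, "value": 1.5},
]

def avg_ratio_by_domain(rows):
    """Average (consumption + value) / effort per domain, skipping zero effort."""
    ratios = defaultdict(list)
    for row in rows:
        if row["effort"] > 0:  # avoid division by zero
            benefit = row["consumption"] + row["value"]
            ratios[row["domain"]].append(benefit / row["effort"])
    return {domain: mean(values) for domain, values in ratios.items()}

result = avg_ratio_by_domain(entities)
```

With these made-up numbers, the Reference domain scores higher than IoT, which mirrors the direction (not the magnitude) of the observation above.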

Monitoring & Remediation - First Run

The analyses below can be performed both during the setup phase and on a recurring basis (I will apply them only to the "Reference" domain).

Analysis of Unused Products

140 out of 230 products initially appeared unused (Consumption + Value equal to 0). Several causes for this phenomenon exist:

Ghost IT: The product is used but not tracked;

Enabling Entity: The entity is not directly used but serves other products, either as a technical object or as a model hierarchy root;

Outlier: Entities that are out of the confidence range. In our case, there were specifically two entities wrongly attributed to the domain that had anomalous behaviors.

Over-engineered Data Product(s): each product should have a shaped market and a consolidated user base. In data management, we could have multiple products that are over-engineered, and that could be shrunk/merged with others.
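A first triage of the apparently unused products could look like the sketch below. It applies the Consumption + Value = 0 test and separates enabling entities from candidates that need manual review (for untracked usage, outliers, or over-engineering). The record layout and the `feeds_other_products` flag are hypothetical; in practice that information would have to come from lineage or catalog metadata.

```python
# Hypothetical product records; names and flags are illustrative only.
products = [
    {"name": "customer_master", "consumption": 12, "value": 8, "feeds_other_products": True},
    {"name": "country_codes_tech", "consumption": 0, "value": 0, "feeds_other_products": True},
    {"name": "legacy_branch_copy", "consumption": 0, "value": 0, "feeds_other_products": False},
]

def triage_unused(rows):
    """Split apparently unused products into enabling entities and review candidates."""
    enabling, to_review = [], []
    for row in rows:
        if row["consumption"] + row["value"] != 0:
            continue  # product is used; nothing to triage
        if row["feeds_other_products"]:
            enabling.append(row["name"])   # unused directly, but serves other products
        else:
            to_review.append(row["name"])  # check for ghost IT, outliers, over-engineering
    return enabling, to_review

enabling, to_review = triage_unused(products)
```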

Analysis of Entities on Products

An analysis of product/entity assignments reveals that several entities are duplicated without a real reason other than the sedimentation of application layers. For these integrations, it is possible to consider the dismissal of duplicates or their integration into the correct entity (of the reference domain).
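The duplicate check described above can be sketched as a simple grouping of product/entity assignments. The tuples below are hypothetical sample data, and matching entities by exact name is an assumption; real duplicates may need semantic matching through the taxonomy.

```python
from collections import defaultdict

# Hypothetical (product, entity, owning domain) assignments.
assignments = [
    ("crm_customers", "Customer", "Reference"),
    ("billing_customers", "Customer", "Finance"),
    ("product_catalog", "Product", "Reference"),
]

def duplicated_entities(rows):
    """Return entities exposed by more than one product, with the products involved."""
    products_by_entity = defaultdict(list)
    for product, entity, _domain in rows:
        products_by_entity[entity].append(product)
    return {e: p for e, p in products_by_entity.items() if len(p) > 1}

duplicates = duplicated_entities(assignments)
```

Each entry in `duplicates` is a candidate for dismissal or for integration into the correct entity of the reference domain.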

Products Managed by Non-Master Domains

This is a very common phenomenon, although it is not relevant for a reference domain. In this case, it is also possible to consider reassignments and dismissals.

Applying these normalization actions leads to the following changes:

In detail, the benefits are due to the following actions:

#Entity: Entities are rationalized both through the dismissal of irrelevant ones and through consolidation into more relevant entities;

#Product: The reduction is mainly due to the merging of derived products into masters and a rationalization of output ports;

%Usage: Obviously increases to the maximum as unused objects are dismissed. It is important to note that many technical and service objects are not dismissed but "incorporated" into the relevant business products (thereby also contributing to the increase in unit cost);

Total Cost: Obviously reduced due mainly to the dismissal of duplicate and unusable structures;

Product Cost: Tends to increase both due to the added cost of enabling structures and the unification of multiple products.

Monitoring and Remediation Post Setup and Tuning

Following the initial run and the setup and tuning activities, it is necessary to consider how products and entities move within the diagram over time. Without proactive management, each entity could experience two seemingly opposite phenomena:

Enablement of New Accesses/Users: This increases both its value and partially its costs.

Increase in Operating and Management Costs: Due to growing data volume, tickets, and versioning/ports.

Both phenomena have a net result that shifts our product or data entity along the effort axis with a proportional decrease in generated value until it reaches the non-exercisable threshold.

This evolution could push our entity outside its domain cluster, which in turn affects the clustering itself.

This clustering approach allows evaluating not only the absolute worst-performing products but also enables the analysis of point decay (e.g., percentage) according to calculation functions that can be defined as needed (and which we won't elaborate on here).
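One possible decay function, offered only as a sketch, is the percentage drop of an entity's benefit/effort ratio between two reviews. The function name, the sample ratios, and the 20% alert threshold are assumptions made for illustration, not values from the dataset discussed here.

```python
def percentage_decay(previous_ratio, current_ratio):
    """Percentage drop of the benefit/effort ratio between two observations."""
    if previous_ratio <= 0:
        raise ValueError("previous_ratio must be positive")
    return (previous_ratio - current_ratio) / previous_ratio * 100

# Hypothetical ratios per entity at two review dates: (before, after).
history = {"Customer": (4.0, 3.0), "Branch": (2.0, 1.9)}
DECAY_ALERT_PCT = 20.0  # assumed threshold, to be tuned per domain

flagged = [
    name
    for name, (before, after) in history.items()
    if percentage_decay(before, after) >= DECAY_ALERT_PCT
]
```

Entities in `flagged` are decaying faster than the chosen threshold and would be the first candidates for the corrective actions discussed next.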

Two Categories of Corrective Solutions

Actions Aimed at Improving Relevance

Such as creating new output ports or unifying multiple products (which, although increasing unit cost, reduces overall cost in the larger economy).

Optimization Actions

These can include decommissioning (e.g., archiving historical data), re-architecting or decommissioning unnecessary functionalities (e.g., input and output ports or components of data contracts), or reducing SLAs.

These two contributions have a positive vector summation that improves the entity's performance and, thus, its intrinsic profitability.

📝 Related Reads

Versioning, Cataloging, and Decommissioning Data Products

Conclusion and Next Steps

The approach is certainly enabling as it allows for rationalization of the information assets both for the quantifiable component and for the intangible one (product quality, clear ownership).

However, it remains to be evaluated how to assign a business benefit to each initiative (as theorized in enterprise architecture frameworks) and how to extend the model to products for which improvement actions cannot be applied (external products or those governed by regulatory constraints).

Suggested Further Monitoring Actions

Periodic Review of Data Taxonomy and Driver Selection: Regularly update the taxonomy and evaluation drivers to reflect changes in business processes and data usage patterns.

More on Drivers/Metrics & Taxonomy/Semantics

Stakeholder Feedback Loop: Create a feedback loop with key stakeholders, including data stewards and product owners, to gather insights and make necessary adjustments to the data product management strategy.

More on Feedback Loops

Data Democratization and Integration with a Marketplace: Facilitate wider access to data by democratizing it and integrating it with a data marketplace. This allows for better and more fitting utilization of data products, encourages innovation, and provides opportunities for external users to leverage the data, thus potentially creating new revenue streams.

More on Marketplaces (Data Products)

🔏 Author Connect

Find me on LinkedIn

🧺 More from Francesco!

Medallion Approach to Data Products: Beyond the Promised "Gold"


Bringing Home Your Very First Data Product | Issue #49
