Operationalizing Data Product Delivery in the Data Ecosystem

Defining the Foundations to Underpin Data Products and the Operational Processes to Build Them

This piece is a community contribution from Dylan Anderson, a strategy consultant and data storyteller who excels at bridging the gap between data and business strategy. With a passion for translating complex data into actionable insights, Dylan shares his expertise on LinkedIn, on his blog The Data Ecosystem, and on Medium. We’re thrilled to feature his unique insights!

We actively collaborate with data experts to bring the best resources to a 9000+ strong community of data practitioners. If you have something to say on Modern Data practices & innovations, feel free to reach out! Note: All submissions are vetted for quality & relevance. We keep it information-first and do not support any promotions, paid or otherwise!

A lot of people talk about the future of data being product-focused.

This means treating data like a product—aligning it with business use cases, making it accessible, promoting usability, ensuring security, and all the other things people mention when they talk about product management.

These qualities matter whether you define a data product as an analytical solution (the classic definition) or as an accessible dataset (the Data Mesh approach). For the purposes of this article, we will take the classic approach; the reasoning is explained in another article.

But the problem is that data product delivery is hard. Take the recent AI boom. Every large organisation with some budget set up a team to deliver AI products, thinking they would be the next OpenAI. A year later, many of those teams disbanded (I know a few), a ton of the projects wound down, and most products didn’t demonstrate the value that was promised or expected.

The problem isn’t constrained to AI, either. As a consultant, I see a good chunk of my firm’s revenue come from building data products for clients who can’t build them themselves. These range from dashboards and reporting tools to machine learning algorithms and other analytical solutions.

What is the reason for this inability to deliver internally?

Companies haven’t figured out the right delivery operating model. Their waterfall process doesn’t fit the business needs, and shouting agile over and over again without implementing it properly doesn’t work. Moreover, they lack the foundational elements that data products need to be built on.

So today, we are going to break it down and explain how to build production-ready data products with the right operating model and delivery process. Buckle up, data friends!

What Is a Data Product?

Before diving in, we must briefly explain what we are building.

As I’ve mentioned, data is a dynamic ecosystem in which each domain contributes to, and is influenced by, the whole. This ecosystem contains different types of participants and stakeholders, marked by complex relationships with both data and each other.

That is why you have to take a holistic approach that encapsulates both the data landscape and the larger business context. In its purest sense, then, a data product must be a tool or solution from which end business users can draw insights and make decisions.

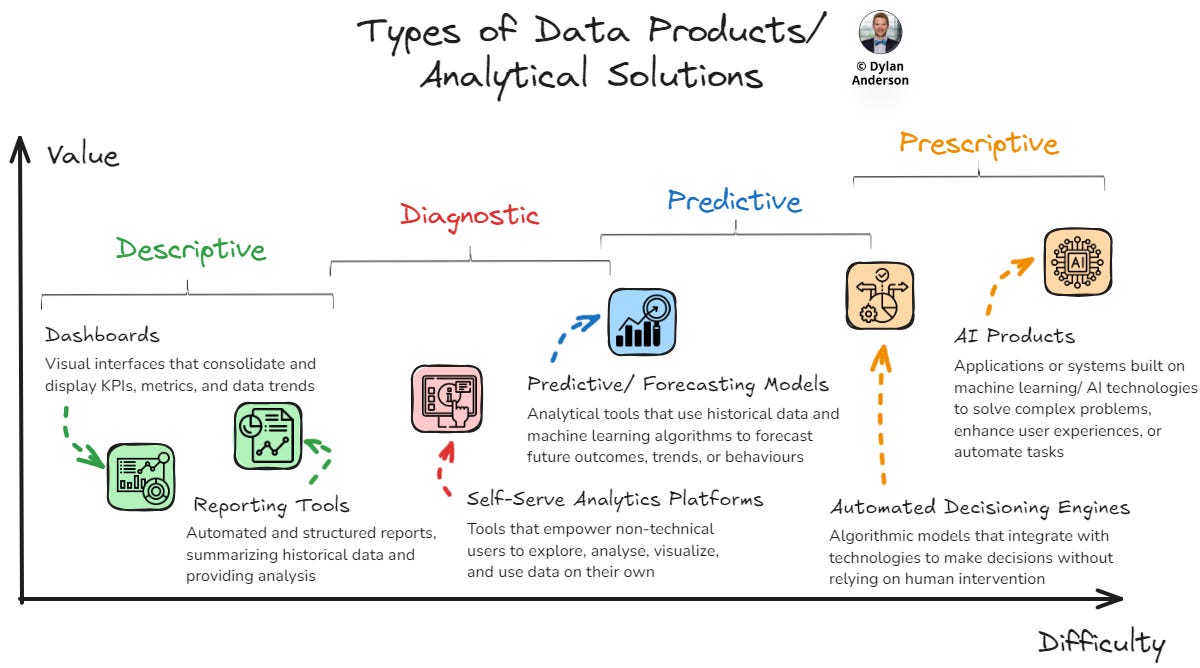

To explain this, I have defined the six most popular categories of data products:

Dashboards

Visual interfaces that consolidate and display key performance indicators (KPIs), metrics, and data trends in real time.

Reporting Tools

Technology that generates structured reports, often summarising historical data and providing analysis based on business requirements.

Self-Service Analytics Platforms

Tools that empower non-technical users to explore, analyse, visualise, and use data independently.

Predictive Models

Analytical tools that use historical data and machine learning algorithms to forecast future outcomes, trends, or behaviours.

Automated Decisioning Engines

Often leveraging predictive models, these algorithmic solutions integrate with other systems to make decisions automatically, without relying on human intervention.

AI Products

Applications or systems built on machine learning and artificial intelligence technologies (e.g., natural language processing, computer vision, large language models) to solve complex problems, enhance user experiences, or automate tasks. These products learn from data and adapt to new information to provide better results for the user.

Contrary to the data-as-a-product perspective, I view these solutions as the products and the data that feeds them as raw materials. These raw materials are processed and curated to enhance the value of the end products that business stakeholders use.

📝 Editor’s Note

To avoid conceptual silos (a real hazard given the information overload of our times), we wanted to connect the dots with our earlier community contributions by adding some context.

A data product differs from traditionally consumed data because it is a vertical slice of the data infrastructure, cutting across the stack from ingestion points and infrastructure resources to the final consumption ports. This design promotes interoperability and reusability. And as Dylan highlighted, raw data is akin to raw material for this product.

Example: a dashboard that is a data product is a dashboard coupled with:

(1) Infrastructure

(2) Code that runs the dashboard

(3) Ready-for-consumption data + metadata

(4) Output ports (where the dashboard itself is an output port)
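To make the four components concrete, here is a minimal Python sketch that models a data product as a vertical slice and flags any component that is still missing. The class names, fields, and example values (`DataProduct`, `OutputPort`, `weekly-sales-dashboard`, and so on) are hypothetical, chosen for illustration rather than taken from any specific platform.

```python
from dataclasses import dataclass, field


@dataclass
class OutputPort:
    """A consumption endpoint, e.g. the dashboard itself or an export API."""
    name: str
    kind: str  # illustrative values: "dashboard", "api", "file"


@dataclass
class DataProduct:
    """Hypothetical model of a data product as a vertical slice of the stack."""
    name: str
    infrastructure: list[str]             # (1) infra resources the product runs on
    code: list[str]                       # (2) code that runs the product
    datasets: dict[str, dict[str, str]]   # (3) consumable data -> its metadata
    output_ports: list[OutputPort] = field(default_factory=list)  # (4)

    def missing_components(self) -> list[str]:
        """Return the names of any of the four components that are still empty."""
        checks = {
            "infrastructure": self.infrastructure,
            "code": self.code,
            "data + metadata": self.datasets,
            "output ports": self.output_ports,
        }
        return [name for name, value in checks.items() if not value]


sales_dashboard = DataProduct(
    name="weekly-sales-dashboard",
    infrastructure=["warehouse", "bi-server"],
    code=["sales_model.sql", "dashboard_config.json"],
    datasets={"fct_weekly_sales": {"owner": "sales-analytics", "refresh": "daily"}},
    output_ports=[OutputPort(name="sales-dashboard", kind="dashboard")],
)
print(sales_dashboard.missing_components())  # []
```

The point of the check is that a dashboard on its own would fail it; it only qualifies once infrastructure, code, data with metadata, and output ports are all accounted for.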


Foundational Data Product Components

Now that we have defined what data products are, we can go build them, right?

Most people would rightly say: “No, you can’t.” Unfortunately, those same people would do it anyway because leadership wants to “prove value” and “become data-driven.”

So, instead of jumping straight to developing data products, teams need to ensure the foundations are in place to build in a scalable and impactful way.

When talking about data product delivery, there are four foundational capabilities that you should have in place prior to the design, build and production phases:

Strategic Alignment

The first step is understanding the broader business goals, the data strategy that underpins them, and the needs of your business stakeholders. Interviewing and running workshops with business leaders and stakeholders from the outset ensures the product will be strategically aligned. This doesn’t end with the discovery phase; book check-ins and updates with these stakeholders to facilitate buy-in and user acceptance.


Infrastructure, Architecture & Engineering

The next step is the foundational tooling below the data product. Teams need to consider four things in sequence. First, what is the overall enterprise & technology architecture within the organisation? Hopefully, the organisation has built its technology stack strategically and has a clear data flow from source to consumption, with the right tooling to deliver against the business requirements.

Second, build some semblance of a conceptual, logical and physical data model connected to the business model or the most pertinent business processes for the identified data products…

Third, think about the solutions architecture, which translates business requirements into technical specifications and functional requirements, specifically how the data product design and build will align with the organisation’s infrastructure.

Fourth, you have the engineering. Leveraging the designs from the data modelling and solutions architecture, the engineering team should be able to design and build high-quality data assets that scale with the data product’s requirements.


Data & Product Governance

Governance ensures that your data and underlying products are built with quality, security, scalability, and usability in mind. Frameworks should outline ownership for products, linking those to relevant data owners & stewards. Aligning this ownership with the overall data model and solutions architecture helps create transparency in the data lineage mapping and provides the basis for proper documentation.


Data Management

With the governance established, data management is required to maintain the quality of data feeding into the product. A lot can fall into this bucket (especially because data management is such an ambiguous term).

In this context, though, it should focus on master data management, data quality standardisation, and observability. Without this component, you will get the classic “garbage in, garbage out” result.


With the foundations laid, it is time to build the functional and valuable data products the analytics and business teams need!

Data Product Operational Delivery Model

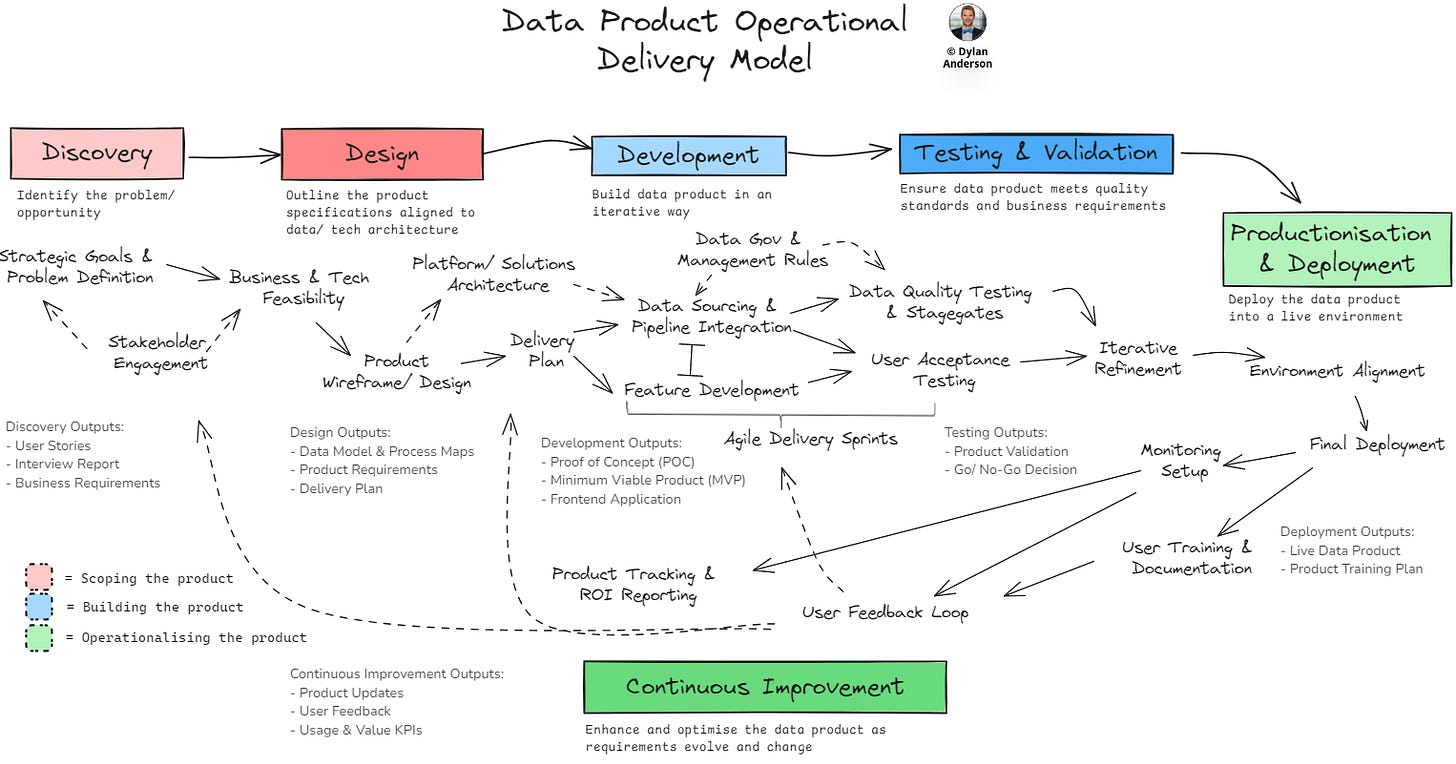

So, with a Data Product properly defined and the foundational components in place, it’s time to dive into the Data Product Operational Delivery Model.

This is a high-level view of data product delivery and may leave out some of the finer details, but it addresses the primary considerations of a best-practice delivery approach (and trust me, I’ve overseen the delivery of quite a few products).

I have broken the operational model into three distinct phases with two steps each, giving six steps in total, each with multiple activities and deliverables:

The first phase is Scoping the Data Product. This involves defining the why and how behind the data product. Before going off and building the thing, teams need to discover the problem/ opportunity and scope out the design of the data product to add value to the business.

Discovery

Understand the problem this data product is trying to solve or the opportunity it presents to the broader business.

Every data product needs to start with the business's Strategic Goals and the Problem Definition of what the product solves. The best reference point for this is an existing Data Strategy or the business/ team goals that help dictate why the product needs to be built.

One of the best ways to ascertain this is to conduct Stakeholder Engagement activities like interviews, requirements workshops or primary research (e.g., user testing, process mapping, etc.). Summarising the insights from this exercise provides the basis for what the product needs to do and how it might function.

These insights from the strategy and stakeholder consultation feed into determining the Business & Technology Feasibility. This is where the product delivery team determines the priority and potential value of the data product, contributes its expertise on the effort required to build it, and decides whether it is feasible to begin the design.
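One common way to turn this assessment into a decision is a simple value-versus-effort score. The sketch below assumes 1-5 scores gathered from stakeholders and the delivery team, plus two hypothetical yes/no feasibility checks; it is an illustrative heuristic, not a prescribed scoring model.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    value: int            # 1-5, estimated business value from stakeholder input
    effort: int           # 1-5, delivery team's estimate of build effort
    data_available: bool  # hypothetical technology-feasibility check
    sponsor: bool         # hypothetical business-feasibility check


def prioritise(candidates: list[Candidate]) -> list[tuple[str, float]]:
    """Drop infeasible candidates, then rank the rest by value per unit of effort."""
    feasible = [c for c in candidates if c.data_available and c.sponsor]
    scored = [(c.name, round(c.value / c.effort, 2)) for c in feasible]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


backlog = [
    Candidate("churn-model", value=5, effort=4, data_available=True, sponsor=True),
    Candidate("ops-dashboard", value=3, effort=1, data_available=True, sponsor=True),
    Candidate("genai-assistant", value=4, effort=5, data_available=False, sponsor=True),
]
print(prioritise(backlog))  # [('ops-dashboard', 3.0), ('churn-model', 1.25)]
```

The scores are judgement calls, so the ranking is a starting point for the prioritisation conversation rather than a replacement for it.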

Deliverables

Business Requirements – An understanding of what the data product needs to deliver to enable business value and what KPIs it will measure (or be measured against)

Interview Report – Insights from stakeholder interviews into their current business needs, pain points in existing processes, and potential solutions they have identified

User Stories – Captured requirements in stories demonstrating how business and product users will interact with and use the product

Design

Turn insights from the Discovery stage into requirements and design documentation to provide clear guidance for product delivery.

Within the design phase, the product team should continue adding to the Business & Technology Feasibility with non-functional and functional requirements. This will also feed into an initial Product Wireframe/ Design that provides a view of how the product should look, what it does, what data would feed into it, the relevant KPIs it answers/ displays, etc. Mapping and verifying the design with end users is crucial. Still, it is also essential not to over-engineer this phase and to ensure feedback is constructive rather than debilitating.

Next, aligning the Data Product with the organisational data model and Platform/ Solution Architecture is crucial. Ensuring the product is built in a way that is consistent with other products and the overall platform enables efficiencies, scalability, and interoperability.

With the design foundations in place, the next step is to create a realistic Delivery Plan. Consider the optimal Delivery Model (how teams are set up to deliver initiatives and what approach they take) and how it can be aligned to this product’s development. Understand the Workflow and Delivery Processes in place so the team can properly collaborate with all necessary stakeholders. Reporting Lines and the organisational structure should enable this understanding across teams, helping determine who needs to be involved and the leadership required to guide the delivery.

Deliverables

Data Model & Process Maps – Schemas and process maps to show how data fits into the business process

Product Requirements – Technical and data requirements of the product, linking the business needs with the actual data delivery work

Delivery Plan – A detailed plan on how the dev team will build and test the product, including involved stakeholders, experts, governance and timelines

📝 Related Reads

Validating Data Product Prototype/Design

End Products of the Design Stage

How to Build Data Products - Design | Part 1/4

The second phase is Building the Data Product. This should be done iteratively, allowing for business stakeholder feedback. At the same time, it needs to leverage existing foundations, with testing and validation playing a key role before rushing to production.

Development

Use validated designs to iteratively develop the data product (both the back and front ends), building in sprints to ensure alignment with users and relevant stakeholders.

While many companies talk about building in an Agile manner, genuine agile delivery is much more complicated. The Agile Delivery Sprints start with the Delivery Plan, constructed during the design phase. Designed in 1-2 week sprints, agile delivery means taking a user-centric approach (e.g., user stories, user testing & feedback) with flexibility in mind, allowing teams to reprioritise based on updated requirements from the business/ users.

Agile delivery is led by a scrum master or delivery lead who defines clear success metrics, plans agile ceremonies (sprint planning, standups, reviews, retrospectives), and leverages a cross-functional team to bring the right expertise to the data product development.

The first two development activities within the Agile Delivery Sprints are Data Sourcing & Pipeline Integration (the backend) and Feature Development (the frontend).

First, on the backend, data engineers can build the pipelines to connect the primary data storage solution and the proposed frontend solution. Engineers should consult any Data Governance & Management experts or guidance to ensure quality standards are set and maintained within the ingestion process.

Next, on the front end, analysts should begin using the user stories, interview report, and product requirements/ wireframe to build the required features that teams will interact with. Incremental builds and check-ins of the features are important here to ensure the data product will be used, that users are bought into the process/ end solution, and that the data analysts are sharing their expertise with users on why things are being built in a certain way.

In true agile fashion, both the backend and frontend teams should collaborate with one another to ensure the two workstreams are done in an aligned manner.

Deliverables

Proof of Concept (POC) – Preliminary version of the product solution to demonstrate its feasibility and potential. This version needs to demonstrate value to warrant further investment.

Minimum Viable Product (MVP) – Basic version of the data product that includes the core features. Allows users to provide feedback for the dev team to iterate and improve upon.

Frontend Application – A user-facing part of the data product, providing a more tangible solution for feedback. This would be included in the POC and MVP development.

📝 Related Reads

End Products of the Develop Stage

How to Build Data Products - Develop | Part 2/4

Testing & Validation

Ensuring the data that feeds into the product meets quality requirements, while confirming that the frontend meets business needs.

This whole step still falls within the Agile Delivery Sprints, with the main delivery team verifying that what is being built works as designed.

Like development, there are two sides to the testing and validation. Data Quality Testing & Stagegate Implementation should occur and align with the data sourcing and pipeline build. Continuous Integration and Continuous Deployment (CI/CD) approaches will ensure pipeline development has automated processes to detect code issues. This should align with quality standards set by Data Governance or Management teams. Data quality stages within the data lifecycle can also be set up to verify that the data running through the pipelines to the end product meets requirements.
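As an illustration of a data quality stage-gate, the sketch below runs three basic checks (completeness, key uniqueness, and value ranges) on a batch of records and only allows promotion if all of them pass. The function names, columns, and bounds are hypothetical; in practice teams often rely on dedicated tooling such as dbt tests or Great Expectations, and wire a failing gate into CI/CD so the pipeline stops.

```python
from dataclasses import dataclass


@dataclass
class CheckResult:
    name: str
    passed: bool
    detail: str


def run_stage_gate(rows: list[dict], key: str, required: list[str],
                   ranges: dict[str, tuple[float, float]]) -> list[CheckResult]:
    """Run simple data quality checks on a batch before it moves to the next stage."""
    results = []

    # Completeness: required fields must be present and non-null.
    missing = sum(1 for r in rows for col in required if r.get(col) is None)
    results.append(CheckResult("completeness", missing == 0, f"{missing} null values"))

    # Uniqueness: the business key must not repeat within the batch.
    keys = [r.get(key) for r in rows]
    dupes = len(keys) - len(set(keys))
    results.append(CheckResult("uniqueness", dupes == 0, f"{dupes} duplicate keys"))

    # Validity: numeric fields must fall inside the agreed bounds.
    for col, (low, high) in ranges.items():
        bad = sum(1 for r in rows
                  if r.get(col) is not None and not low <= r[col] <= high)
        results.append(CheckResult(f"range:{col}", bad == 0, f"{bad} out of range"))

    return results


def gate_passes(results: list[CheckResult]) -> bool:
    """The gate only opens when every check has passed."""
    return all(r.passed for r in results)


batch = [
    {"order_id": 1, "amount": 120.0, "region": "EU"},
    {"order_id": 2, "amount": -5.0, "region": "US"},
    {"order_id": 2, "amount": 40.0, "region": None},
]
results = run_stage_gate(batch, key="order_id", required=["order_id", "region"],
                         ranges={"amount": (0, 10_000)})
for r in results:
    print(r.name, "PASS" if r.passed else "FAIL", r.detail)
print("promote batch:", gate_passes(results))
```

In a CI/CD job, a failing gate would typically exit with a non-zero status so the deployment step never runs.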

The other side of this step is User Acceptance Testing, in which analysts and business stakeholders confirm the product meets their needs. This should be done at the end of each sprint, with workshops to gather ideas on how to improve usability.

Iterative Refinement is the culmination of testing. After collecting feedback, the team should refine and improve the product. In reality, this is an ongoing activity that should happen throughout the Agile Delivery Sprints.

Deliverables

Product Validation – A product that has been tested by users, with detailed specifications on how it has been tested and improved upon

Go/ No-Go Decision – Leadership decision about whether the product is ready for production based on UAT results and other feedback.
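The Go/No-Go call remains a leadership judgement, but summarising UAT results consistently makes it easier to reach. This sketch assumes each test case is recorded with a `status` and a `severity`, and applies a hypothetical rule: no open critical failures and a pass rate of at least 90%.

```python
from collections import Counter


def go_no_go(uat_cases: list[dict], min_pass_rate: float = 0.9) -> tuple[str, str]:
    """Summarise UAT results into a recommendation to inform the leadership decision."""
    outcomes = Counter(case["status"] for case in uat_cases)
    total = len(uat_cases)
    pass_rate = outcomes["pass"] / total if total else 0.0
    open_critical = sum(1 for c in uat_cases
                        if c["status"] == "fail" and c["severity"] == "critical")

    # Any open critical failure blocks go-live regardless of the pass rate.
    if open_critical:
        return "NO-GO", f"{open_critical} critical failure(s) still open"
    if pass_rate < min_pass_rate:
        return "NO-GO", f"pass rate {pass_rate:.0%} is below {min_pass_rate:.0%}"
    return "GO", f"pass rate {pass_rate:.0%}, no open critical failures"


cases = [{"status": "pass", "severity": "minor"}] * 9 + [{"status": "fail", "severity": "minor"}]
print(go_no_go(cases))  # ('GO', 'pass rate 90%, no open critical failures')
```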

The third phase is Operationalising & Optimising the Data Product. This entails careful monitoring of the product launch to prevent last-minute glitches or problems. Then the team needs to find out whether users know how to use the data product and whether it is delivering against stated goals, which helps inform further improvement.

Productisation & Deployment

Deploying the data product into a live environment ready to be used by end users

In preparation for go-live, the team should ensure Environment Alignment. This entails activities to match the development/ testing environment with the production environment and should include:

Configuring production servers and databases

Setting up necessary software and dependencies

Granting the right access controls

Creating security rules and measures

Verifying integrations and connections work in the production environment
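Environment alignment is easier to verify when configuration is compared mechanically. The sketch below diffs two flat configuration dictionaries and skips settings that are expected to differ, such as hostnames. The keys and values are hypothetical; real setups would typically pull them from infrastructure-as-code definitions or dependency lockfiles.

```python
def environment_drift(dev: dict, prod: dict,
                      expected_differences: frozenset = frozenset()) -> dict:
    """Return settings that differ between two environments, skipping expected ones."""
    drift = {}
    for key in sorted(dev.keys() | prod.keys()):
        if key in expected_differences:
            continue  # e.g. hostnames are supposed to differ between environments
        if dev.get(key) != prod.get(key):
            # None stands for "not set" (a key explicitly set to None looks the same).
            drift[key] = (dev.get(key), prod.get(key))
    return drift


dev_env = {
    "python_version": "3.11",
    "pandas_version": "2.2.2",
    "db_host": "dev-db.internal",
    "reader_role": "analysts",
    "ssl_required": True,
}
prod_env = {
    "python_version": "3.11",
    "pandas_version": "2.1.0",
    "db_host": "prod-db.internal",
    "ssl_required": True,
}
drift = environment_drift(dev_env, prod_env, expected_differences=frozenset({"db_host"}))
for setting, (dev_value, prod_value) in drift.items():
    print(f"{setting}: dev={dev_value!r} prod={prod_value!r}")
```

Here the diff surfaces a dependency version mismatch and a missing access role in production, two of the items on the checklist above.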

Finally, it is time for the Final Deployment, moving the data product to the production environment. Before pressing the go button, the dev team should do any final checks and validations. If necessary, a phased rollout might also be considered, especially for more complex launches. Teams might also include a rollback plan in case of unexpected launch issues. Oh, and don’t launch on a Friday.
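A phased rollout with a rollback path can be sketched as deterministic user bucketing plus a staged schedule. This assumes rollout stages of 5%, 25%, 50%, and 100% and a 2% error-rate rollback threshold, both purely illustrative. Hashing the user ID (here with SHA-256 from Python's standard `hashlib`) keeps each user in the same bucket, so anyone who gets access at 25% keeps it at 50%.

```python
import hashlib


def rollout_bucket(user_id: str) -> int:
    """Map a user to a stable bucket from 0 to 99 using a hash of their ID."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def has_access(user_id: str, rollout_percent: int) -> bool:
    """Users whose bucket is below the current rollout percentage see the new product."""
    return rollout_bucket(user_id) < rollout_percent


def next_stage(current_percent: int, error_rate: float,
               max_error_rate: float = 0.02,
               stages: tuple[int, ...] = (5, 25, 50, 100)) -> int:
    """Advance to the next rollout stage, or roll back to 0% if errors exceed the limit."""
    if error_rate > max_error_rate:
        return 0  # rollback: no users are routed to the new version
    later = [s for s in stages if s > current_percent]
    return later[0] if later else current_percent


users = [f"user-{i}" for i in range(1000)]
stage = next_stage(current_percent=5, error_rate=0.004)
exposed = sum(has_access(u, stage) for u in users)
print(f"stage {stage}%: {exposed} of {len(users)} users see the new product")
```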

Monitoring Setup provides the guidelines and tooling to understand the data product's performance, usage, and issues. Error tracking, logging, usage dashboards, critical issue alerts, and performance thresholds might be considered within this.
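Performance thresholds and critical issue alerts can be expressed as data and evaluated against the latest metrics. The metric names and limits below are hypothetical; a real setup would feed this from the product's logging and monitoring tooling and route the alerts to a support channel.

```python
from dataclasses import dataclass


@dataclass
class Threshold:
    metric: str
    limit: float
    severity: str                # illustrative levels: "warning" or "critical"
    higher_is_worse: bool = True


def evaluate(metrics: dict[str, float], thresholds: list[Threshold]) -> list[str]:
    """Compare the latest metric readings against thresholds and return alert messages."""
    alerts = []
    for t in thresholds:
        value = metrics.get(t.metric)
        if value is None:
            # A silent metric is itself a problem worth alerting on.
            alerts.append(f"[critical] {t.metric}: no data received")
            continue
        breached = value > t.limit if t.higher_is_worse else value < t.limit
        if breached:
            alerts.append(f"[{t.severity}] {t.metric}={value} breached limit {t.limit}")
    return alerts


thresholds = [
    Threshold("dashboard_load_seconds", 5.0, "warning"),
    Threshold("pipeline_failures_24h", 0, "critical"),
    Threshold("data_freshness_hours", 24, "critical"),
    Threshold("daily_active_users", 10, "warning", higher_is_worse=False),
]
latest = {"dashboard_load_seconds": 7.2, "pipeline_failures_24h": 0,
          "daily_active_users": 14}
for alert in evaluate(latest, thresholds):
    print(alert)
```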

In parallel to monitoring, User Training & Documentation should be rolled out to any data product users. Best practice would be to develop these training materials during the agile sprint phase. Support channels for user questions, training sessions, product champions/ owners, and user manuals are all things that need to be factored into this activity.

Deliverables

Live Data Product – A fully operational product accessible to end-users

Product Testing Plan – A plan to test and understand how the product team will monitor the data product after go-live (metrics, KPIs)

📝 Related Reads

End Products of the Deploy Stage

How to Build Data Products - Deploy | Part 3/4

Continuous Improvement

Seeking out feedback on the live data product and implementing updates to enhance and optimise its effectiveness.

Continuous improvement isn’t a real step; it is more of an approach to the continued evolution of the data product. This is enabled by a User Feedback Loop, where ongoing comments, suggestions and performance data are collected to input into future designs/ iterations of the product.

This feedback should be facilitated by the Data Product Owner/ Manager, who is nominated to own the data product once it goes into production. This feedback loop needs to extend beyond users to other data teams, as changes in security policies, data quality standards, platform infrastructure, etc., will all impact product management.

In addition to the user feedback, product owners/ managers should stay on top of Product Tracking & ROI Reporting. Being data-driven should extend to knowing whether your data products are helping deliver value and meet business goals. This builds on the Monitoring Setup activity.

Deliverables

User Feedback – Insights from users about existing pain points, opportunities for improvement, and best practices to inform product updates

Product Updates – Recommendations for the product based on user needs and KPI outputs. These should be tangible and executable to facilitate expected improvements.

Usage & Value KPIs – Accurate metrics via dashboards or reports that demonstrate the performance of the data product against business objectives and needs
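Usage KPIs usually start from raw usage events. The sketch below assumes a hypothetical log of `(user_id, event_date)` pairs and a known number of target users, and computes active users, adoption rate, and events per active user over a rolling window. Value KPIs would additionally need business outcome data, which this sketch does not cover.

```python
from datetime import date, timedelta


def usage_kpis(events: list[tuple[str, date]], target_users: int,
               as_of: date, window_days: int = 7) -> dict[str, float]:
    """Compute simple adoption KPIs from (user_id, event_date) usage events."""
    start = as_of - timedelta(days=window_days - 1)
    in_window = [(user, day) for user, day in events if start <= day <= as_of]
    active = {user for user, _ in in_window}
    return {
        "active_users": len(active),
        "adoption_rate": len(active) / target_users if target_users else 0.0,
        "events_per_active_user": len(in_window) / len(active) if active else 0.0,
    }


events = [
    ("ana", date(2024, 6, 3)),
    ("ana", date(2024, 6, 5)),
    ("ben", date(2024, 6, 6)),
    ("cho", date(2024, 5, 20)),  # outside the 7-day window
]
kpis = usage_kpis(events, target_users=10, as_of=date(2024, 6, 7))
print(kpis)  # {'active_users': 2, 'adoption_rate': 0.2, 'events_per_active_user': 1.5}
```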

📝 Related Reads

End Products of the Evolve Stage

How to Build Data Products - Evolve | Part 4/4

📝 One from the MD101 Archives

Dylan covered several interesting nuances in this exhaustive piece. Here’s a rough overview we pulled from the archives: a bare-bones visual summary of the end-to-end lifecycle with the outcome of each stage.

Data Products aren’t an easy subject. For many users, they are the endpoint of the data lifecycle, and everything in the Data Ecosystem leads to them.

That is why getting Data Products right is so important! When trying to prove the business value of data, the foundations and operational delivery model are essential to ensuring a data product gets used. So good luck, and I hope this article helps you put your data product development on the right track!

From The MD101 Team

Bonus for Sticking With Us to the End!

🧡 The Data Product Playbook

Here’s your own copy of the Actionable Data Product Playbook. With 500+ downloads so far and quality feedback, we are thrilled with the response to this 6-week guide we’ve built with industry experts and practitioners. Stay tuned on moderndata101.com for more actionable resources from us!

🧡 The Ultimate Cookbook for Data Product Aspirants

🎯 End-to-End Guide to Building Data Products

This is a collection of materials addressing all you need to know about building data products for your use cases or your organisation. We keep updating this series.

📝 Author Connect

Thanks for the read! Comment below and follow my newsletter for more insights! Feel free to also follow me on LinkedIn (very active) or Medium (increasingly active). See you amazing folks next week in The Data Ecosystem!